运行一半,报这个错误是什么情况,我的是openmv4 plus,图像都出来了,然后报这个错误

@kidswong999 # Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

net = "trained.tflite"

labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

print(clock.fps(), "fps")出现这个问题怎么解决:RuntimeError: Frame capture has timed out

# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

net = "trained.tflite"

labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

print(clock.fps(), "fps")

MemoryError: Out of fast Frame Buffer Stack Memory

Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

net = "trained.tflite"

labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

print(clock.fps(), "fps")不是说可以训练小一点的lenet模型在M4上面运行的嘛?怎么解决哦?

# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

net = "trained.tflite"

labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

print(clock.fps(), "fps")

为何对数字1单独进行训练识别,串口终端还显示对数字2,3,4的识别呢?

Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.GRAYSCALE) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

net = "trained.tflite"

labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.5, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

print(clock.fps(), "fps")生成出来的程序运行时出错误OSError【Errno 2】ENOENT应该怎么解决?

Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

net = "trained.tflite"

labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

print(clock.fps(), "fps")请问iic和串口uart不能同时使用吗,一起用就会报错OSError: [Errno 19] ENODEV

# Welcome to the OpenMV IDE! Click on the green run arrow button below to run the script!

import sensor, image, time, utime

from machine import I2C

from pyb import RTC

from vl53l1x import VL53L1X

from pyb import UART

i2c = I2C(2)

distance = VL53L1X(i2c)

rtc = RTC()

rtc.datetime((2021,11,25, 4,20,11, 0, 0))

uart = UART(3)

uart.init(115200, timeout_char=1000)

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.skip_frames(time = 2000) # Wait for settings take effect.

clock = time.clock() # Create a clock object to track the FPS.

while(True):

clock.tick() # Update the FPS clock.

img = sensor.snapshot() # Take a picture and return the image.

uart.write(img.compressed(quality=50)) # Note: OpenMV Cam runs about half as fast when connected

# uart.write("range: mm ", distance.read())

# uart.write(rtc.datetime())

print("range: mm ", distance.read())

time.sleep_ms(1000)

大佬们,如何弄:有多个不规则形状的绿色和棕色物体,用openMV识别,并求出得到绿色在总物体中占的比例

@ohu5 很简单,先使用阈值助手设置绿色和棕色的阈值,然后二值化,然后计算直方图就行了。

hist[0]和hist[1]就是颜色比例

import sensor, image, time

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.skip_frames(time = 2000) # Wait for settings take effect.

clock = time.clock() # Create a clock object to track the FPS.

green_threshold = (10,30,30,40,50,60)#随便写的,需要重新设置阈值

brown_threshold = (40,50,-30,20,-10,10)#随便写的,需要重新设置阈值

while(True):

clock.tick()

img = sensor.snapshot()

img.binary([green_threshold, brown_threshold], to_bitmap=True)

hist = img.get_histogram(bins=2).l_bins()

print(hist)

print(clock.fps())

使用LCD模块,3分钟就死机,请问是什么原因?

可有可能是代码在哪里报错了,脱机运行看不到。

可以在代码最外面加 try, 如果捕获异常就闪灯

import sensor, image, time

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.skip_frames(time = 2000) # Wait for settings take effect.

clock = time.clock() # Create a clock object to track the FPS.

try:

# raise "error"

while(True):

clock.tick() # Update the FPS clock.

img = sensor.snapshot() # Take a picture and return the image.

print(clock.fps()) # Note: OpenMV Cam runs about half as fast when connected

# to the IDE. The FPS should increase once disconnected.

except:

from pyb import LED

red_led = LED(1)

while(True):

red_led.on()

time.sleep_ms(1000)

red_led.off()

time.sleep_ms(1000)

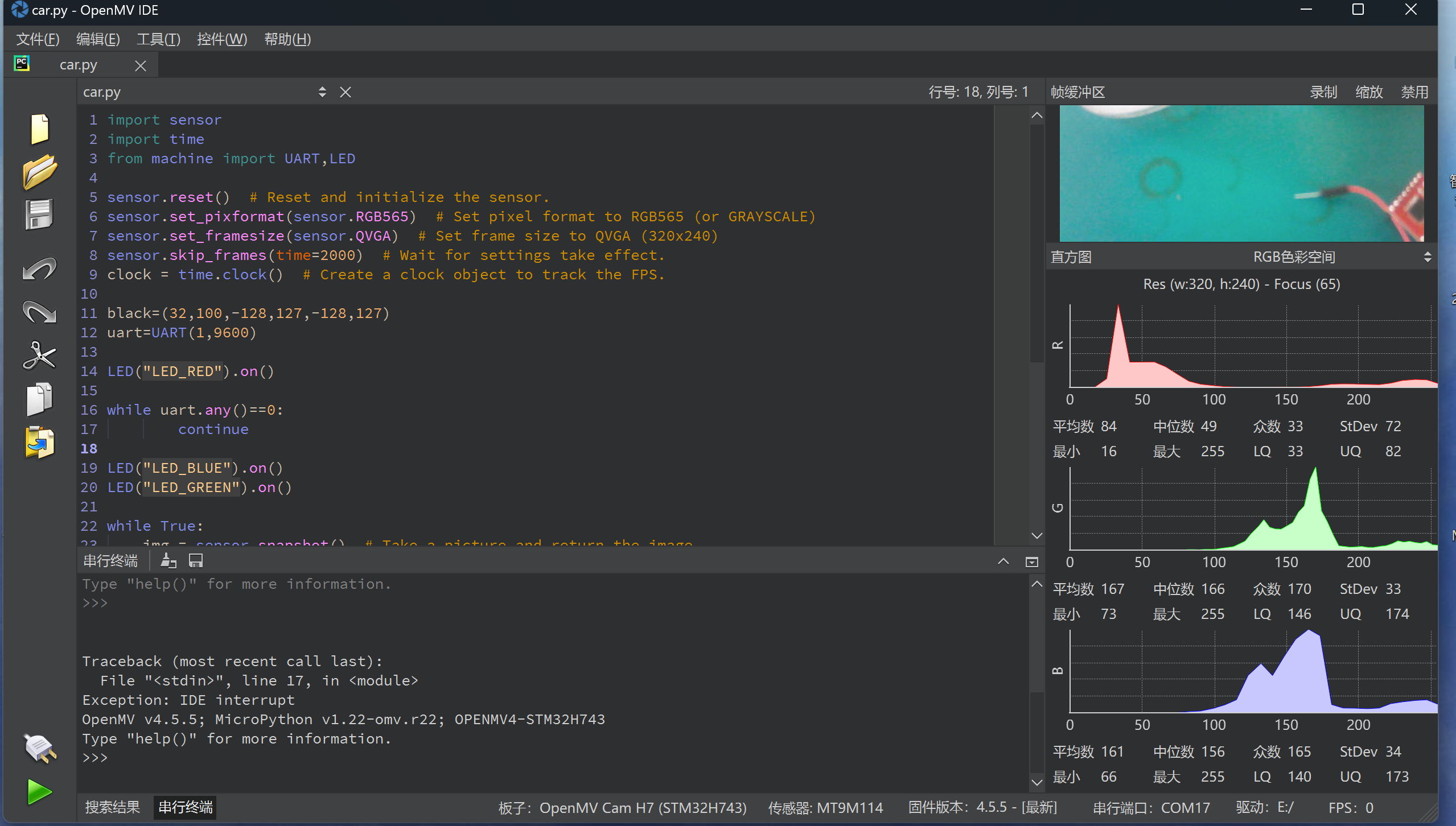

代码没有问题,但是运行发生中断。

import sensor

import time

from machine import UART,LED

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.skip_frames(time=2000) # Wait for settings take effect.

clock = time.clock() # Create a clock object to track the FPS.

black=(32,100,-128,127,-128,127)

uart=UART(1,9600)

LED("LED_RED").on()

while uart.any()==0:

continue

LED("LED_BLUE").on()

LED("LED_GREEN").on()

while True:

img = sensor.snapshot() # Take a picture and return the image.

blobs=img.find_blobs([black],invert=True,roi=[0,100,320,48])

if len(blobs)==1:

img.draw_rectangle([0,100,320,48])

img.draw_rectangle(blobs[0][0:4], color=(0,255,0))

uart.write('#'+str(blobs[0].cx())+'$')

print('#'+str(blobs[0].cx())+'$')

elif len(blobs)==0:

uart.write('S')

print('S')

LED("LED_GREEN").off()

while True:

continue