How do I control the angle it turns to? And how do I make it stop wherever I want?
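A common way to move a positional servo in a controlled way, and stop it wherever you like, is to step it toward the target a few degrees at a time instead of jumping there in one command. Every intermediate value is an ordinary angle command, so you can stop sending steps at any point and the servo holds that position. The sketch below is plain Python and only computes the sequence of angles. The step size and the `should_stop` predicate are hypothetical. On OpenMV, each value would be sent with something like `s1.angle(a)` followed by a short `time.sleep_ms(...)`.

```python
def ramp(start, target, step):
    """Angles from start toward target (target included), at most `step` degrees apart."""
    if step <= 0:
        raise ValueError("step must be positive")
    angles = []
    current = start
    while current != target:
        delta = target - current
        # Move by `step` toward the target, but never overshoot it.
        if abs(delta) <= step:
            current = target
        elif delta > 0:
            current += step
        else:
            current -= step
        angles.append(current)
    return angles


def ramp_until(start, target, step, should_stop):
    """Step toward target, but stop early as soon as should_stop(angle) is True."""
    reached = start
    for a in ramp(start, target, step):
        reached = a  # on the board (assumption): s1.angle(a); time.sleep_ms(20)
        if should_stop(a):
            break
    return reached


print(ramp(0, 50, 20))                           # [20, 40, 50]
print(ramp_until(0, 90, 10, lambda a: a >= 35))  # 40
```

Stepping trades speed for smoothness. A smaller step with a short sleep gives gentler motion and finer stopping points.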

Posts by qdok

-

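RE: With a 180-degree servo, can I command it to turn 180 degrees directly? If so, why does it only turn 90 degrees when I give it that command? (posted in OpenMV Cam)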

@kidswong999 If I wanted to write my own servo control function, how would I write it?
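A hand-written servo function usually comes down to one mapping: a hobby servo is typically driven by a 50 Hz signal, and the width of each pulse sets the position. The sketch below assumes a 500 to 2500 µs range for 0 to 180°. That range is an assumption and varies between servo models, so calibrate it for yours. It converts an angle into a pulse width and into the equivalent PWM duty cycle. `angle_to_pulse_us` and `pulse_to_duty_percent` are hypothetical helper names.

```python
PERIOD_US = 20000  # 50 Hz servo frame, typical for hobby servos (assumption)


def angle_to_pulse_us(angle, min_us=500, max_us=2500, max_angle=180):
    """Linearly map 0..max_angle degrees onto min_us..max_us, clamping out-of-range angles."""
    angle = max(0, min(max_angle, angle))
    return min_us + (max_us - min_us) * angle / max_angle


def pulse_to_duty_percent(pulse_us, period_us=PERIOD_US):
    """Percentage of the PWM period during which the pulse is high."""
    return 100.0 * pulse_us / period_us


for a in (0, 90, 180):
    us = angle_to_pulse_us(a)
    print(a, us, pulse_to_duty_percent(us))
```

On the board, MicroPython's `pyb.Servo` also has a `pulse_width()` method, so a call such as `s1.pulse_width(int(angle_to_pulse_us(135)))` would bypass the built-in angle scaling. Check the pulse limits your servo accepts before sending values near the ends of the range.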

-

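How can I make this program start recognizing only when a piece of trash is put in, instead of recognizing everything the camera sees as soon as it is powered on? (posted in OpenMV Cam)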

```python
# main.py -- put your code here!
import pyb, time
from pyb import Servo
import sensor, image, time, os, tf

led = pyb.LED(3)
usb = pyb.USB_VCP()
# while (usb.isconnected()==False):
#     led.on()
#     time.sleep(150)
#     led.off()
#     time.sleep(100)
#     led.on()
#     time.sleep(150)
#     led.off()
#     time.sleep(600)

# Edge Impulse - OpenMV Image Classification Example

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
    a = obj.output()
    maxx = max(a)
    print(maxx)
    maxxx = a.index(max(a))
    print(maxxx)
    s1 = Servo(1)
    s2 = Servo(2)
    if maxxx == 3:
        s1.angle(90)
        time.sleep_ms(1000)
        s1.angle(0)
        time.sleep_ms(1000)
        s2.angle(90)
        time.sleep_ms(1000)
        s2.angle(0)
        time.sleep_ms(3000)
        s1.angle(180)
        time.sleep_ms(1000)
        s1.angle(0)
```
-
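On the question of starting recognition only when trash is actually put in: one simple approach is to take a background frame while the bin is empty. On each later frame, measure how much the image differs from that background, and run `tf.classify()` only after the difference has stayed above a threshold for a few consecutive frames. The sketch below models frames as flat lists of grayscale values so the logic can be tested off-board. The threshold and hold count are hypothetical tuning values. OpenMV's own frame-differencing examples compute a similar measure with image methods, which would be faster on the camera.

```python
def mean_abs_diff(frame, background):
    """Average per-pixel absolute difference between two equal-length grayscale frames."""
    if len(frame) != len(background):
        raise ValueError("frames must be the same size")
    return sum(abs(a - b) for a, b in zip(frame, background)) / len(frame)


class PresenceTrigger:
    """Report True only after the scene has differed from the background
    for `hold` consecutive frames (a simple debounce against flicker)."""

    def __init__(self, background, threshold, hold=3):
        self.background = background
        self.threshold = threshold
        self.hold = hold
        self.count = 0

    def update(self, frame):
        if mean_abs_diff(frame, self.background) > self.threshold:
            self.count += 1
        else:
            self.count = 0  # scene looks empty again, restart the count
        return self.count >= self.hold


empty_bin = [10, 10, 10, 10]
trigger = PresenceTrigger(empty_bin, threshold=10, hold=2)
for frame in ([11, 11, 11, 11], [60, 60, 10, 10], [60, 60, 10, 10]):
    print(trigger.update(frame))  # False, False, True
```

In the main loop, classification and the servo moves would then sit behind `if trigger.update(...):`, so nothing is sorted while the camera sees only the empty bin.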

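RE: With a 180-degree servo, can I command it to turn 180 degrees directly? If so, why does it only turn 90 degrees when I give it that command? (posted in OpenMV Cam)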

@kidswong999 That doesn't work either. Running your code, the servo still only turns 90 degrees and then returns to 0 degrees.

-

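With a 180-degree servo, can I command it to turn 180 degrees directly? If so, why does it only turn 90 degrees when I give it that command? (posted in OpenMV Cam)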

```python
from pyb import Servo
import time

s1 = Servo(1)
s2 = Servo(2)
s1.angle(180)
time.sleep_ms(1000)
```
-
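About the 90° versus 180° behaviour in this thread: this is a hypothesis, not something confirmed here. `pyb.Servo.angle()` appears to be centred on 0°, with the pulse computed as centre plus a proportional offset and then clamped to the calibrated minimum and maximum. If so, `angle(0)` is the middle of the travel, and `angle(90)` and `angle(180)` can both hit the same upper limit, which would look exactly like "it only turns 90 degrees". The model below uses assumed calibration numbers; read your board's real values with `s1.calibration()`.

```python
# Assumed calibration values in microseconds (read the real ones with s1.calibration()).
PULSE_MIN = 640
PULSE_MAX = 2420
PULSE_CENTRE = 1500
PULSE_ANGLE_90 = 2470  # assumed pulse width requested for +90 degrees


def modelled_pulse_us(angle):
    """Hypothetical model of angle(): centre + proportional offset, clamped to the limits."""
    pulse = PULSE_CENTRE + (PULSE_ANGLE_90 - PULSE_CENTRE) * angle / 90
    return max(PULSE_MIN, min(PULSE_MAX, pulse))


def full_range_to_servo_angle(physical_angle):
    """Map a 0..180 request onto the -90..+90 range that angle() is centred on."""
    return physical_angle - 90


for a in (-90, 0, 90, 180):
    print(a, modelled_pulse_us(a))
```

Under these assumptions, sweeping from `s1.angle(-90)` to `s1.angle(90)` (or setting `s1.pulse_width(...)` directly) is what reaches both ends. Whether the calibrated limits cover your servo's full 180° still needs to be checked on the hardware.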

Why does the servo turn the first time I run this program, but not on later runs? (posted in OpenMV Cam)

```python
import pyb

s1 = pyb.Servo(1)  # create a servo object on position P7
s1.angle(45)       # move servo 1 to 45 degrees
```
-
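One plausible explanation, not confirmed in this thread: after the first run the servo horn is already sitting at 45°, so commanding 45° again on the next run is a correct but invisible "move". The hypothetical `RecordingServo` below remembers its position and records how far each command actually moves it, which makes the difference visible. The fix it suggests is to command a different angle (for example the home position) before moving to 45°.

```python
class RecordingServo:
    """Hypothetical stand-in for a positional servo: remembers where it is and
    records how many degrees each angle() command actually moves it."""

    def __init__(self, position=0):
        self.position = position
        self.moves = []

    def angle(self, target):
        self.moves.append(abs(target - self.position))
        self.position = target


s = RecordingServo()
s.angle(45)     # first run: visible move of 45 degrees
s.angle(45)     # second run: already there, nothing to see
s.angle(0)      # go home first...
s.angle(45)     # ...so the move to 45 degrees is visible again
print(s.moves)  # [45, 0, 45, 45]
```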

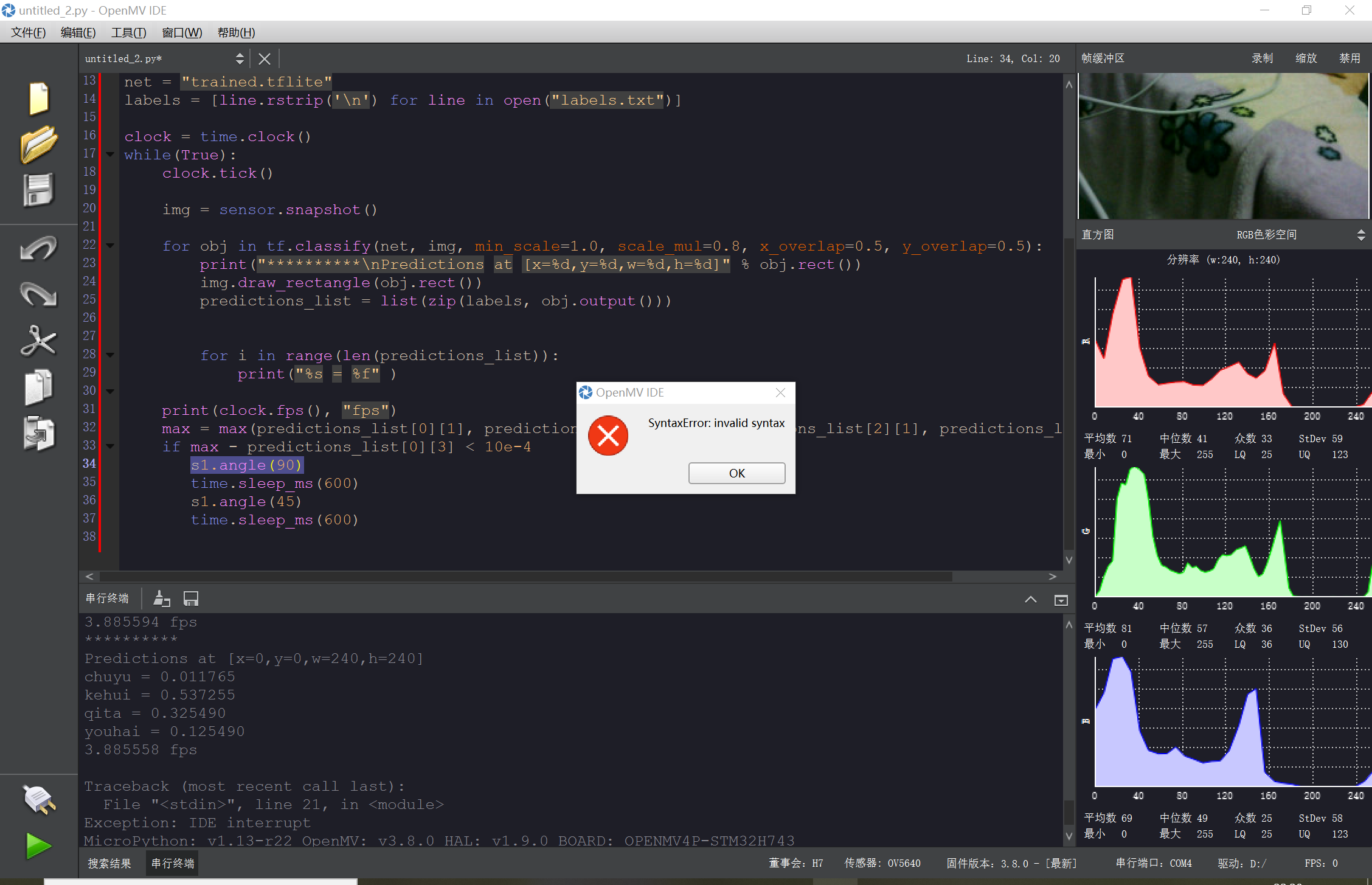

Is there something wrong with this program? (posted in OpenMV Cam)

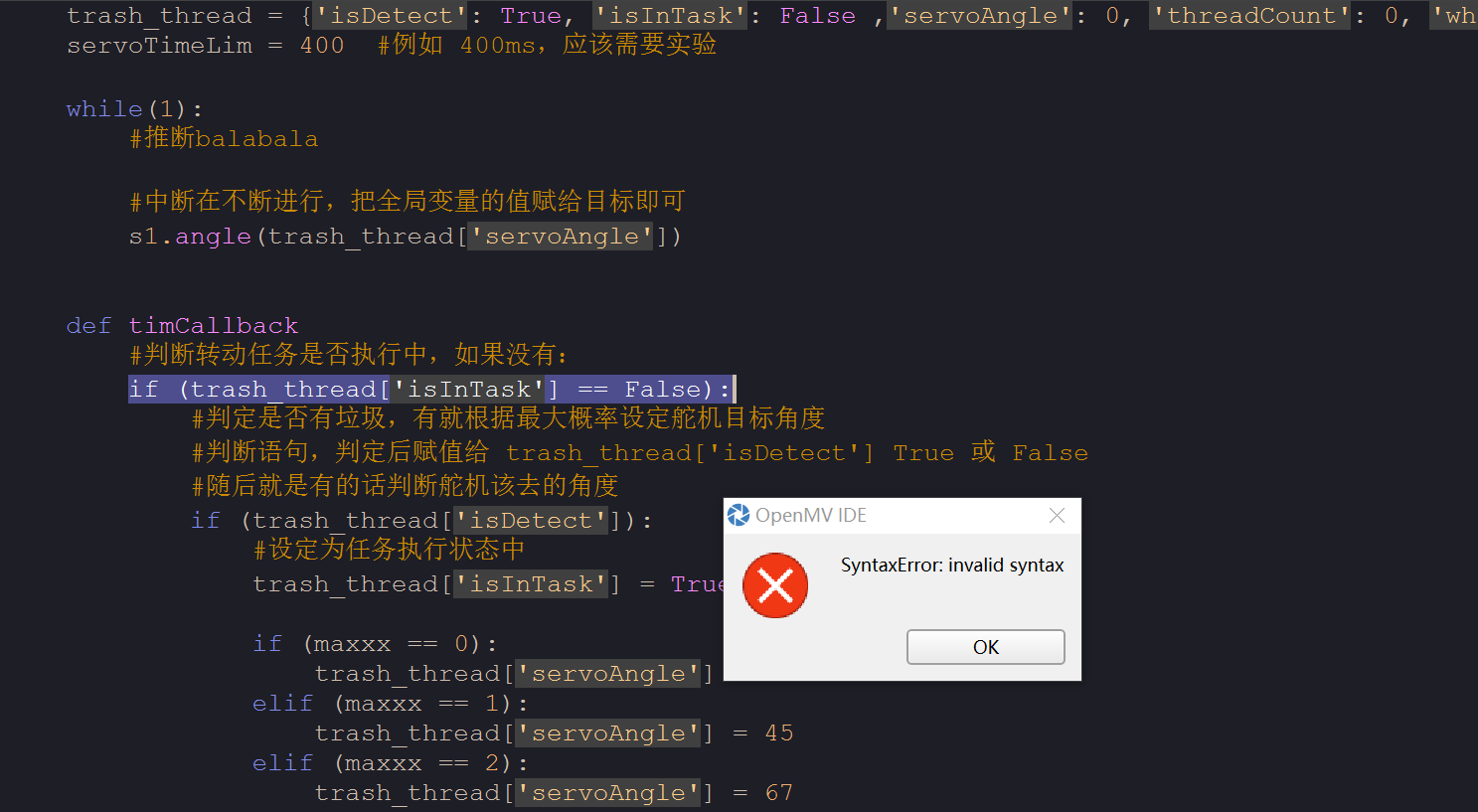

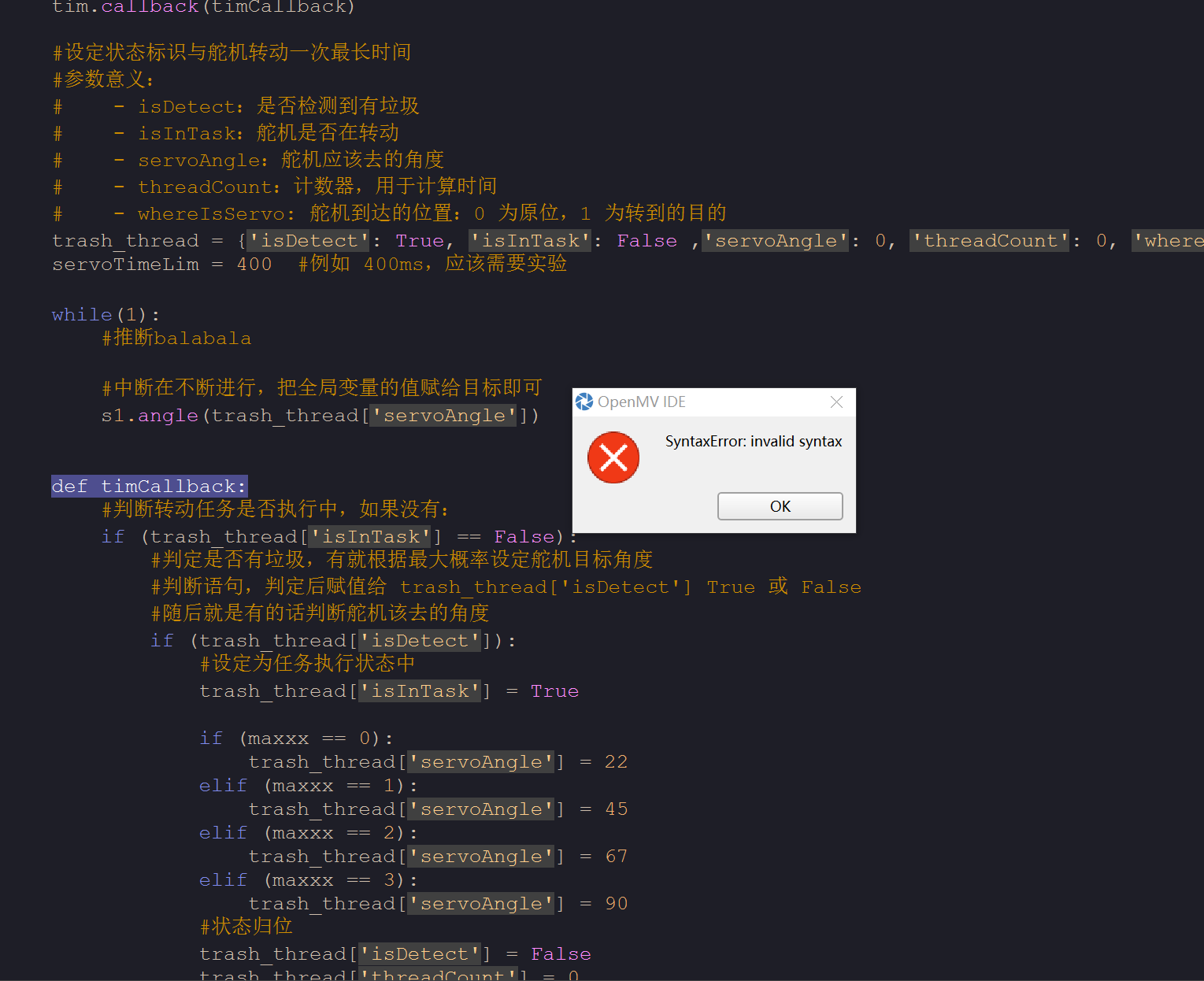

```python
# Edge Impulse - OpenMV Image Classification Example
from pyb import Servo
import sensor, image, time, os, tf

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
    a = obj.output()
    maxx = max(a)
    print(maxx)
    maxxx = a.index(max(a))
    print(maxxx)
    s1 = Servo(1)
    s2 = Servo(2)

    tim = pyb.Timer(4)  # Any timer will do
    # Initialize the timer
    tim.init(freq = 200)  # Suppose we want to check the servo state 200 times per second
    # Set the interrupt handler to the function `timCallback`
    tim.callback(timCallback)
    # Set up the state flags and the maximum time one servo movement takes
    # Field meanings:
    # - isDetect: whether trash has been detected
    # - isInTask: whether the servo is currently moving
    # - servoAngle: the angle the servo should move to
    # - threadCount: counter used to measure time
    # - whereIsServo: where the servo has got to: 0 = home position, 1 = target position
    trash_thread = {'isDetect': True, 'isInTask': False, 'servoAngle': 0, 'threadCount': 0, 'whereIsServo': 0}
    servoTimeLim = 400  # e.g. 400 ms; needs to be determined experimentally
    while(1):
        # run inference, blah blah
        # The interrupt keeps running; just apply the global value to the target
        s1.angle(trash_thread['servoAngle'])

    def timCallback
        # Is a movement task in progress? If not:
        if (trash_thread['isInTask'] == False):
            # Decide whether there is trash; if so, set the servo target angle from the highest probability
            # Decision statement: after deciding, assign True or False to trash_thread['isDetect']
            # Then, if there is trash, work out which angle the servo should go to
            if (trash_thread['isDetect']):
                # Switch to the task-in-progress state
                trash_thread['isInTask'] = True
                if (maxxx == 0):
                    trash_thread['servoAngle'] = 22
                elif (maxxx == 1):
                    trash_thread['servoAngle'] = 45
                elif (maxxx == 2):
                    trash_thread['servoAngle'] = 67
                elif (maxxx == 3):
                    trash_thread['servoAngle'] = 90
                # Reset the state
                trash_thread['isDetect'] = False
                trash_thread['threadCount'] = 0
                trash_thread['whereIsServo'] = 0
            else:
                # No detection: stay at the home position
                trash_thread['servoAngle'] = 0
        # Is a movement task in progress? If so:
        else:
            # Once rotation starts, increment the counter on every interrupt, then compute the time since
            # rotation began; if it exceeds the limit, assume the servo has reached its position
            trash_thread['threadCount'] += 1
            if (trash_thread['threadCount'] * 50 >= servoTimeLim):
                # On arrival, update the recorded servo position
                trash_thread['whereIsServo'] += 1
                # If this movement's goal was returning home
                if (trash_thread['whereIsServo'] == 2):
                    trash_thread['whereIsServo'] = 0
                    trash_thread['isInTask'] = False
                    trash_thread['threadCount'] = 0
                # If this movement's goal was reaching the target position
                elif (trash_thread['whereIsServo'] == 1):
                    trash_thread['servoAngle'] = 0
            else :
                pass
```
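The tick-counting idea in the program above can be written as a small state machine that the timer callback drives and the main loop reads. The sketch below is a reworking under stated assumptions, not the original author's code. It derives the tick period from the timer frequency: at 200 Hz one tick is 5 ms, whereas the program above multiplies by 50. It sticks to integer arithmetic and attribute updates in `tick()`, because MicroPython does not allow heap allocation inside hard interrupt handlers. `SorterState` is a hypothetical name. The angle table is copied from the if/elif chain above.

```python
IDLE, MOVING_OUT, RETURNING = 0, 1, 2

# Class index -> target angle, taken from the if/elif chain in the program above.
DEFAULT_ANGLES = {0: 22, 1: 45, 2: 67, 3: 90}


class SorterState:
    """Tick-driven servo sequencer: call tick() once per timer period.
    It only updates numbers; the main loop applies self.angle to the servo."""

    def __init__(self, freq_hz, move_time_ms, angles=DEFAULT_ANGLES):
        self.period_ms = 1000 // freq_hz                     # 200 Hz -> 5 ms per tick
        self.move_ticks = max(1, move_time_ms // self.period_ms)
        self.angles = angles
        self.state = IDLE
        self.angle = 0
        self.pending = None  # class index written by the main loop after inference
        self.count = 0

    def tick(self, timer=None):  # pyb timer callbacks receive the timer object
        if self.state == IDLE:
            if self.pending is not None:
                self.angle = self.angles.get(self.pending, 0)
                self.pending = None
                self.state = MOVING_OUT
                self.count = 0
            return
        self.count += 1
        if self.count >= self.move_ticks:
            self.count = 0
            if self.state == MOVING_OUT:
                self.angle = 0          # target reached, head back home
                self.state = RETURNING
            else:
                self.state = IDLE       # home again, ready for the next item


sm = SorterState(freq_hz=200, move_time_ms=400)
sm.pending = 3
sm.tick()
print(sm.state, sm.angle)  # 1 90
```

On the board this would presumably be wired up as `tim = pyb.Timer(4, freq=200)` then `tim.callback(sm.tick)`, with the main loop setting `sm.pending = maxxx` when the state is `IDLE` and calling `s1.angle(sm.angle)`. Note that the posted program uses `pyb.Timer` while only importing `Servo` from `pyb`, so it would also need `import pyb`.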

-

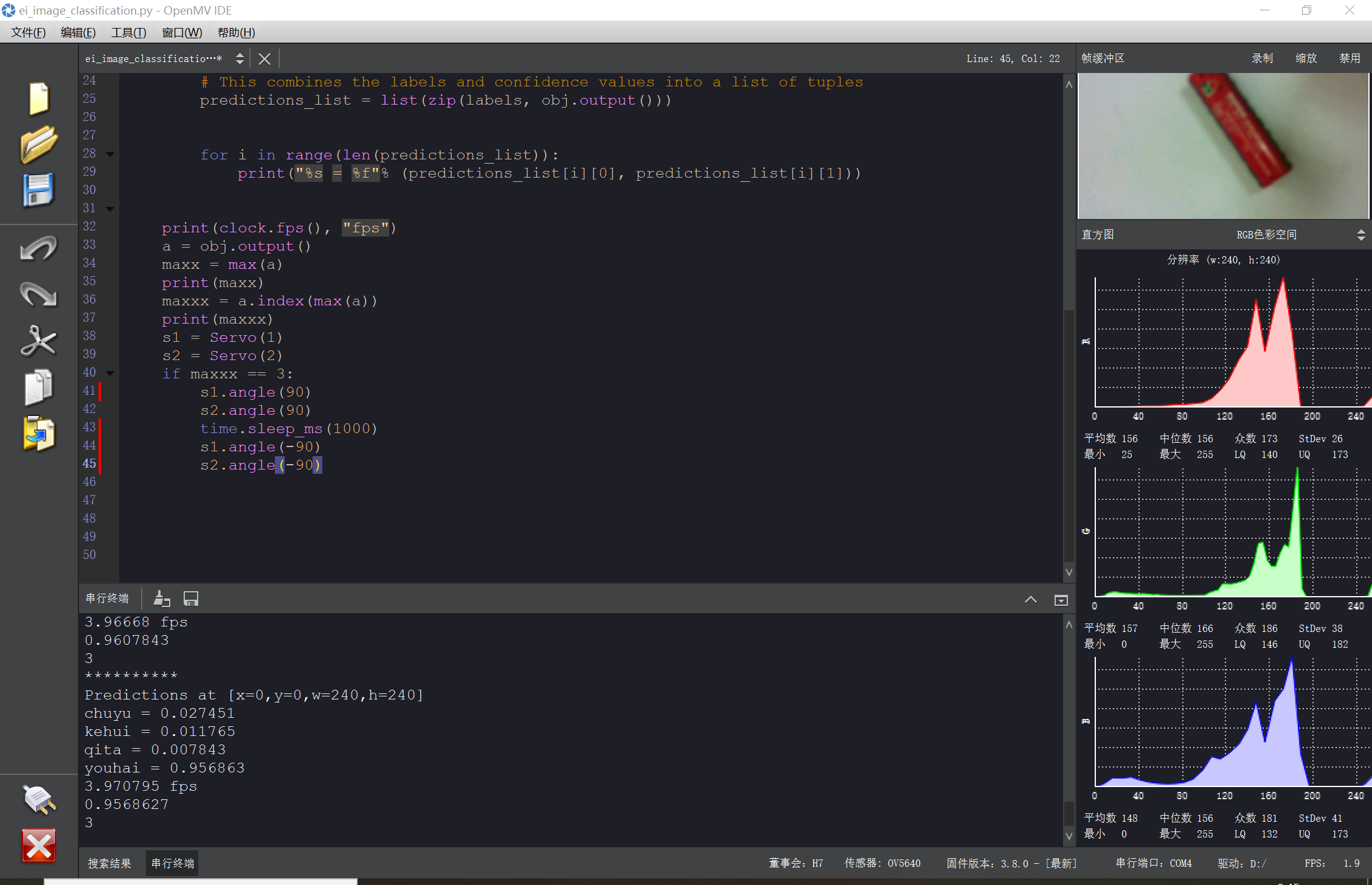

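Why does only s2 turn when I run this program? How can I make s1 turn by some angle first, then s2 turn by some angle, and then have them return to their home positions one after the other? (posted in OpenMV Cam)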

```python
# Edge Impulse - OpenMV Image Classification Example
from pyb import Servo
import sensor, image, time, os, tf

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
    a = obj.output()
    maxx = max(a)
    print(maxx)
    maxxx = a.index(max(a))
    print(maxxx)
    s1 = Servo(1)
    s2 = Servo(2)
    if maxxx == 3:
        s1.angle(180)
        s2.angle(90)
```
-
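In the loop above, `s1.angle(180)` and `s2.angle(90)` are issued back to back with no wait in between. The servos are also re-created on every frame, and the block runs again on every frame where class 3 wins. A step list executed strictly in order, with a hold time after each command, makes the "s1, then s2, then both back home in turn" sequence explicit. In the sketch below, `set_angle` and `sleep_ms` are injected so the logic can be tested off-board. The step values are hypothetical.

```python
def run_sequence(steps, set_angle, sleep_ms):
    """Execute (servo_name, angle, hold_ms) steps strictly one after another."""
    for name, angle, hold_ms in steps:
        set_angle(name, angle)
        sleep_ms(hold_ms)  # let this servo finish before the next command


# Hypothetical sequence: s1 out, s2 out, then s1 home, then s2 home.
SEQUENCE = [
    ("s1", 90, 1000),
    ("s2", 90, 1000),
    ("s1", 0, 1000),
    ("s2", 0, 1000),
]

log = []
run_sequence(SEQUENCE, lambda n, a: log.append((n, a)), lambda ms: log.append(ms))
print(log)
```

On OpenMV this would presumably look like creating `servos = {"s1": Servo(1), "s2": Servo(2)}` once, before `while(True):`, and then calling `run_sequence(SEQUENCE, lambda n, a: servos[n].angle(a), time.sleep_ms)` only when a new item has been classified.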

How do I compare the elements of this list and extract the one with the highest probability? (posted in OpenMV Cam)

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        # for i in range(len(predictions_list)):
        #     print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
```
-
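`predictions_list` is a list of `(label, confidence)` tuples, so the most likely entry can be picked with the built-in `max()` and a `key` function that compares the second element. The same idea gives the winning index directly from `obj.output()`. The labels in the example are hypothetical.

```python
def best_prediction(predictions_list):
    """Return the (label, confidence) tuple with the highest confidence."""
    return max(predictions_list, key=lambda p: p[1])


def best_index(scores):
    """Index of the largest score; same result as scores.index(max(scores))."""
    return max(range(len(scores)), key=lambda i: scores[i])


preds = [("glass", 0.1), ("paper", 0.7), ("metal", 0.2)]
print(best_prediction(preds))    # ('paper', 0.7)
print(best_index([0.1, 0.7, 0.2]))  # 1
```

Inside the `for obj in ...` loop, `label, score = best_prediction(predictions_list)` would then replace the commented-out print loop.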

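How should I change this program so that it only outputs the most likely trash type, instead of listing the probability of every type? (posted in OpenMV Cam)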

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
```
-
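To print only the most likely type, the `for i in range(len(predictions_list))` loop can be replaced by a single formatted line for the top class. The sketch below also skips printing when the top confidence is low. `min_confidence=0.6` is a hypothetical threshold that would need tuning against your model.

```python
def top_label_line(labels, scores, min_confidence=0.6):
    """One 'label = confidence' line for the most likely class,
    or None when the model is not confident enough."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < min_confidence:
        return None
    return "%s = %f" % (labels[best], scores[best])


print(top_label_line(["paper", "metal"], [0.3, 0.7]))  # metal = 0.700000
print(top_label_line(["paper", "metal"], [0.5, 0.5]))  # None
```

In the loop body this would presumably become `line = top_label_line(labels, obj.output())` followed by `if line: print(line)`.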

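How do I enable a timer interrupt so that the recognition part runs inside it as a background task, and the servo part receives its output (the maximum value)? (posted in OpenMV Cam)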

```python
# Edge Impulse - OpenMV Image Classification Example
import sensor, image, time, os, tf

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
```
-
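Running `tf.classify()` inside a timer interrupt is unlikely to work. MicroPython interrupt handlers are meant to be short and may not allocate memory, and inference does allocate. A common alternative is the reverse arrangement: keep inference in the main loop and make the servo part non-blocking, so that on each loop iteration it checks how much time has passed and advances to the next step only when it is due. In the sketch below, `NonBlockingSequencer` is a hypothetical name, and the current time is passed in as a plain millisecond number so the logic can be tested. On the board it would come from `time.ticks_ms()`, with `time.ticks_diff()` used for the subtraction so that timer wrap-around is handled.

```python
class NonBlockingSequencer:
    """Advance through (angle, hold_ms) steps without sleeping.
    Call update(now_ms) on every loop iteration; it returns the angle to command."""

    def __init__(self):
        self.steps = []
        self.index = 0
        self.started_ms = 0
        self.angle = 0

    def start(self, steps, now_ms):
        self.steps = list(steps)
        self.index = 0
        self.started_ms = now_ms
        if self.steps:
            self.angle = self.steps[0][0]

    def busy(self):
        return self.index < len(self.steps)

    def update(self, now_ms):
        if self.busy():
            hold_ms = self.steps[self.index][1]
            # On the board: time.ticks_diff(now_ms, self.started_ms)
            if now_ms - self.started_ms >= hold_ms:
                self.index += 1
                self.started_ms = now_ms
                if self.busy():
                    self.angle = self.steps[self.index][0]
        return self.angle


seq = NonBlockingSequencer()
seq.start([(90, 500), (0, 500)], now_ms=0)
for t in (100, 500, 900, 1000):
    print(t, seq.update(t), seq.busy())
```

The main loop would then classify a frame, call `seq.start(...)` with the target angle when a confident result arrives and `not seq.busy()`, and finish every iteration with `s1.angle(seq.update(time.ticks_ms()))`. Inference therefore never waits on the servo, and the servo never waits on `time.sleep_ms()`.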

RE: In trash sorting, how do I make the servo turn to the corresponding angle after the trash category is recognized? (posted in OpenMV Cam)

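@kidswong999 So with that, I could enable the timer interrupt, run the recognition part inside it as a background task, and have the servo part take its maximum output value, right?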
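Turning "the category was recognized" into "the servo goes to this angle" can be a lookup table instead of an if/elif chain, together with a confidence check so that weak guesses do not move the servo. The sketch below reuses the angles 22/45/67/90 from the timer-interrupt program earlier on this page. `target_angle` and the 0.7 threshold are hypothetical.

```python
# Class index -> servo angle in degrees (values from the if/elif chain earlier on this page).
ANGLE_TABLE = {0: 22, 1: 45, 2: 67, 3: 90}


def target_angle(scores, angle_table=ANGLE_TABLE, min_confidence=0.7):
    """Angle for the most likely class, or None if the model is unsure
    or the class has no angle assigned."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < min_confidence:
        return None
    return angle_table.get(best)


print(target_angle([0.1, 0.8, 0.05, 0.05]))  # 45
print(target_angle([0.3, 0.3, 0.2, 0.2]))    # None
```

Returning `None` instead of a default angle lets the caller decide what "no decision" means, for example keeping the servo at home and trying again on the next frame.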

Adding the colon still gives the same problem.
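The colon alone is not enough for `def timCallback` in the program above. The definition needs parentheses and a parameter, because `pyb.Timer` passes the timer object to its callback. The function must also exist before `tim.callback(timCallback)` runs, and in the posted program that call comes before the `def`. If the callback rebinds (not just mutates) a module-level variable, that variable also needs a `global` declaration. The sketch below uses a hypothetical `FakeTimer` in place of `pyb.Timer` so the wiring can be run off-board.

```python
class FakeTimer:
    """Hypothetical stand-in for pyb.Timer, just enough to show callback wiring."""

    def __init__(self):
        self._cb = None

    def callback(self, fun):
        self._cb = fun

    def fire(self):
        if self._cb is not None:
            self._cb(self)  # like pyb.Timer, pass the timer object to the callback


ticks = 0


def timCallback(timer):  # parentheses, a parameter and the colon are all required
    global ticks         # needed because the callback rebinds a module-level name
    ticks += 1


tim = FakeTimer()
tim.callback(timCallback)  # only valid after timCallback has been defined
for _ in range(3):
    tim.fire()
print(ticks)  # 3
```

Mutating a dictionary entry such as `trash_thread['isInTask'] = True` does not rebind the name `trash_thread`, so that particular variable would not need `global`.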
