Which parts can it be built from?

Posts by ctq3

-

RE: UART 3 communication works on the OpenMV H7 but not on the OpenMV H7 Plus. What could cause this? (posted in OpenMV Cam)

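I don't get any errors when running it either, but when talking to another microcontroller with exactly the same program, the OpenMV4 (H7) communicates successfully and the Plus does not. I don't know why.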

-

UART 3 communication in my OpenMV program works on the OpenMV H7 but not on the OpenMV H7 Plus. What could cause this? (posted in OpenMV Cam)

    import sensor, image, time
    from pyb import UART, LED
    #from pid import PID

    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QQVGA)
    sensor.skip_frames(time = 2000)
    sensor.set_auto_whitebal(False)
    clock = time.clock()

    LED(1).on()
    LED(2).on()
    LED(3).on()

    uart = UART(3, 9600, timeout_char=1000)

    yellow_threshold = [(100, 58, -128, 127, 31, 127), (33, 77, -41, -69, 11, 33)]
    size_threshold = 2000

    def find_max(blobs):
        # Return the blob with the largest bounding-box area (w * h).
        max_size = 0
        max_blob = None
        for blob in blobs:
            if blob[2]*blob[3] > max_size:
                max_blob = blob
                max_size = blob[2]*blob[3]
        return max_blob

    #flag = 0
    while(True):
        clock.tick()
        img = sensor.snapshot()
        blobs = img.find_blobs(yellow_threshold)
        max_blob = None
        if blobs:
            max_blob = find_max(blobs)
            img.draw_rectangle(max_blob[0:4])
            img.draw_cross(max_blob[5], max_blob[6])
        if max_blob:
            pan_error = max_blob.cx()-img.width()/2
            if pan_error < 0:
                print("左移")        # "move left"
                uart.write("$LY!")
                time.sleep_ms(100)
            if pan_error > 0:
                print("右移")        # "move right"
                uart.write("$RY!")
                time.sleep_ms(100)
            if pan_error == 0:
                print("抓取")        # "grab"
                if max_blob.code() == 1:
                    uart.write("$ZC!")
                    time.sleep_ms(400)
                    # flag=1

-
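Each command in the UART 3 script above is framed as `$`, a two-letter code and `!`. When the same script works on one board and not the other, it can help to look at the raw bytes each camera actually sends, for example through a USB-to-serial adapter on a PC. A minimal sketch of a frame extractor for that check, assuming this `$...!` framing is all the receiving microcontroller expects; `parse_frames` is a hypothetical helper, not part of OpenMV:

```python
# Sketch: extract "$XX!" command frames from a received byte stream.
# ASSUMPTION: frames are '$' + payload + '!', as in the uart.write() calls
# in the post; the receiving MCU's real parser is not shown anywhere.

def parse_frames(data):
    """Return the payloads of all complete $...! frames found in `data` (bytes)."""
    frames = []
    payload = None              # None means "not currently inside a frame"
    for b in data:              # iterating over bytes yields ints
        ch = chr(b)
        if ch == "$":
            payload = []        # start (or restart) a frame
        elif ch == "!" and payload is not None:
            frames.append("".join(payload))
            payload = None
        elif payload is not None:
            payload.append(ch)
        # Bytes outside a frame (line noise, wrong baud rate) are ignored.
    return frames


if __name__ == "__main__":
    print(parse_frames(b"$LY!\x00$RY!$ZC"))
```

If bytes captured from the H7 Plus yield no complete frames while the H7's do, that would point toward wiring, pin or baud-rate differences rather than the script itself.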

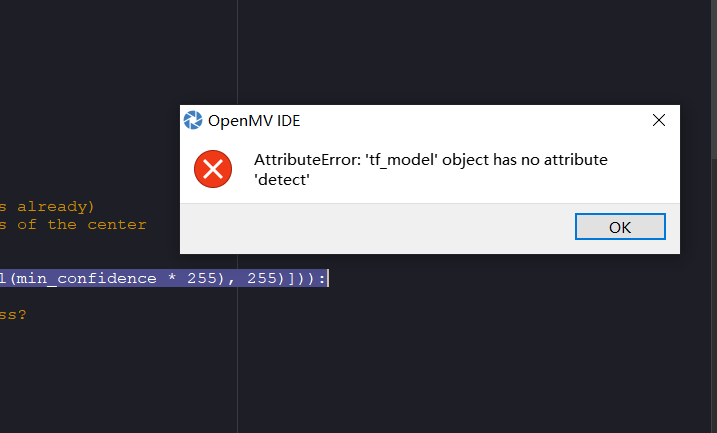

Program generated by Edge Impulse fails with "'tf model' object has no attribute 'detect'" (posted in OpenMV Cam)

    # Edge Impulse - OpenMV Object Detection Example

    import sensor, image, time, os, tf, math, uos, gc

    sensor.reset()                         # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))       # Set 240x240 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    net = None
    labels = None
    min_confidence = 0.5

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    colors = [ # Add more colors if you are detecting more than 7 types of classes at once.
        (255,   0,   0),
        (  0, 255,   0),
        (255, 255,   0),
        (  0,   0, 255),
        (255,   0, 255),
        (  0, 255, 255),
        (255, 255, 255),
    ]

    clock = time.clock()
    while(True):
        clock.tick()

        img = sensor.snapshot()

        # detect() returns all objects found in the image (split out per class already)
        # we skip class index 0, as that is the background, and then draw circles of the center
        # of our objects

        # <-- the error is raised on the next line
        for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):
            if (i == 0): continue # background class
            if (len(detection_list) == 0): continue # no detections for this class?

            print("********** %s **********" % labels[i])
            for d in detection_list:
                [x, y, w, h] = d.rect()
                center_x = math.floor(x + (w / 2))
                center_y = math.floor(y + (h / 2))
                print('x %d\ty %d' % (center_x, center_y))
                img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2)

        print(clock.fps(), "fps", end="\n\n")

-

Why does the program generated by Edge Impulse show this error when it runs? (posted in OpenMV Cam)

    # Edge Impulse - OpenMV Object Detection Example

    import sensor, image, time, os, tf, math, uos, gc

    sensor.reset()                         # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))       # Set 240x240 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    net = None
    labels = None
    min_confidence = 0.5

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    colors = [ # Add more colors if you are detecting more than 7 types of classes at once.
        (255,   0,   0),
        (  0, 255,   0),
        (255, 255,   0),
        (  0,   0, 255),
        (255,   0, 255),
        (  0, 255, 255),
        (255, 255, 255),
    ]

    clock = time.clock()
    while(True):
        clock.tick()

        img = sensor.snapshot()

        # detect() returns all objects found in the image (split out per class already)
        # we skip class index 0, as that is the background, and then draw circles of the center
        # of our objects
        for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):
            if (i == 0): continue # background class
            if (len(detection_list) == 0): continue # no detections for this class?

            print("********** %s **********" % labels[i])
            for d in detection_list:
                [x, y, w, h] = d.rect()
                center_x = math.floor(x + (w / 2))
                center_y = math.floor(y + (h / 2))
                print('x %d\ty %d' % (center_x, center_y))
                img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2)

        print(clock.fps(), "fps", end="\n\n")
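One plausible reason for "'tf model' object has no attribute 'detect'", not confirmed in these posts, is that the firmware on the camera is older than the one the Edge Impulse object-detection export targets, so the loaded model object offers classification but no `detect()`. A small sketch that checks for the method right after loading and fails with a clearer message; `check_detect_support` is a hypothetical helper, and the firmware explanation in its message is an assumption:

```python
# Sketch: fail early with a descriptive message if the model object
# returned by tf.load() has no detect() method.
# ASSUMPTION: the usual cause is an OpenMV firmware that predates the
# object-detection API used by the Edge Impulse example.

def check_detect_support(net):
    """Return True if `net` has detect(); otherwise raise AttributeError."""
    if not hasattr(net, "detect"):
        raise AttributeError(
            "model object has no detect(); the camera firmware may be too old "
            "for this Edge Impulse object-detection script, try updating it"
        )
    return True
```

On the camera this would be called once as `check_detect_support(net)` directly after the `tf.load(...)` block, before entering the `while` loop.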

-

Waste-sorting neural network model on OpenMV: how can OpenMV accurately locate the waste so that a robotic arm can pick it up? (posted in OpenMV Cam)

    # Edge Impulse - OpenMV Image Classification Example

    import sensor, image, time, os, tf, uos, gc, pyb
    from pyb import UART, LED

    sensor.reset()                         # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
    sensor.set_windowing((128, 128))       # Set 128x128 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    LED(1).on()
    LED(2).on()
    LED(3).on()

    uart = UART(3, 9600, timeout_char=1000)

    net = None
    labels = None

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        print(e)
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    clock = time.clock()
    while(True):
        clock.tick()

        img = sensor.snapshot()
        obj = net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5)

        # default settings just do one detection... change them to search the image...
        for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            # This combines the labels and confidence values into a list of tuples
            predictions_list = list(zip(labels, obj.output()))

            for i in range(len(predictions_list)):
                print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

When I run the program, the reported region is always the entire camera frame.
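With `min_scale=1.0`, `classify()` only scores the full window, so every result's `rect()` is the whole 128x128 frame, which matches what the post observes (the example's own comment says the default settings "just do one detection"). The rough model below lists the windows a multi-scale sliding search would score for given `min_scale`, `scale_mul` and overlap values. It is a simplification in plain Python, not OpenMV's actual implementation, and `sliding_windows` is a hypothetical name:

```python
# Simplified model of a multi-scale sliding-window search.
# ASSUMPTION: this only approximates how classify() uses min_scale,
# scale_mul and the overlaps; OpenMV's exact window placement may differ.

def sliding_windows(img_w, img_h, min_scale=1.0, scale_mul=0.8,
                    x_overlap=0.5, y_overlap=0.5):
    """Return the (x, y, w, h) windows that would be scored.

    Start with a window the size of the image, shrink it by scale_mul
    while the scale is still >= min_scale, and at each size slide it
    across the image with the given fractional overlap.
    """
    if not 0.0 < scale_mul < 1.0:
        raise ValueError("scale_mul must be between 0 and 1")
    if min_scale <= 0.0:
        raise ValueError("min_scale must be positive")
    windows = []
    scale = 1.0
    while scale >= min_scale:
        w = int(img_w * scale)
        h = int(img_h * scale)
        if w < 1 or h < 1:
            break
        step_x = max(1, int(w * (1.0 - x_overlap)))
        step_y = max(1, int(h * (1.0 - y_overlap)))
        y = 0
        while y + h <= img_h:
            x = 0
            while x + w <= img_w:
                windows.append((x, y, w, h))
                x += step_x
            y += step_y
        scale *= scale_mul
    return windows


if __name__ == "__main__":
    print(sliding_windows(128, 128))                       # post's settings
    print(len(sliding_windows(128, 128, min_scale=0.5)))   # smaller windows too
```

Lowering `min_scale` gives smaller windows and therefore a coarse position, but a classifier still only says which label fits each window. For positions to hand to a robotic arm, an object-detection model run through `net.detect()`, as in the Edge Impulse object-detection example above, returns rectangles per class instead.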

-

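Why is pan_error reported as not defined when the target color is not detected, while the program runs if the color is detected right at startup? (posted in OpenMV Cam)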

    import sensor, image, time
    from pyb import UART
    from pid import PID

    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QQVGA)
    sensor.set_vflip(True)     # vertical flip
    sensor.set_hmirror(True)   # horizontal mirror
    sensor.skip_frames(10)
    sensor.set_auto_whitebal(False)
    clock = time.clock()

    uart = UART(3, 115200, timeout_char=1000)

    yellow_threshold = [(17, 96, -17, 40, 88, 19),   # yellow
                        (37, 100, 3, 47, -31, 2)]    # red
    size_threshold = 2000

    def find_max(blobs):
        # Return the blob with the largest bounding-box area (w * h).
        max_size = 0
        max_blob = None
        for blob in blobs:
            if blob[2]*blob[3] > max_size:
                max_blob = blob
                max_size = blob[2]*blob[3]
        return max_blob

    while(True):
        clock.tick()
        img = sensor.snapshot()
        blobs = img.find_blobs(yellow_threshold)
        max_blob = None
        if blobs:
            max_blob = find_max(blobs)
            img.draw_rectangle(max_blob[0:4])
            img.draw_cross(max_blob[5], max_blob[6])
        if max_blob:
            pan_error = max_blob.cx()-img.width()/2
        if max_blob == None:
            print("旋转")        # "rotate"
            uart.write("$ZY!")
            time.sleep_ms(100)
        if pan_error > 0:        # the "not defined" error is reported here
            print("左移")        # "move left"
            uart.write("$ZY!")
            time.sleep_ms(100)

-
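In the pan_error script above, `pan_error` is only assigned inside `if max_blob:`, but `if pan_error > 0:` sits at loop level and runs on every frame. If no blob is found on the first frames, Python reaches that line before `pan_error` has ever been bound and raises a NameError; once a blob has been seen, the stale value from an earlier frame is silently reused instead. A minimal sketch that only reads `pan_error` on the path where it was just computed, written as plain Python so the logic can be tested off-camera. `choose_command` is hypothetical, and the command mapping is borrowed from the UART 3 script at the top of this list, so what each code means on the receiving microcontroller is an assumption:

```python
# Sketch: choose one UART command per frame without ever reading an
# undefined or stale pan_error.
# ASSUMPTION: command strings are copied from the scripts in this thread;
# the receiving MCU's interpretation of them is not specified.

def choose_command(blob_cx, img_width):
    """Return the command for one frame.

    blob_cx   -- x coordinate of the largest blob's centre, or None if no blob
    img_width -- frame width in pixels (160 for QQVGA)
    """
    if blob_cx is None:
        # No target this frame: pan_error is not needed at all.
        return "$ZY!"                      # rotate / search
    pan_error = blob_cx - img_width / 2    # defined on every path that uses it
    if pan_error < 0:
        return "$LY!"                      # target left of centre
    if pan_error > 0:
        return "$RY!"                      # target right of centre
    return "$ZC!"                          # centred: grab


if __name__ == "__main__":
    for cx in (None, 40, 80, 120):
        print(cx, choose_command(cx, 160))
```

On the camera this could be called as `cmd = choose_command(max_blob.cx() if max_blob else None, img.width())` followed by `uart.write(cmd)`, keeping the existing `max_blob = None` reset at the top of the loop.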

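Why is max_blob reported as not defined when no color is detected, while everything is fine if the color is detected when the program starts? (posted in OpenMV Cam)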

    # Blob Detection Example
    #
    # This example shows off how to use the find_blobs function to find color
    # blobs in the image. This example in particular looks for dark green objects.

    import sensor, image, time
    from pyb import UART

    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QQVGA)
    sensor.skip_frames(10)
    sensor.set_auto_whitebal(False)
    clock = time.clock()

    uart = UART(3, 19200, timeout_char=1000)

    green_threshold = (17, 96, -17, 40, 88, 19)
    size_threshold = 2000

    def find_max(blobs):
        # Return the blob with the largest bounding-box area (w * h).
        max_size = 0
        max_blob = None
        for blob in blobs:
            if blob[2]*blob[3] > max_size:
                max_blob = blob
                max_size = blob[2]*blob[3]
        return max_blob

    while(True):
        clock.tick()                 # Track elapsed milliseconds between snapshots().
        img = sensor.snapshot()      # Take a picture and return the image.

        blobs = img.find_blobs([green_threshold])
        if blobs:
            max_blob = find_max(blobs)
            img.draw_rectangle(max_blob[0:4])          # rect
            img.draw_cross(max_blob[5], max_blob[6])   # cx, cy
        if max_blob:                 # the "max_blob not defined" error is reported here
            pan_error = max_blob.cx()-img.width()/2
        if max_blob == None:
            print('旋转')            # "rotate"
            uart.write('$ZY!')
            time.sleep_ms(100)
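In the blob-detection script above, `max_blob` is only assigned inside `if blobs:`, so when the first frames contain no green blob the name does not exist yet and `if max_blob:` raises a NameError. After one detection the old blob is never cleared, so the `max_blob == None` branch can no longer run either. Resetting `max_blob = None` at the top of each loop iteration (as the pan_error script does) fixes both; since `find_max([])` already returns `None`, calling it unconditionally works too. The sketch below simulates that loop over a few frames in plain Python; the blobs are stand-in `(x, y, w, h)` tuples, not real OpenMV blob objects:

```python
# Sketch: simulate the main loop with max_blob assigned on every frame.
# ASSUMPTION: blobs are plain (x, y, w, h) tuples standing in for OpenMV
# blob objects; find_max uses the same w*h comparison as the post.

def find_max(blobs):
    """Return the blob with the largest w*h, or None for an empty list."""
    max_size = 0
    max_blob = None
    for blob in blobs:
        if blob[2] * blob[3] > max_size:
            max_blob = blob
            max_size = blob[2] * blob[3]
    return max_blob


def run_frames(frames):
    """Process one list of blobs per frame and record the action taken."""
    actions = []
    for blobs in frames:
        # Assigned on every frame, so it is never undefined and never stale.
        max_blob = find_max(blobs)
        if max_blob is None:
            actions.append("rotate")    # the post sends '$ZY!' here
        else:
            actions.append(max_blob)    # the post computes pan_error here
    return actions


if __name__ == "__main__":
    print(run_frames([[], [(10, 20, 30, 40), (0, 0, 5, 5)], []]))
```

The third frame yields "rotate" again, whereas the original loop would have kept the blob from the second frame.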