I just changed the code again and it no longer raises an error, but the serial terminal shows no output. What is wrong with this code?

Posts by csm6

-

RE: The code reports no error, but when run on a connected OpenMV it stops by itself. What does this serial terminal message mean? Posted in OpenMV Cam

-

RE: The code reports no error, but when run on a connected OpenMV it stops by itself. What does this serial terminal message mean? Posted in OpenMV Cam

    import sensor, image, time, os, tf, uos, gc, pyb, machine,math,tf
    from pyb import UART

    uart = UART(3, 9600)
    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QVGA)
    sensor.set_windowing((240, 240))
    sensor.skip_frames(time=2000)
    sensor.set_auto_gain(False)
    sensor.set_auto_whitebal(False)
    net = None
    labels = None
    ROI = (20,20,200,200)

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        print(e)
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    clock = time.clock()

    def compare():
        img1 = sensor.snapshot()
        img1.draw_rectangle(ROI)
        statistics = img1.get_statistics(roi = ROI)
        img2 = sensor.snapshot()
        img2.draw_rectangle(ROI)
        statistics2 = img2.get_statistics(roi = ROI)
        num1 = statistics.mode()
        num2 = statistics.mode()
        if(abs(num1-num2)>50):
            return 1
            #print("1")
        return 0

    def rubbish():
        clock.tick()
        pyb.delay(1000)
        img = sensor.snapshot()
        # default settings just do one detection... change them to search the image...
        for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            # This combines the labels and confidence values into a list of tuples
            predictions_list = list(zip(labels, obj.output()))
            for i in range(len(predictions_list)):
                print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))
        print(clock.fps(), "fps")
        if (sum(predictions_list[i][1])/len(predictions_list[i][1]))>0.8:
            print(prediction_list[i][1])
            uart.write(predictions_list[i][0])

    while(True):
        res = compare()
        if(res==1):
            print(OK)
            rubbish()

    while(True):
        if uart.any():
            notice = uart.readline().decode()
            if notice =='G':
                rubbish()

-
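One thing visible in the posted `compare()` is that `num1` and `num2` are both read from `statistics`, so their difference is always 0 and the function can never return 1; that alone would keep `rubbish()` from ever running. Also, `predictions_list[i][1]` is a single score, so calling `sum()` and `len()` on it would fail if that line were reached. The two checks can be sketched in plain Python as below. `frame_changed` and `pick_label` are hypothetical helper names rather than OpenMV APIs, the thresholds are simply the values from the post, and `pick_label` assumes a non-empty score list.

```python
def frame_changed(mode_before, mode_after, threshold=50):
    """True when the ROI mode value moved by more than `threshold`."""
    return abs(mode_before - mode_after) > threshold


def pick_label(labels, scores, min_confidence=0.8):
    """Return the best-scoring label, or None if it is not confident enough."""
    best_label, best_score = max(zip(labels, scores), key=lambda pair: pair[1])
    return best_label if best_score > min_confidence else None


# Reading both values from the same statistics object always gives a difference of 0.
print(frame_changed(120, 120))   # the same value twice
print(frame_changed(120, 40))    # values from two different frames
print(pick_label(["KHSLJ", "YHLJ"], [0.1, 0.9]))
```

On the camera, the two arguments of `frame_changed` would come from `statistics.mode()` and `statistics2.mode()`, and the scores from `obj.output()`.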

RE: The code reports no error, but when run on a connected OpenMV it stops by itself. What does this serial terminal message mean? Posted in OpenMV Cam

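Is something wrong with this code? It will not run, it cannot take pictures, and it does not send any values. It just stops by itself, and there is no response when it is connected to the Arduino either.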

-

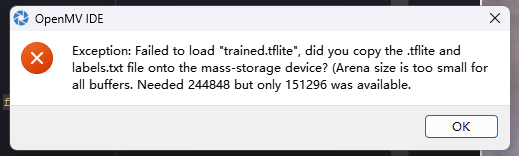

The code reports no error, but when run on a connected OpenMV it stops by itself. What does this serial terminal message mean? Posted in OpenMV Cam

    import sensor, image, time, os, tf, uos, gc, pyb, machine,math,tf
    from pyb import UART

    uart = UART(3, 9600)
    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QVGA)
    sensor.set_windowing((240, 240))
    sensor.skip_frames(time=2000)
    sensor.set_auto_gain(False)
    sensor.set_auto_whitebal(False)
    net = None
    labels = None
    ROI = (20,20,200,200)

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        print(e)
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    clock = time.clock()

    def compare():
        img1 = sensor.snapshot()
        img1.draw_rectangle(ROI)
        statistics = img1.get_statistics(roi = ROI)
        img2 = sensor.snapshot()
        img2.draw_rectangle(ROI)
        statistics2 = img2.get_statistics(roi = ROI)
        num1 = statistics.mode()
        num2 = statistics.mode()
        if(abs(num1-num2)>20):return 1
        else:return 0

    def rubbish():
        clock.tick()
        pyb.delay(1000)
        img = sensor.snapshot()
        # default settings just do one detection... change them to search the image...
        for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            # This combines the labels and confidence values into a list of tuples
            predictions_list = list(zip(labels, obj.output()))
            for i in range(len(predictions_list)):
                print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))
        print(clock.fps(), "fps")
        if (sum(predictions_list[i][1])/len(predictions_list[i][1]))>0.8:
            print(prediction_list[i][1])
            uart.write(predictions_list[i][0])
        else:
            rubbish()

    if(compare()==1):
        rubbish()

    while(True):
        if uart.any():
            notice = uart.readline().decode()
            if notice =='G':
                rubbish()
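In the final loop of this post, the text returned by `uart.readline()` keeps its line terminator if the sender appends one, so a comparison against exactly `'G'` may never match; `readline()` can also return `None` on a timeout, in which case `.decode()` would fail. One way to normalize the received data before comparing is sketched below. `parse_command` is a hypothetical helper, and the sketch assumes the Arduino sends `G` followed by a newline.

```python
def parse_command(raw):
    """Decode one UART line and drop surrounding whitespace; None stays None."""
    if raw is None:
        return None
    return raw.decode().strip()


# b"G\r\n" is what a sender that ends the line with CR LF would deliver.
print(parse_command(b"G\r\n") == "G")
print(parse_command(None))
```

On the camera this would be used as `notice = parse_command(uart.readline())` before the `notice == 'G'` check.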

-

RE: OpenMV connected to an ultrasonic module reports a pix format error. Posted in OpenMV Cam

@fmcq said in "OpenMV connected to an ultrasonic module reports a pix format error":

    import time,utime,pyb
    from pyb import Pin

    wave_echo_pin = Pin('P7', Pin.IN, Pin.PULL_NONE)
    wave_trig_pin = Pin('P8', Pin.OUT_PP, Pin.PULL_DOWN)

    wave_distance = 0
    tim_counter = 0
    flag_wave = 0

    # Start the ultrasonic measurement
    def wave_start():
        wave_trig_pin.value(1)
        utime.sleep_us(15)
        wave_trig_pin.value(0)

    # Ultrasonic distance calculation
    def wave_distance_calculation():
        # Global variable declaration
        global tim_counter
        # Frequency f is 0.2 MHz; high-level time t = count * 1/f
        wave_distance = tim_counter*5*0.017
        # Print the final measured distance (in cm)
        print('wave_distance',wave_distance)

    # Ultrasonic data processing
    def wave_distance_process():
        global flag_wave
        if(flag_wave == 0):
            wave_start()
        if(flag_wave == 2):
            wave_distance_calculation()
            flag_wave = 0

    # Configure the timer
    tim =pyb.Timer(1, prescaler=720, period=65535) # equivalent to freq=0.2M

    # External interrupt handler
    def callback(line):
        global flag_wave,tim_counter
        # Rising-edge handling
        if(wave_echo_pin.value()):
            tim.init(prescaler=720, period=65535)
            flag_wave = 1
        # Falling edge
        else:
            tim.deinit()
            tim_counter = tim.counter()
            tim.counter(0)
            extint.disable()
            flag_wave = 2

    # Interrupt configuration
    extint = pyb.ExtInt(wave_echo_pin, pyb.ExtInt.IRQ_RISING_FALLING, pyb.Pin.PULL_DOWN, callback)

    while(True):
        wave_distance_process()
        time.sleep(100)
        print('wave_distance',wave_distance)

I would like to ask: how does this code actually end up measuring distance with the ultrasonic sensor?
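As far as the quoted code shows, the measurement is a time-of-flight calculation: the trigger pulse starts a ping, the echo pin stays high while the sound travels out and back, the timer counts during that high period, and the count is converted to centimeters. The sketch below redoes only that last conversion in plain Python. The 5 µs tick follows from the 0.2 MHz figure in the code comments; a speed of sound of about 340 m/s is an assumption implied by the 0.017 factor, and `counts_to_cm` is a hypothetical name.

```python
SPEED_OF_SOUND_CM_PER_US = 0.034  # about 340 m/s, assumed from the 0.017 factor


def counts_to_cm(counts, tick_us=5.0):
    """Convert echo-high timer counts to a one-way distance in cm."""
    echo_time_us = counts * tick_us                        # how long the echo pin was high
    round_trip_cm = echo_time_us * SPEED_OF_SOUND_CM_PER_US
    return round_trip_cm / 2                               # the sound travels out and back


# 200 counts at 5 us per count is an echo time of 1000 us.
print(counts_to_cm(200))
```

Halving the round trip is where the `0.017` in `tim_counter*5*0.017` comes from: 0.034 cm/µs divided by 2.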

-

RE: "object with buffer protocol required": how do I fix this? Posted in OpenMV Cam

    import sensor, image, time, os, tf, uos, gc,pyb,machine
    from pyb import UART

    uart = UART(3,115200)
    green = (10,100,-50,15,-15,50)
    red = (0,100,-30,50,-30,30)
    blue = (10,100,-20,40,-10,40)
    orange = (10,80,-10,30,-20,35)
    brown = (0,80,-10,20,-20,30)
    yellow = (50,80,-10,10,-20,60)
    white = (10,100,-20,30,-30,20)
    gray = (20,80,-10,15,-10,25)

    sensor.reset() # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240)) # Set 240x240 window.
    sensor.skip_frames(time = 2000)
    sensor.set_auto_gain(False)
    sensor.set_auto_whitebal(False)
    clock = time.clock()

    while(True):
        clock.tick()
        def rubbish():
            net = None
            labels = None
            try:
                # load the model, alloc the model file on the heap if we have at least 64K free after loading
                net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64 * 1024)))
            except Exception as e:
                raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')
            try:
                labels = [line.rstrip('\n') for line in open("labels.txt")]
            except Exception as e:
                raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')
            image = sensor.snapshot()
            scores = net.classify(image)
            # default settings just do one detection... change them to search the image...
            for obj in net.classify(image, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
                image.draw_rectangle(obj.rect())
                # This combines the labels and confidence values into a list of tuples
                predictions_list = list(zip(labels, obj.output()))
                name = predictions_list[0][0]
                for i in range(len(predictions_list)):
                    max = 0.8
                    if predictions_list[i][1] > max:
                        max = predictions_list[i][1]
                        name = predictions_list[i][0]
                if name == 'KHSLJ':
                    name = 'k'
                elif name == 'YHLJ':
                    name = 'y'
                elif name == 'CYLJ':
                    name = 'c'
                elif name == 'QTLJ':
                    name = 'q'
                return name
        name = rubbish()
        img = sensor.snapshot()
        blobs1 = img.find_blobs([green])
        blobs2 = img.find_blobs([red])
        blobs3 = img.find_blobs([blue])
        blobs4 = img.find_blobs([orange])
        blobs5 = img.find_blobs([brown])
        blobs6 = img.find_blobs([yellow])
        blobs7 = img.find_blobs([white])
        blobs8 = img.find_blobs([gray])
        if blobs1:
            rubbish()
            uart.write(name)
            print(name)
        if blobs2:
            rubbish()
            uart.write(name)
        if blobs3:
            rubbish()
            uart.write(name)
        if blobs4:
            rubbish()
            uart.write(name)
        if blobs5:
            rubbish()
            uart.write(name)
        if blobs6:
            rubbish()
            uart.write(name)
        if blobs7:
            rubbish()
            uart.write(name)
        if blobs8:
            rubbish()
            uart.write(name)

-
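MicroPython raises `object with buffer protocol required` when a call that expects bytes-like data receives something else. Whether that is what happens in this thread cannot be confirmed from the post, but one candidate is `uart.write(name)` being called with `None`, which would occur on any path where `rubbish()` finishes without reaching `return name`. A minimal sketch of guarding the write in plain Python follows. `LABEL_CODES` and `to_payload` are hypothetical names; the label-to-letter mapping is the one from the post.

```python
# Mapping from model labels to the one-letter codes used in the post.
LABEL_CODES = {"KHSLJ": "k", "YHLJ": "y", "CYLJ": "c", "QTLJ": "q"}


def to_payload(label):
    """Return the one-byte code for a label, or None when there is nothing to send."""
    code = LABEL_CODES.get(label)
    return code.encode() if code is not None else None


for label in ("YHLJ", None, "unknown"):
    payload = to_payload(label)
    if payload is not None:          # only write when there really are bytes to send
        print("would send", payload)
```

On the camera the `print` would be replaced by `uart.write(payload)`. Unlike the posted code, this sketch drops labels that have no code instead of sending them unchanged, which is a design choice rather than a requirement.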

"object with buffer protocol required": how do I fix this? Posted in OpenMV Cam

    # Edge Impulse - OpenMV Image Classification Example
    import sensor, image, time, os, tf, uos, gc,pyb,machine
    from pyb import UART

    uart = UART(3,115200)
    green = (10,100,-50,15,-15,50)
    red = (0,100,-30,50,-30,30)
    blue = (10,100,-20,40,-10,40)
    orange = (10,80,-10,30,-20,35)
    brown = (0,80,-10,20,-20,30)
    yellow = (50,80,-10,10,-20,60)
    white = (10,100,-20,30,-30,20)
    gray = (20,80,-10,15,-10,25)

    sensor.reset() # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240)) # Set 240x240 window.
    sensor.skip_frames(time = 2000)
    sensor.set_auto_gain(False)
    sensor.set_auto_whitebal(False)
    clock = time.clock()
    name = 'c'

    while(True):
        clock.tick()
        def rubbish():
            net = None
            labels = None
            try:
                # load the model, alloc the model file on the heap if we have at least 64K free after loading
                net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64 * 1024)))
            except Exception as e:
                raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')
            try:
                labels = [line.rstrip('\n') for line in open("labels.txt")]
            except Exception as e:
                raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')
            image = sensor.snapshot()
            scores = net.classify(image)
            # default settings just do one detection... change them to search the image...
            for obj in net.classify(image, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
                image.draw_rectangle(obj.rect())
                # This combines the labels and confidence values into a list of tuples
                predictions_list = list(zip(labels, obj.output()))
                name = predictions_list[0][0]
                for i in range(len(predictions_list)):
                    max = 0.8
                    if predictions_list[i][1] > max:
                        max = predictions_list[i][1]
                        name = predictions_list[i][0]
                if name == 'KHSLJ':
                    name = 'k'
                elif name == 'YHLJ':
                    name = 'y'
                elif name == 'CYLJ':
                    name = 'c'
                elif name == 'QTLJ':
                    name = 'q'
                return name
        img = sensor.snapshot()
        blobs1 = img.find_blobs([green])
        blobs2 = img.find_blobs([red])
        blobs3 = img.find_blobs([blue])
        blobs4 = img.find_blobs([orange])
        blobs5 = img.find_blobs([brown])
        blobs6 = img.find_blobs([yellow])
        blobs7 = img.find_blobs([white])
        blobs8 = img.find_blobs([gray])
        if blobs1:
            rubbish()
            uart.write(name)
        if blobs2:
            rubbish()
            uart.write(name)
        if blobs3:
            rubbish()
            uart.write(name)
        if blobs4:
            rubbish()
            uart.write(name)
        if blobs5:
            rubbish()
            uart.write(name)
        if blobs6:
            rubbish()
            uart.write(name)
        if blobs7:
            rubbish()
            uart.write(name)
        if blobs8:
            rubbish()
            uart.write(name)