Why is the accuracy so low when I run the MNIST example code?

-

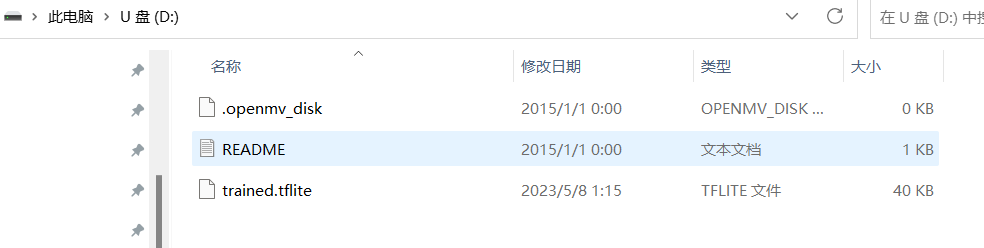

    # This code runs on OpenMV4 H7 or OpenMV4 H7 Plus
    import sensor, image, time, os, tf

    sensor.reset()                          # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.GRAYSCALE)  # Set pixel format to GRAYSCALE.
    sensor.set_framesize(sensor.QVGA)       # Set frame size to QVGA (320x240).
    sensor.set_windowing((240, 240))        # Set 240x240 window.
    sensor.skip_frames(time=2000)           # Let the camera adjust.

    clock = time.clock()
    while(True):
        clock.tick()
        # Binarize so that dark pixels (0-64) become white, like MNIST digits.
        img = sensor.snapshot().binary([(0,64)])
        for obj in tf.classify("trained.tflite", img, min_scale=1.0, scale_mul=0.5, x_overlap=0.0, y_overlap=0.0):
            output = obj.output()
            number = output.index(max(output))  # Index of the highest score.
            print(number)
        print(clock.fps(), "fps")

-

I think so too.

Waiting for a solution.

-

Why does the MNIST example work when I run it as is, but completely fail to recognize the digit inside the ROI once I set an ROI?
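For reference, here is a minimal sketch of one way to restrict classification to an ROI. It assumes your firmware's `tf.classify` accepts a `roi=(x, y, w, h)` keyword, as the OpenMV documentation describes. `centered_square_roi` is a hypothetical helper, not part of the example, and the camera-side lines are left as untested comments. A square ROI that fits tightly around the digit is probably the safest choice, since the crop is scaled to the model's input size.

```python
def centered_square_roi(frame_w, frame_h, side):
    """Return (x, y, w, h) for a square of `side` pixels centered in the frame."""
    side = max(1, min(side, frame_w, frame_h))  # Clamp the square to the frame.
    x = (frame_w - side) // 2
    y = (frame_h - side) // 2
    return (x, y, side, side)

# For the 240x240 window used in the first post:
roi = centered_square_roi(240, 240, 96)  # (72, 72, 96, 96)

# On the camera, inside the while loop (assumption, not tested here):
#   img = sensor.snapshot().binary([(0, 64)])
#   for obj in tf.classify("trained.tflite", img, roi=roi,
#                          min_scale=1.0, scale_mul=0.5,
#                          x_overlap=0.0, y_overlap=0.0):
#       output = obj.output()
#       print(output.index(max(output)))
```

If the ROI is much larger than the digit, the digit ends up small after scaling, which may be why nothing is recognized.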

-

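@gwum Also, the camera has to be very close to the digit, so there is no practical use case at all. I don't see the point of recognition that only works when the camera is almost touching the digit.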

-

@gwum https://github.com/SingTown/openmv_tensorflow_training_scripts/blob/main/mnist/openmv_mnist.ipynb

The code is here. You can modify the dataset yourself, however you like.
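As one example of such a change, and only as a sketch: if the problem is that digits seen from farther away look much smaller than the MNIST samples, you could add shrunken copies of each digit to the training set. `shrink_and_pad` below is a hypothetical helper in plain Python, not something taken from the notebook, and the scale values you would use are up to you.

```python
def shrink_and_pad(digit, scale, fill=0):
    """Shrink a square grayscale image (a list of rows) by `scale` in (0, 1]
    with nearest-neighbour sampling, then center it on a canvas of the
    original size filled with `fill`."""
    size = len(digit)
    new = max(1, int(size * scale))
    # Nearest-neighbour downscale to new x new pixels.
    small = [[digit[r * size // new][c * size // new] for c in range(new)]
             for r in range(new)]
    # Paste the small image into the middle of a blank canvas.
    off = (size - new) // 2
    canvas = [[fill] * size for _ in range(size)]
    for r in range(new):
        canvas[off + r][off:off + new] = small[r]
    return canvas

# Example: a 28x28 sample shrunk to half size, still 28x28 after padding.
sample = [[255] * 28 for _ in range(28)]
augmented = shrink_and_pad(sample, 0.5)
```

Training on a mix of original and shrunken digits is an assumption about what might help with distance, not a verified fix.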