After training a mask-detection model, the code generated by Edge Impulse gives very inaccurate mask/face recognition at run time

-

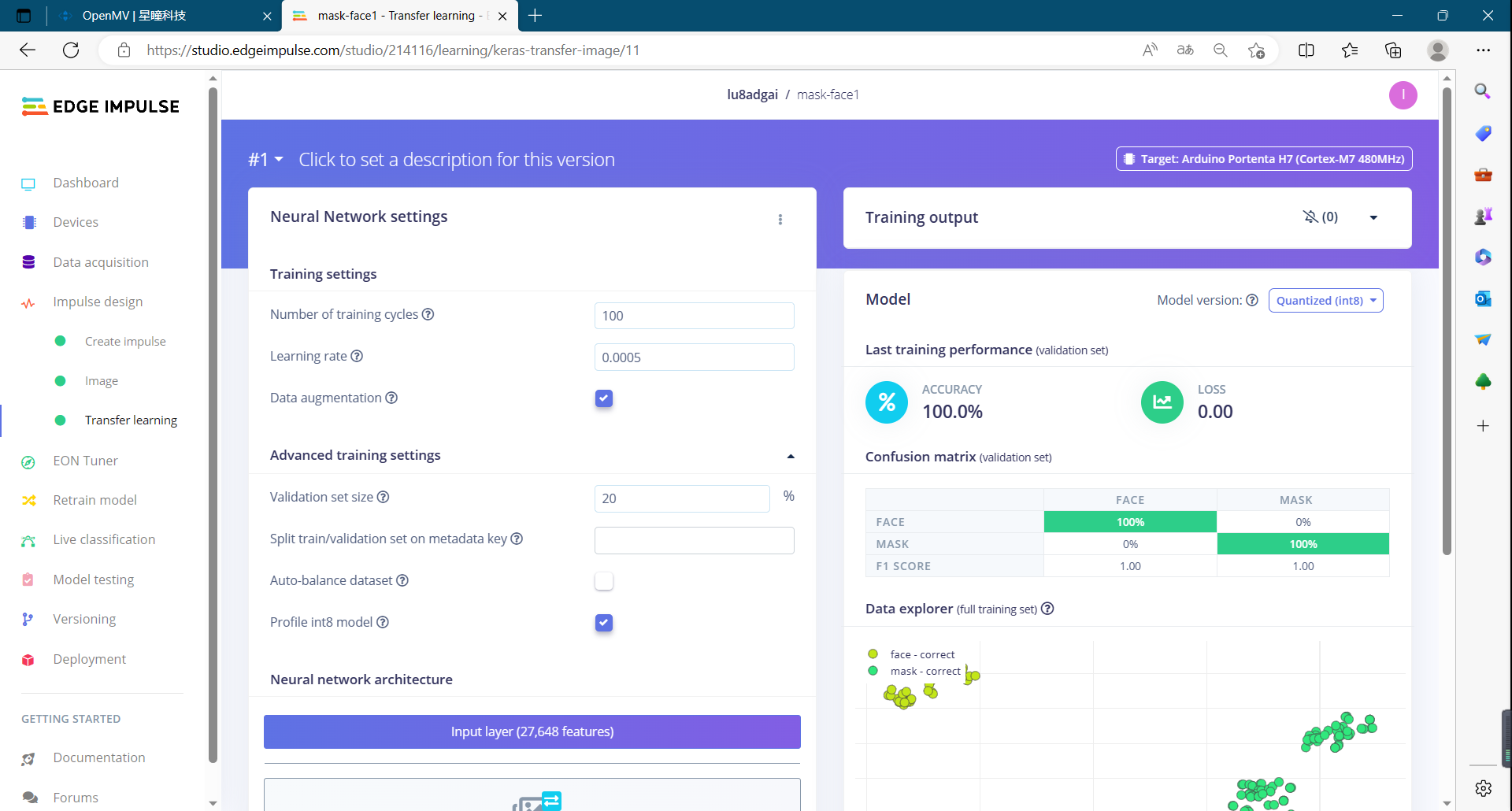

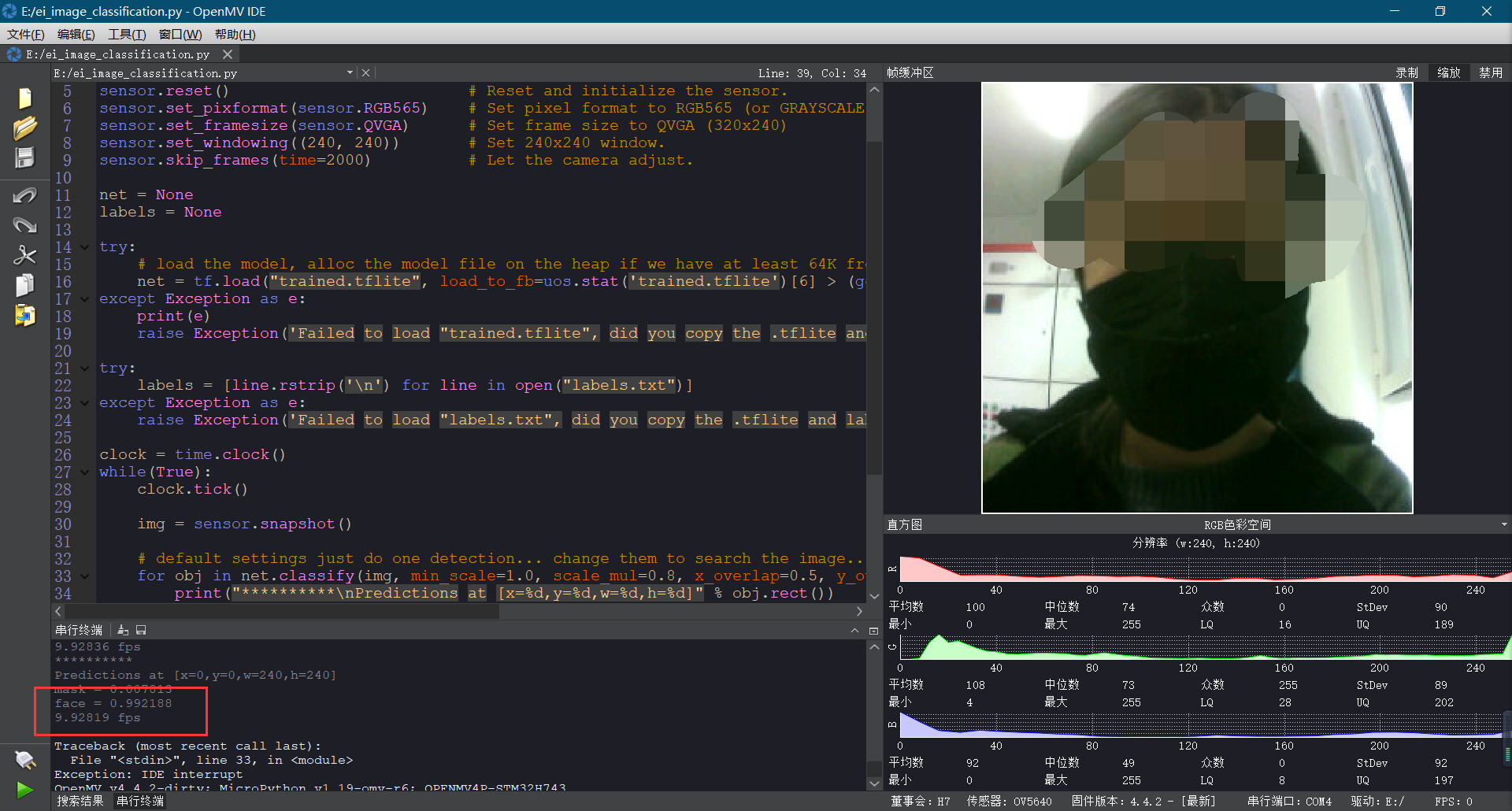

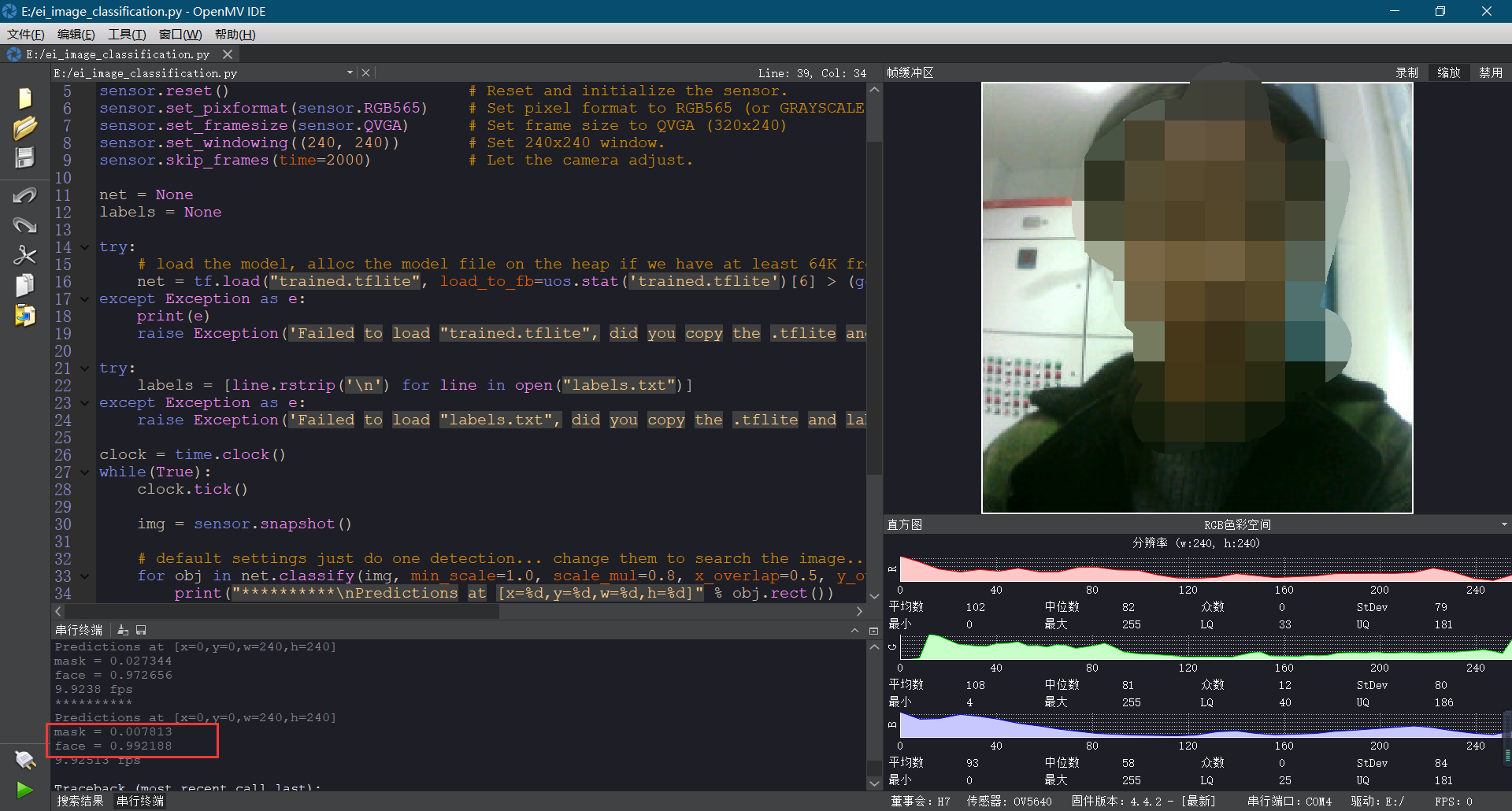

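When I trained the mask-detection model, both Transfer learning and Model testing showed 100% accuracy with no errors, but when I run the code for a real mask-detection test the results are much worse. What could be the reason?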

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, uos, gc

sensor.reset()                         # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))       # Set 240x240 window.
sensor.skip_frames(time=2000)          # Let the camera adjust.

net = None
labels = None

try:
    # load the model, alloc the model file on the heap if we have at least 64K free after loading
    net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
except Exception as e:
    print(e)
    raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:
    labels = [line.rstrip('\n') for line in open("labels.txt")]
except Exception as e:
    raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
```

-

Does what the camera actually sees match your training set? Check the people, the mask color, and the lighting.
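
One rough way to check the lighting part of that question is to compare the average brightness of a few training images with a few frames captured under the real test conditions. The sketch below is only an illustration in plain Python: the helper names `mean_brightness` and `brightness_gap` are made up for this example, images are assumed to be available as lists of `(r, g, b)` tuples, and the toy pixel values are not from the original post.

```python
# Hypothetical helpers (not part of OpenMV or Edge Impulse): compare the
# average brightness of training images with live camera frames.
# Each image is assumed to be a list of (r, g, b) tuples in the 0-255 range.

def mean_brightness(pixels):
    """Return the average luma (0-255) of a list of (r, g, b) pixels."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        # ITU-R BT.601 luma weights
        total += 0.299 * r + 0.587 * g + 0.114 * b
        count += 1
    if count == 0:
        raise ValueError("no pixels given")
    return total / count


def brightness_gap(training_images, live_images):
    """Mean brightness of the live frames minus that of the training images."""
    train = sum(mean_brightness(p) for p in training_images) / len(training_images)
    live = sum(mean_brightness(p) for p in live_images) / len(live_images)
    return live - train


# Toy data (assumed): bright training images, dim live frames.
training = [[(200, 200, 200)] * 4, [(180, 180, 180)] * 4]
live = [[(60, 60, 60)] * 4, [(80, 80, 80)] * 4]

gap = brightness_gap(training, live)
print("brightness gap: %.1f" % gap)
```

A large negative gap would suggest the live frames are much darker than the training data. In that case it is probably worth collecting extra training images with the same camera, distance, and lighting used in the real test, then retraining. The same idea could be applied to mask color by comparing per-channel averages instead of luma.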