与ros串口传输数据乱码

-

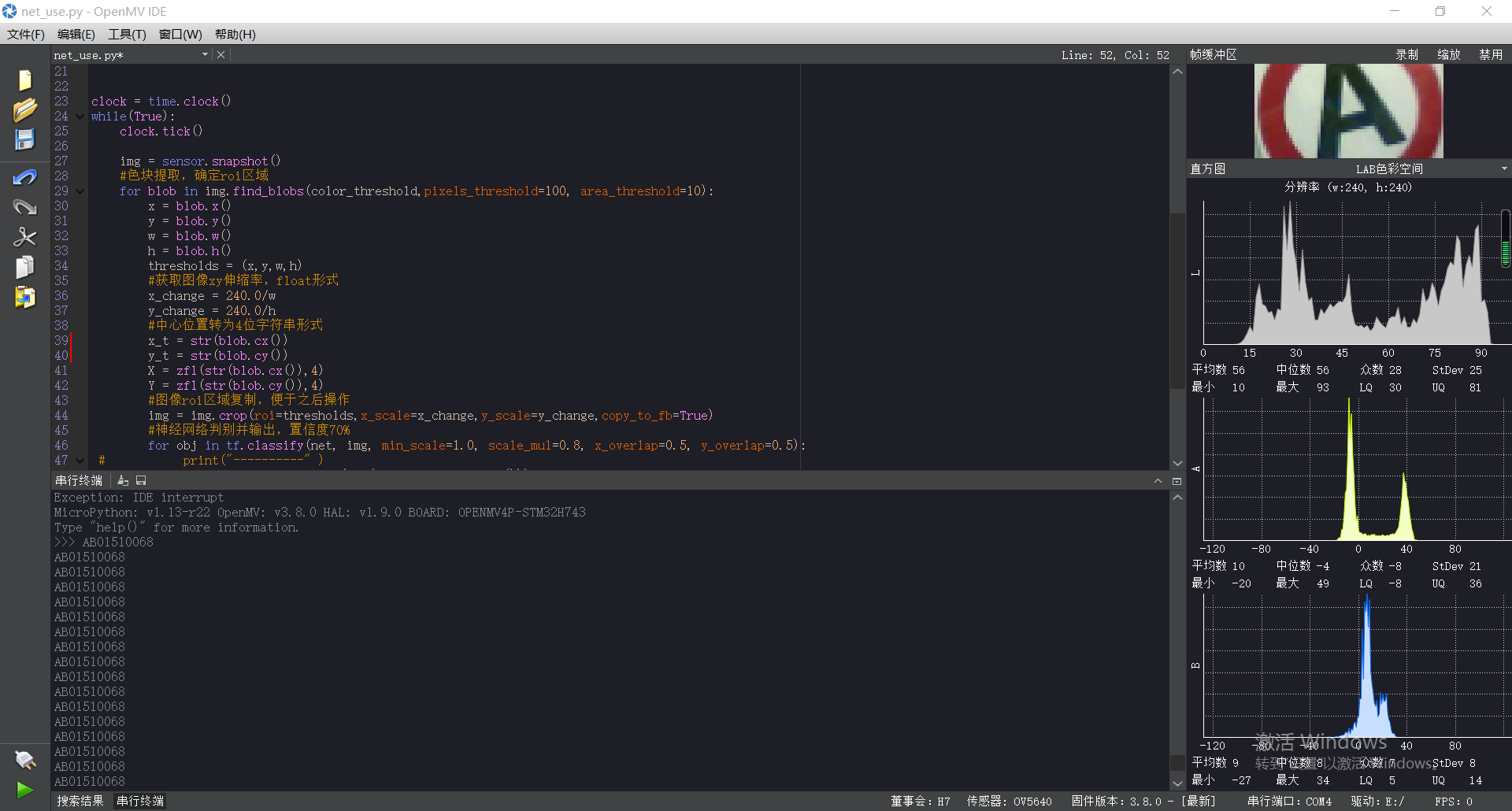

import sensor, image, time, os, tf from pyb import UART import json sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. net = "trained.tflite"#调用神经网络模型 labels = [line.rstrip('\n') for line in open("labels.txt")] color_threshold = [(0, 100, 12, 127, -9, 55)]#红色色块提取 uart = UART(3, 115200)#串口通信 def zfl(s, width): return '{:0>{w}}'.format(s, w=width) clock = time.clock() while(True): clock.tick() img = sensor.snapshot() #色块提取,确定roi区域 for blob in img.find_blobs(color_threshold,pixels_threshold=100, area_threshold=10): x = blob.x() y = blob.y() w = blob.w() h = blob.h() thresholds = (x,y,w,h) #获取图像xy伸缩率,float形式 x_change = 240.0/w y_change = 240.0/h #中心位置转为4位字符串形式 x_t = str(blob.cx()) y_t = str(blob.cy()) X = zfl(str(blob.cx()),4) Y = zfl(str(blob.cy()),4) #图像roi区域复制,便于之后操作 img = img.crop(roi=thresholds,x_scale=x_change,y_scale=y_change,copy_to_fb=True) #神经网络判别并输出,置信度70% for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5): # print("----------" ) predictions_list = list(zip(labels, obj.output())) for i in range(len(predictions_list)): if (predictions_list[i][1] > 0.6):#相似度达到60%以上认为是 output_str = 'AB'+X+Y # print("%s"%(predictions_list[i][0])) #output_str = 'A'+'B'+'A'+x_t+y_t uart.write(output_str) print(output_str)

print打印的都正常,通过TTL转USB串口传输之后就跟我想要的毫无干系了,出错的图片证明上传失败了,放在评论。。。。

-

传输失败的图片一致传不上来,一至报错,发不上来

传输的类似\0xf1\0x8b\0xfe1u10068AB01511\0xfc\0xdeaBx1511068\0xff\0xf9这样的,我都不知道是什么,求求了,卡了两天了,哥哥姐姐们快回复我吧

-

此回复已被删除!

-

你是用的串口助手吗?我不知道是不是ROS的协议不一样。先用串口助手测试一遍。

-

已经解决了,是地线连接不稳导致经常传输/0xff这种数据