The problem is in the line `for line in lines:`.

Why does my code raise the error in the title when it runs?

```python
import sensor, image, time, lcd, gc

sensor.reset()
sensor.set_pixformat(sensor.GRAYSCALE)
sensor.set_framesize(sensor.CIF)
sensor.set_windowing([0,0,136,200])
sensor.skip_frames(time = 2000)
clock = time.clock()

#============ Find the line closest to the center of the view ============
def find_theline(lines):
    min_dis = 100
    for line in lines:
        if (abs(line.x1() - img.width()/2) < min_dis):
            the_line = line
            min_dis = abs(line.x1() - img.width()/2)
    return the_line
#=========================================================================

#========================== Filter parameters ============================
kernel_size = 1
kernel = [-1, -1, -1,
          -1, +8, -1,
          -1, -1, -1]
thresholds = [(0, 36)]
#=========================================================================

while(True):
    clock.tick()
    img = sensor.snapshot().lens_corr(strength = 1.0,zoom = 1)
    img.morph(kernel_size, kernel)
    img.binary(thresholds)
    img.erode(1, threshold = 2)
    lines = img.get_regression([(0,36)], robust = True)
    if (lines):
        theline = find_theline(lines)
        if(theline.magnitude() > 8):
            rho_err = abs(theline.rho())-img.width()/2
            print("rho_error:",rho_err)
            img.draw_line(theline.line())
    gc.collect()
    print("fps:",clock.fps())
```
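A likely cause, assuming the title error is the usual "object isn't iterable" `TypeError`: in OpenMV, `img.get_regression()` returns a single `image.line` object (or `None`), not a list, so `for line in lines:` has nothing to iterate over. `img.find_lines()`, by contrast, returns a list. A second latent bug is that `the_line` is never assigned when no line lies within `min_dis`, which would raise `UnboundLocalError`. The sketch below handles both cases; `FakeLine` is a hypothetical stand-in for `image.line` so the logic can run off the camera.

```python
# Hypothetical stand-in for OpenMV's image.line, only so this sketch runs off-device.
class FakeLine:
    def __init__(self, x1):
        self._x1 = x1

    def x1(self):
        return self._x1


def find_theline(lines, center_x, min_dis=100):
    """Return the line whose x1() is closest to center_x, or None."""
    if lines is None:
        return None
    # get_regression() returns one line object, find_lines() returns a list:
    # wrap a single object so both can be iterated the same way.
    if not isinstance(lines, (list, tuple)):
        lines = [lines]
    the_line = None  # stays None when nothing is closer than min_dis
    for line in lines:
        dis = abs(line.x1() - center_x)
        if dis < min_dis:
            the_line = line
            min_dis = dis
    return the_line
```

On the camera this would be called as `theline = find_theline(img.get_regression([(0, 36)], robust=True), img.width() / 2)` followed by `if theline and theline.magnitude() > 8:`. If only the regression result is used, dropping the loop and working with that single line directly is simpler, which is roughly what OpenMV's linear-regression line-following example does.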

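Can OpenMV apply an image filter to a specific region only, for example dilation inside a given area, without using `sensor.set_windowing`? I can't crop the frame because the full image is shown on the LCD.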
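The idea can be sketched in plain Python: a binary dilation (3x3 for `size=1`) that reads neighbors from the whole image but only writes pixels inside a hypothetical `roi = (x, y, w, h)`. Images here are lists of rows holding 0 or 255. This only illustrates the logic and would be far too slow per frame on the camera. On the device itself, recent OpenMV firmware documents an optional `mask` image argument for `erode()`/`dilate()` that limits which pixels are modified; whether your firmware version supports it is an assumption to check against its documentation.

```python
def dilate_roi(img, roi, size=1):
    """Binary dilation applied only inside roi = (x, y, w, h).

    img is a list of rows of 0/255 values. Neighbors are read from the
    original image (even outside roi); only pixels inside roi are written.
    """
    x0, y0, w, h = roi
    rows, cols = len(img), len(img[0])
    out = [row[:] for row in img]  # copy so the input is left untouched
    for y in range(max(y0, 0), min(y0 + h, rows)):
        for x in range(max(x0, 0), min(x0 + w, cols)):
            # Set the pixel if any neighbor within the kernel window is set.
            hit = False
            for dy in range(-size, size + 1):
                for dx in range(-size, size + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < rows and 0 <= xx < cols and img[yy][xx]:
                        hit = True
            if hit:
                out[y][x] = 255
    return out
```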

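I'm using the official example; the only change is adding the `lcd.init()` and `lcd.display()` calls. But after the color thresholds are learned, the rectangles drawn on the image do not appear on the LCD.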

```python
# Automatic RGB565 Color Tracking Example
#
# This example shows off single color automatic RGB565 color tracking using the OpenMV Cam.

import sensor, image, time, lcd

print("Letting auto algorithms run. Don't put anything in front of the camera!")

sensor.reset()
sensor.set_pixformat(sensor.RGB565)
sensor.set_framesize(sensor.QVGA)
sensor.skip_frames(time = 2000)
sensor.set_auto_gain(False) # must be turned off for color tracking
sensor.set_auto_whitebal(False) # must be turned off for color tracking
clock = time.clock()
lcd.init()

# Capture the color thresholds for whatever was in the center of the image.
r = [(320//2)-(50//2), (240//2)-(50//2), 50, 50] # 50x50 center of QVGA.

print("Auto algorithms done. Hold the object you want to track in front of the camera in the box.")
print("MAKE SURE THE COLOR OF THE OBJECT YOU WANT TO TRACK IS FULLY ENCLOSED BY THE BOX!")
for i in range(60):
    img = sensor.snapshot()
    img.draw_rectangle(r)

print("Learning thresholds...")
threshold = [50, 50, 0, 0, 0, 0] # Middle L, A, B values.
for i in range(60):
    img = sensor.snapshot()
    hist = img.get_histogram(roi=r)
    lo = hist.get_percentile(0.01) # Get the CDF of the histogram at the 1% range (ADJUST AS NECESSARY)!
    hi = hist.get_percentile(0.99) # Get the CDF of the histogram at the 99% range (ADJUST AS NECESSARY)!
    # Average in percentile values.
    threshold[0] = (threshold[0] + lo.l_value()) // 2
    threshold[1] = (threshold[1] + hi.l_value()) // 2
    threshold[2] = (threshold[2] + lo.a_value()) // 2
    threshold[3] = (threshold[3] + hi.a_value()) // 2
    threshold[4] = (threshold[4] + lo.b_value()) // 2
    threshold[5] = (threshold[5] + hi.b_value()) // 2
    for blob in img.find_blobs([threshold], pixels_threshold=100, area_threshold=100, merge=True, margin=10):
        img.draw_rectangle(blob.rect())
        img.draw_cross(blob.cx(), blob.cy())
    img.draw_rectangle(r)

print("Thresholds learned...")
print("Tracking colors...")

while(True):
    clock.tick()
    img = sensor.snapshot()
    for blob in img.find_blobs([threshold], pixels_threshold=100, area_threshold=100, merge=True, margin=10):
        img.draw_rectangle(blob.rect())
        img.draw_cross(blob.cx(), blob.cy())
    lcd.display(sensor.snapshot())
    #print(clock.fps())
```
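Two observations on the example above. First, its last line sends a brand-new frame from `sensor.snapshot()` to the LCD rather than `img`, so anything drawn on `img` never reaches the display; `lcd.display(img)` is the likely fix (the two learning loops also never call `lcd.display()`). Second, the learning loop folds each frame's 1% and 99% percentile L, A, B values into `threshold` by averaging with the previous value, a running average that weights recent frames most. A plain-Python sketch of that update, with hypothetical `(l, a, b)` tuples standing in for the percentile objects' `l_value()`, `a_value()`, `b_value()`:

```python
def update_threshold(threshold, lo, hi):
    """Fold one frame's percentile values into the running LAB threshold.

    threshold is [L_min, L_max, A_min, A_max, B_min, B_max];
    lo and hi are (l, a, b) tuples for the 1% and 99% percentiles.
    """
    return [
        (threshold[0] + lo[0]) // 2,
        (threshold[1] + hi[0]) // 2,
        (threshold[2] + lo[1]) // 2,
        (threshold[3] + hi[1]) // 2,
        (threshold[4] + lo[2]) // 2,
        (threshold[5] + hi[2]) // 2,
    ]


t = [50, 50, 0, 0, 0, 0]  # same starting "middle" values as the example
for _ in range(60):
    t = update_threshold(t, (20, -10, 5), (80, 30, 40))
print(t)  # [20, 79, -10, 29, 4, 39]
```

Because `//` floors, values that approach their target from below settle one short (80 ends as 79 here), a bias of at most 1 that is harmless for color thresholds.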

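Also, my LCD is 240x280, so at QVGA resolution part of the image is missing on the LCD.

When I set the resolution to VGA and call `img.set_windowing(240,280)`, `image.draw_rectangle` starts raising the error in the title again. How should I change the code?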
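The box `r` in the example is hard-coded for the center of a 320x240 QVGA frame. If the frame size or window changes, computing the box from the current image size keeps it centered and inside the frame. Windowing itself is configured with `sensor.set_windowing()`; the OpenMV `image` documentation does not appear to list a `set_windowing()` method on image objects. A minimal sketch, where `centered_roi` is a hypothetical helper name:

```python
def centered_roi(frame_w, frame_h, box_w=50, box_h=50):
    """Return [x, y, w, h] for a box centered in a frame_w x frame_h image."""
    if box_w > frame_w or box_h > frame_h:
        raise ValueError("box is larger than the frame")
    return [(frame_w - box_w) // 2, (frame_h - box_h) // 2, box_w, box_h]


print(centered_roi(320, 240))  # [135, 95, 50, 50], same as r in the example
print(centered_roi(240, 280))  # [95, 115, 50, 50]
```

On the camera, `r = centered_roi(img.width(), img.height())` after the first snapshot would follow whatever frame size and window are active.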

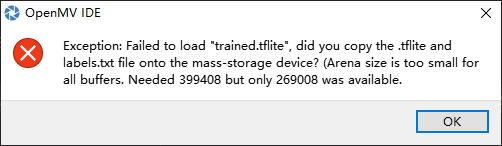

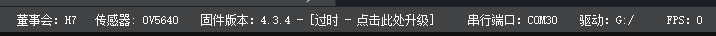

Firmware information:

Code:

```python
# Edge Impulse - OpenMV Object Detection Example

import sensor, image, time, os, tf, math, uos, gc

sensor.reset()                         # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))       # Set 240x240 window.
sensor.skip_frames(time=2000)          # Let the camera adjust.

net = None
labels = None
min_confidence = 0.5

try:
    # load the model, alloc the model file on the heap if we have at least 64K free after loading
    net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
except Exception as e:
    raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:
    labels = [line.rstrip('\n') for line in open("labels.txt")]
except Exception as e:
    raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

colors = [ # Add more colors if you are detecting more than 7 types of classes at once.
    (255,   0,   0),
    (  0, 255,   0),
    (255, 255,   0),
    (  0,   0, 255),
    (255,   0, 255),
    (  0, 255, 255),
    (255, 255, 255),
]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # detect() returns all objects found in the image (split out per class already)
    # we skip class index 0, as that is the background, and then draw circles of the center
    # of our objects

    for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):
        if (i == 0): continue # background class
        if (len(detection_list) == 0): continue # no detections for this class?

        print("********** %s **********" % labels[i])
        for d in detection_list:
            [x, y, w, h] = d.rect()
            center_x = math.floor(x + (w / 2))
            center_y = math.floor(y + (h / 2))
            print('x %d\ty %d' % (center_x, center_y))
            img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2)

    print(clock.fps(), "fps", end="\n\n")
```
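The detection loop does two small pieces of arithmetic: it converts `min_confidence` on a 0..1 scale into the 0..255 lower bound passed in `thresholds`, and it reduces each detection's `(x, y, w, h)` rectangle to a floored center point. Pulled out as plain functions (hypothetical names) so they can be checked off the camera:

```python
import math


def confidence_to_threshold(min_confidence):
    """Map a 0..1 confidence to the (low, high) 0..255 range used in thresholds."""
    return (math.ceil(min_confidence * 255), 255)


def rect_center(rect):
    """Floored center of an (x, y, w, h) rectangle, as computed in the example."""
    x, y, w, h = rect
    return math.floor(x + w / 2), math.floor(y + h / 2)


print(confidence_to_threshold(0.5))  # (128, 255)
print(rect_center((10, 20, 5, 8)))   # (12, 24)
```

With `min_confidence = 0.5`, only detections scoring at least 128 out of 255 are kept.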

This Python file, `labels.txt` and the model file are all stored on the SD card. Do I need to update the firmware, or is something else wrong?
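Because the model is read from the SD card, its size relative to the free heap may matter (whether it is related to the title error is an assumption). The `load_to_fb` expression in the example places the model in the frame buffer whenever loading it onto the heap would leave less than 64 KB free. The same decision as a plain function, with a hypothetical name; on the camera the inputs would be `uos.stat('trained.tflite')[6]` (file size in bytes) and `gc.mem_free()`:

```python
def should_load_to_fb(model_size, mem_free, reserve=64 * 1024):
    """True when loading the model onto the heap would leave less than
    `reserve` bytes free, so the example loads it into the frame buffer."""
    return model_size > mem_free - reserve


print(should_load_to_fb(100000, 150000))  # True: only 50000 bytes would remain
print(should_load_to_fb(50000, 150000))   # False: 100000 bytes would remain
```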