# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, uos, gc

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

net = None

labels = None

try:

# load the model, alloc the model file on the heap if we have at least 64K free after loading

net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))

except Exception as e:

print(e)

raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:

labels = [line.rstrip('\n') for line in open("labels.txt")]

except Exception as e:

raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

# This combines the labels and confidence values into a list of tuples

predictions_list = list(zip(labels, obj.output()))

for i in range(len(predictions_list)):

print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

print(clock.fps(), "fps")

weun

@weun

weun 发布的帖子

-

我有六种动作,edge impulse生成的程序运行识别的时候,串行终端显示只有动作1234的概率,没有56发布在 OpenMV Cam

-

脱机运行识别率太低,有六种图像通过edge impulse训练之后只能识别两种,图像2识别成图像3发布在 OpenMV Cam

# Edge Impulse - OpenMV Image Classification Example import sensor, image, time, os, tf, uos, gc from pyb import UART sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. net = None labels = None try: # load the model, alloc the model file on the heap if we have at least 64K free after loading net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024))) except Exception as e: print(e) raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') try: labels = [line.rstrip('\n') for line in open("labels.txt")] except Exception as e: raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') clock = time.clock() while(True): clock.tick() uart = UART(3,9600) img = sensor.snapshot() # default settings just do one detection... change them to search the image... for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5): print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect()) img.draw_rectangle(obj.rect()) # This combines the labels and confidence values into a list of tuples predictions_list = list(zip(labels, obj.output())) predictions_list=sorted(predictions_list,key=lambda x:x[1],reverse=True) highest_prediction=predictions_list[0] print("The highest prediction is:%s=%f"%(highest_prediction[0],highest_prediction[1])) uart.write(highest_prediction[0]) uart.write("\r\n") -

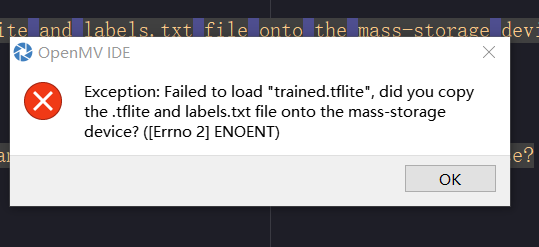

固件已经是最新版本,那三个文件也复制到SD卡,为什么?发布在 OpenMV Cam

Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, uos, gc

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.net = None

labels = Nonetry:

# load the model, alloc the model file on the heap if we have at least 64K free after loading

net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))

except Exception as e:

print(e)

raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')try:

labels = [line.rstrip('\n') for line in open("labels.txt")]

except Exception as e:

raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')clock = time.clock()

while(True):

clock.tick()img = sensor.snapshot() # default settings just do one detection... change them to search the image... for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5): print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect()) img.draw_rectangle(obj.rect()) # This combines the labels and confidence values into a list of tuples predictions_list = list(zip(labels, obj.output())) for i in range(len(predictions_list)): print("%s = %f" % (predictions_list[i][0], predictions_list[i][1])) print(clock.fps(), "fps")

-

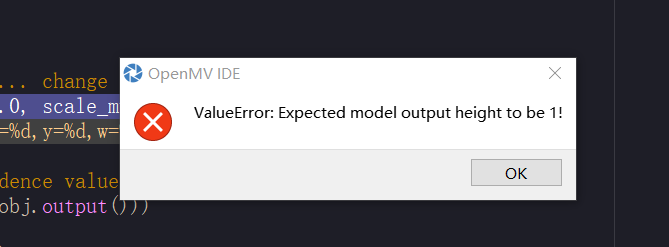

edge impuse训练模型程序运行出错发布在 OpenMV Cam

# Edge Impulse - OpenMV Image Classification Example import sensor, image, time, os, tf, uos, gc sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. net = None labels = None try: # load the model, alloc the model file on the heap if we have at least 64K free after loading net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024))) except Exception as e: print(e) raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') try: labels = [line.rstrip('\n') for line in open("labels.txt")] except Exception as e: raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') clock = time.clock() while(True): clock.tick() img = sensor.snapshot() # default settings just do one detection... change them to search the image... for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5): print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect()) img.draw_rectangle(obj.rect()) # This combines the labels and confidence values into a list of tuples predictions_list = list(zip(labels, obj.output())) for i in range(len(predictions_list)): print("%s = %f" % (predictions_list[i][0], predictions_list[i][1])) print(clock.fps(), "fps")

-

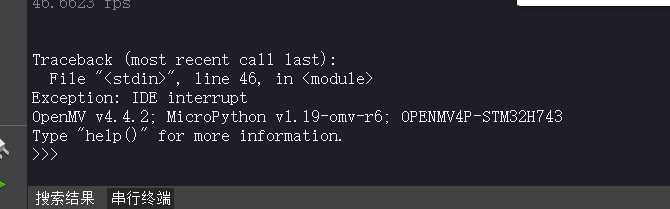

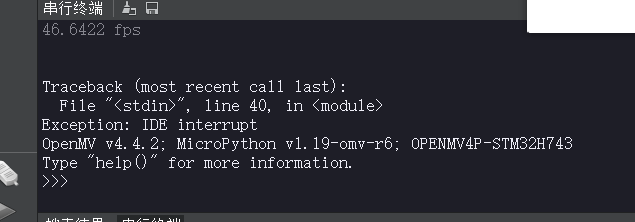

IDE异常中断是为什么?发布在 OpenMV Cam

Edge Impulse - OpenMV Object Detection Example

import sensor, image, time, os, tf, math, uos, gc

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.net = None

labels = None

min_confidence = 0.5try:

# load the model, alloc the model file on the heap if we have at least 64K free after loading

net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))

except Exception as e:

raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')try:

labels = [line.rstrip('\n') for line in open("labels.txt")]

except Exception as e:

raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')colors = [ # Add more colors if you are detecting more than 7 types of classes at once.

(255, 0, 0),

( 0, 255, 0),

(255, 255, 0),

( 0, 0, 255),

(255, 0, 255),

( 0, 255, 255),

(255, 255, 255),

]clock = time.clock()

while(True):

clock.tick()img = sensor.snapshot() # detect() returns all objects found in the image (splitted out per class already) # we skip class index 0, as that is the background, and then draw circles of the center # of our objects for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])): if (i == 0): continue # background class if (len(detection_list) == 0): continue # no detections for this class? print("********** %s **********" % labels[i]) for d in detection_list: [x, y, w, h] = d.rect() center_x = math.floor(x + (w / 2)) center_y = math.floor(y + (h / 2)) print('x %d\ty %d' % (center_x, center_y)) img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2) print(clock.fps(), "fps", end="\n\n")

-

摄像头识别一直没有结果发布在 OpenMV Cam

# Edge Impulse - OpenMV Object Detection Example import sensor, image, time, os, tf, math, uos, gc sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. net = None labels = None min_confidence = 0.5 try: # load the model, alloc the model file on the heap if we have at least 64K free after loading net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024))) except Exception as e: raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') try: labels = [line.rstrip('\n') for line in open("labels.txt")] except Exception as e: raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') colors = [ # Add more colors if you are detecting more than 7 types of classes at once. (255, 0, 0), ( 0, 255, 0), (255, 255, 0), ( 0, 0, 255), (255, 0, 255), ( 0, 255, 255), (255, 255, 255), ] clock = time.clock() while(True): clock.tick() img = sensor.snapshot() # detect() returns all objects found in the image (splitted out per class already) # we skip class index 0, as that is the background, and then draw circles of the center # of our objects for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])): if (i == 0): continue # background class if (len(detection_list) == 0): continue # no detections for this class? print("********** %s **********" % labels[i]) for d in detection_list: [x, y, w, h] = d.rect() center_x = math.floor(x + (w / 2)) center_y = math.floor(y + (h / 2)) print('x %d\ty %d' % (center_x, center_y)) img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2) print(clock.fps(), "fps", end="\n\n")