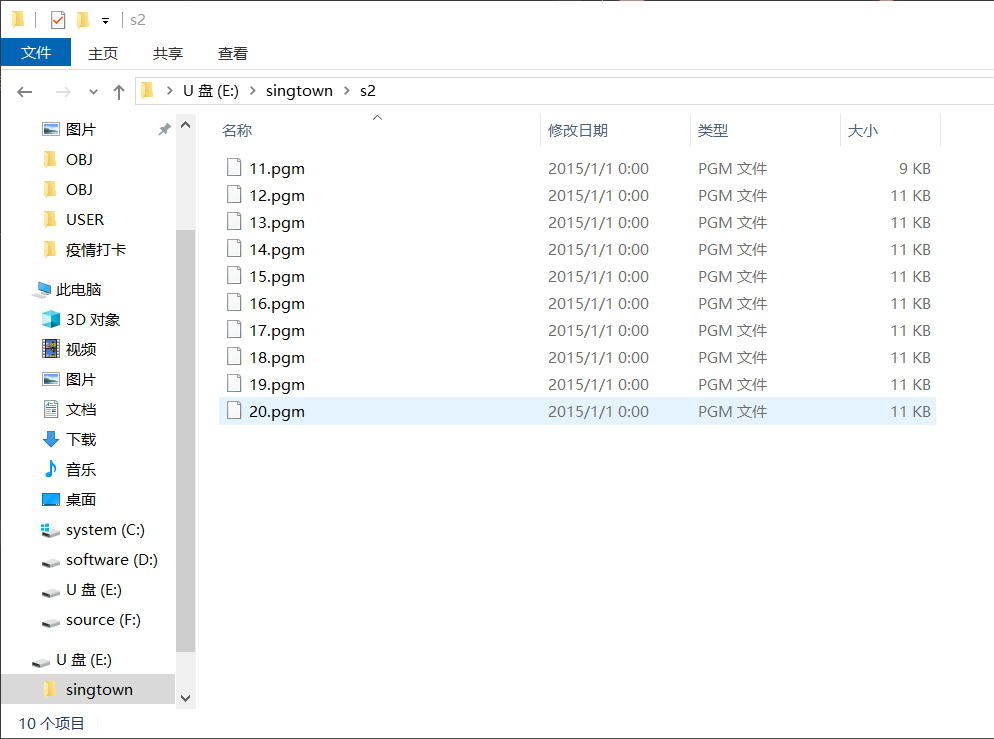

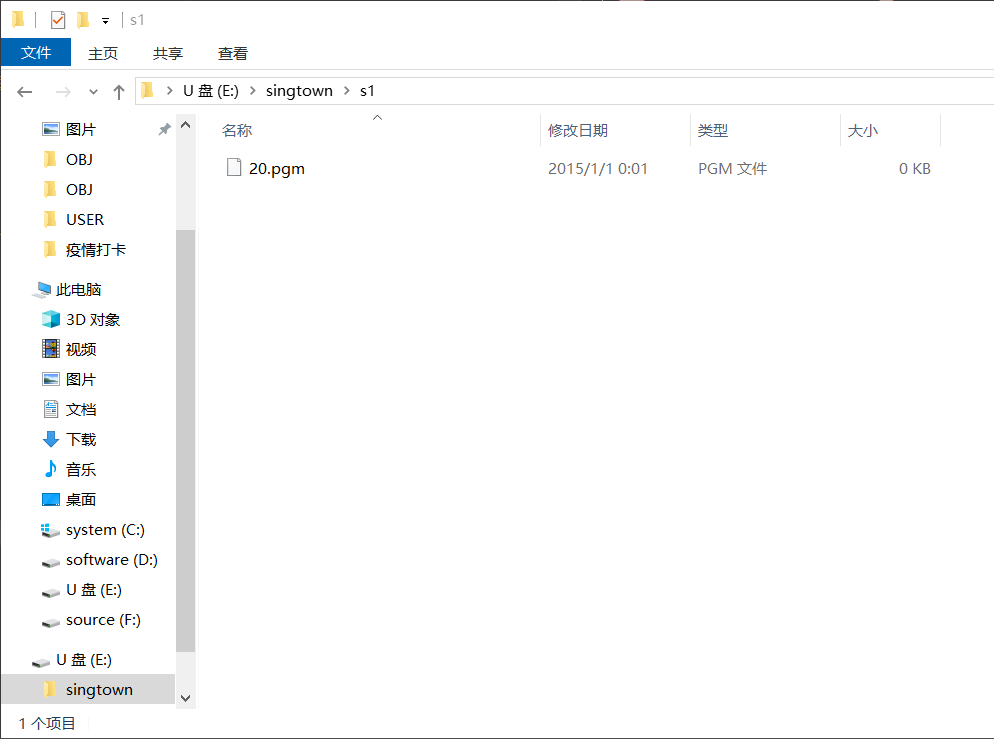

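Hello. After formatting the SD card and recreating the required folders, I tried again with 20 shots configured, but the first run still captured only 10 images. After I changed the index (num), the second run failed and the camera disconnected right after taking the first image.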


Posts by pt6u


Face-recognition sample capture fails, posted in OpenMV Cam

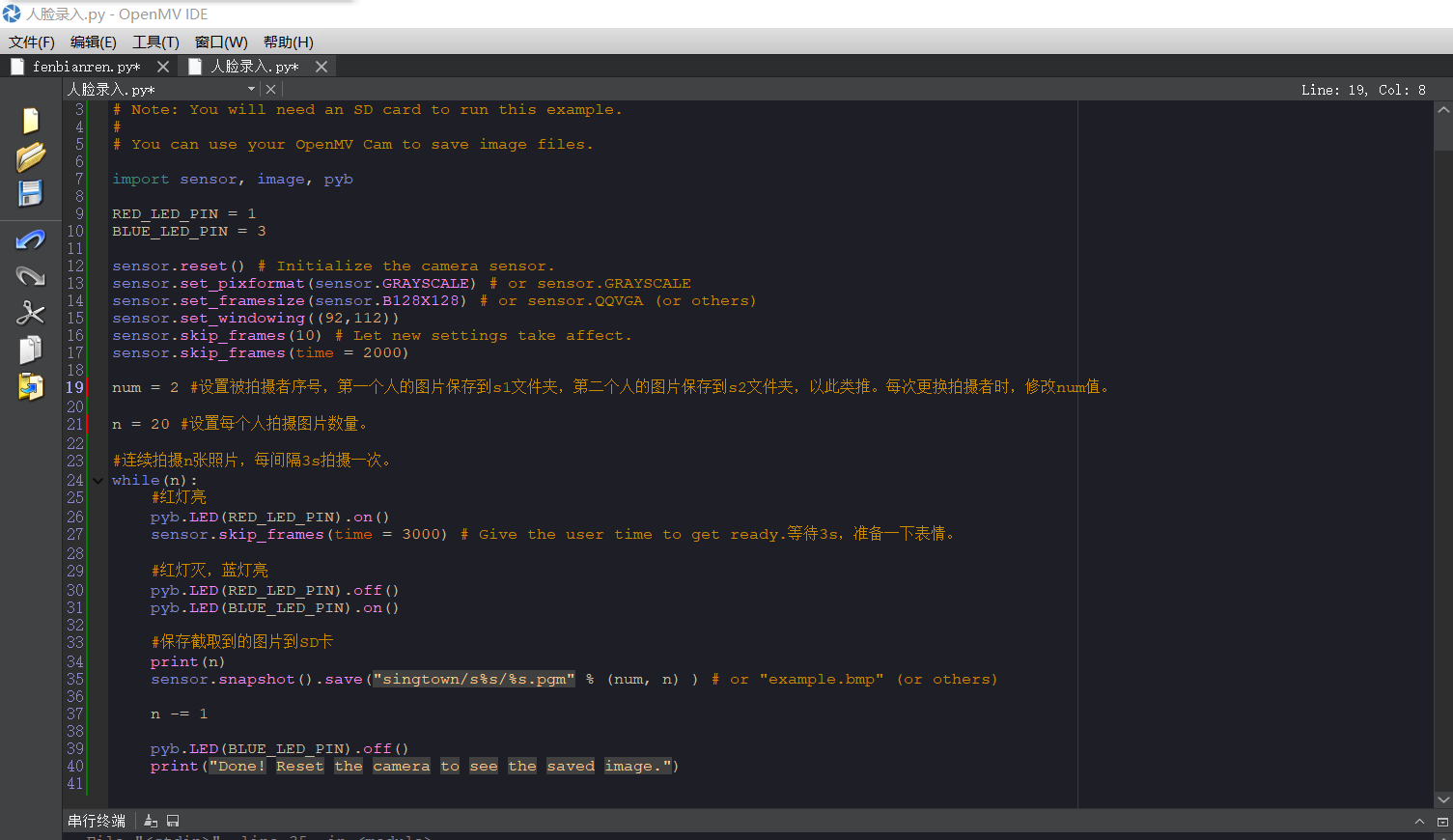

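The example code is set to capture 20 sample images, but the first run stopped after only 10. I then changed num to 1 and ran the code again: it captured only one image and then the board disconnected. The SD card is 16 GB and is detected normally.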

```python
# Snapshot Example
#
# Note: You will need an SD card to run this example.
#
# You can use your OpenMV Cam to save image files.

import sensor, image, pyb

RED_LED_PIN = 1
BLUE_LED_PIN = 3

sensor.reset()                          # Initialize the camera sensor.
sensor.set_pixformat(sensor.GRAYSCALE)  # or sensor.RGB565
sensor.set_framesize(sensor.B128X128)   # or sensor.QQVGA (or others)
sensor.set_windowing((92, 112))
sensor.skip_frames(10)                  # Let new settings take effect.
sensor.skip_frames(time = 2000)

num = 2  # Index of the person being photographed: person 1's images go to folder s1,
         # person 2's to folder s2, and so on. Change num each time the subject changes.
n = 20   # Number of images to capture per person.

# Capture n images in a row, one every 3 s.
while(n):
    # Red LED on
    pyb.LED(RED_LED_PIN).on()
    sensor.skip_frames(time = 3000)  # Give the user time to get ready (3 s to prepare an expression).

    # Red LED off, blue LED on
    pyb.LED(RED_LED_PIN).off()
    pyb.LED(BLUE_LED_PIN).on()

    # Save the captured image to the SD card
    print(n)
    sensor.snapshot().save("singtown/s%s/%s.pgm" % (num, n))  # or "example.bmp" (or others)

    n -= 1

    pyb.LED(BLUE_LED_PIN).off()
    print("Done! Reset the camera to see the saved image.")
```
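The loop counts `n` down, so with `n = 20` it writes `20.pgm` first and `1.pgm` last into `singtown/s<num>/`; a run that stops after 10 images should therefore leave `20.pgm` to `11.pgm` behind. The sketch below is plain CPython meant to run on a PC after copying the folder off the SD card, not OpenMV code. The helper names and the folder path are hypothetical; it only lists which files the loop was expected to write and which are missing.

```python
import os


def expected_names(n):
    """File names the capture loop writes, in order: n.pgm first, 1.pgm last."""
    return ["%s.pgm" % i for i in range(n, 0, -1)]


def expected_paths(num, n, root="singtown"):
    """Full SD-card paths, matching "singtown/s%s/%s.pgm" % (num, n) in the loop."""
    return ["%s/s%s/%s" % (root, num, name) for name in expected_names(n)]


def missing_captures(folder, n):
    """Expected names not present in `folder` (a local copy of singtown/s<num>).

    If the folder does not exist at all, every expected name is reported missing.
    """
    present = set(os.listdir(folder)) if os.path.isdir(folder) else set()
    return [name for name in expected_names(n) if name not in present]
```

For example, `missing_captures("/path/to/copy/singtown/s2", 20)` (hypothetical path) would return `["10.pgm", ..., "1.pgm"]` if the run stopped after 10 images, which helps tell whether the capture stopped early or the files were written but not read back.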

RE: Problem with simultaneous color and shape recognition, posted in OpenMV Cam

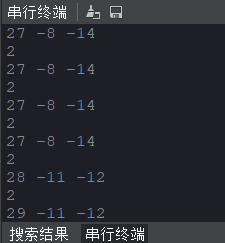

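At first I printed all of the statistics, but the other values were not useful, so I printed only statistics.l_mode(), statistics.a_mode() and statistics.b_mode(). These three values are exactly what the if statement tests. The printed values satisfy the if condition, yet the code under the if statement does not run.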


RE: Problem with simultaneous color and shape recognition, posted in OpenMV Cam

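Hello. I did not say the if and else branches run at the same time. I mean that when the values satisfy the if condition, the code block under if does not run; the block under else runs instead. Testing over the past few days, I found that in this line: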

```python
if 0<statistics.l_mode()<36 and -20<statistics.a_mode()<10 and -10<statistics.b_mode()<-128:
```

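only when I change every `and` to `or` is the condition met and the code under if executed. However, the color and shape recognition video tutorial uses `and` here.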
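In Python (and MicroPython) a chained comparison such as `-10 < b < -128` means `-10 < b and b < -128`. No number is both greater than -10 and less than -128, so that clause is always False, and with `and` the whole condition can never be met whatever the L and A clauses give. With `or`, the L and A clauses alone can make it True, which would explain why switching to `or` appeared to work. The plain-Python sketch below uses hypothetical function names; the corrected range `-128 < b < -10` is an assumption about the intended (negative-b, bluish) range, and the b range of roughly -128 to 127 is assumed for OpenMV's LAB values.

```python
def original_condition(l, a, b):
    # Condition exactly as posted; the b clause is -10 < b < -128 (an empty range)
    return 0 < l < 36 and -20 < a < 10 and -10 < b < -128


def corrected_condition(l, a, b):
    # Assumed intent: b between -128 and -10, lower bound written first
    return 0 < l < 36 and -20 < a < 10 and -128 < b < -10


def b_clause_ever_true(lo, hi):
    """True if any b in the assumed LAB b range [-128, 127] satisfies lo < b < hi."""
    return any(lo < b < hi for b in range(-128, 128))
```

On the camera, `l`, `a` and `b` would be `statistics.l_mode()`, `statistics.a_mode()` and `statistics.b_mode()`.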

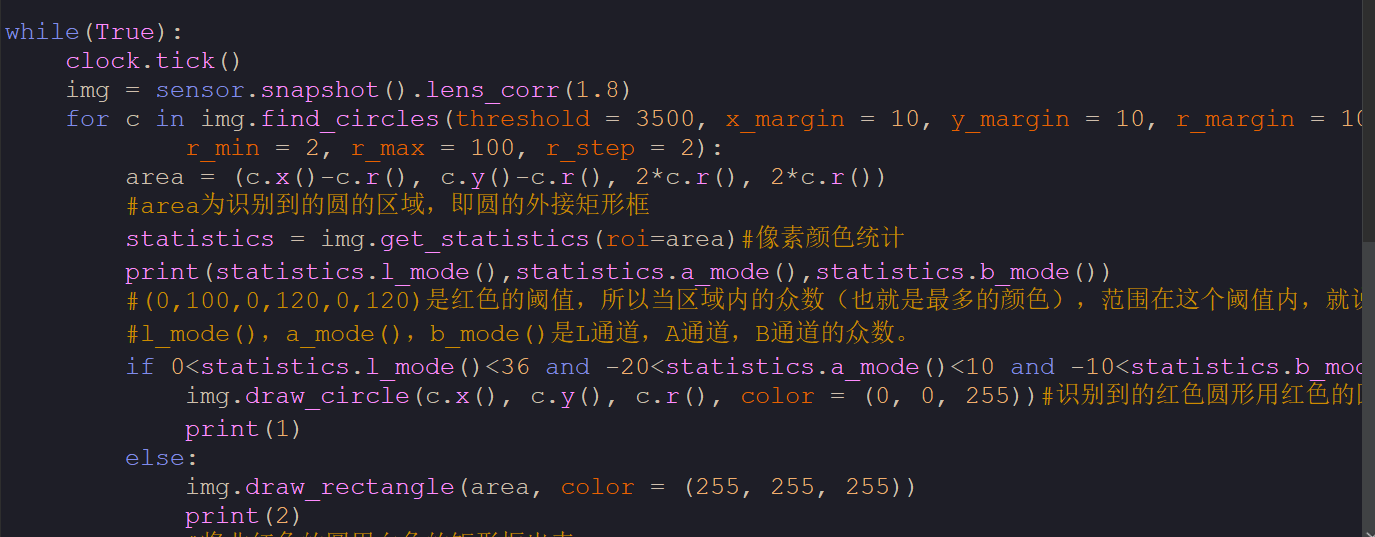

Problem with simultaneous color and shape recognition, posted in OpenMV Cam

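When recognizing color and shape at the same time, why does the program still execute the else branch even when the if condition is met? (I wrote the color range in the condition from the printed mode values, because the blue threshold from the multi-color example did not work.)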

```python
import sensor, image, time

sensor.reset()
sensor.set_pixformat(sensor.RGB565)
sensor.set_framesize(sensor.QQVGA)
sensor.skip_frames(time = 2000)
sensor.set_auto_gain(False)      # must be turned off for color tracking
sensor.set_auto_whitebal(False)  # must be turned off for color tracking
clock = time.clock()

while(True):
    clock.tick()
    img = sensor.snapshot().lens_corr(1.8)
    for c in img.find_circles(threshold = 3500, x_margin = 10, y_margin = 10, r_margin = 10,
                              r_min = 2, r_max = 100, r_step = 2):
        # area is the region of the detected circle, i.e. its bounding rectangle
        area = (c.x()-c.r(), c.y()-c.r(), 2*c.r(), 2*c.r())
        statistics = img.get_statistics(roi=area)  # pixel color statistics
        print(statistics.l_mode(), statistics.a_mode(), statistics.b_mode())
        # (0,100,0,120,0,120) is the red threshold, so if the mode of the region
        # (i.e. its most common color) falls within this threshold, the circle is red.
        # l_mode(), a_mode(), b_mode() are the modes of the L, A and B channels.
        if 0<statistics.l_mode()<36 and -20<statistics.a_mode()<10 and -10<statistics.b_mode()<-128:  # if the circle is red
            img.draw_circle(c.x(), c.y(), c.r(), color = (0, 0, 255))  # outline the detected red circle with a circle
            print(1)
        else:
            img.draw_rectangle(area, color = (255, 255, 255))  # outline non-red circles with a white rectangle
            print(2)
```
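The comments in this code already describe thresholds as six-value tuples (L min, L max, A min, A max, B min, B max). One way to make the mode check less error-prone is to keep the bounds in that tuple form and sort each pair before comparing, so a reversed pair such as `(-10, -128)` cannot silently become an empty range. This is a plain-Python sketch, not an OpenMV API; `lab_in_threshold` and `BLUE_THRESHOLD` are hypothetical names, the numbers come from the post, and the inclusive bounds are an assumption (the post uses strict `<`).

```python
# Hypothetical threshold built from the post's numbers, in the
# (L min, L max, A min, A max, B min, B max) layout; the B pair is
# deliberately left in the post's reversed order to show the sorting.
BLUE_THRESHOLD = (0, 36, -20, 10, -10, -128)


def lab_in_threshold(l, a, b, threshold):
    """Check an (L, A, B) triple against a six-value threshold tuple.

    Each (min, max) pair is sorted first, so (-10, -128) and (-128, -10)
    describe the same range. Bounds are inclusive here.
    """
    for i, value in enumerate((l, a, b)):
        lo, hi = sorted(threshold[2 * i:2 * i + 2])
        if not lo <= value <= hi:
            return False
    return True
```

In the loop above, the `if` line could then read `if lab_in_threshold(statistics.l_mode(), statistics.a_mode(), statistics.b_mode(), BLUE_THRESHOLD):`; the helper uses only `sorted`, `enumerate` and tuple slicing, which should also be available in MicroPython.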

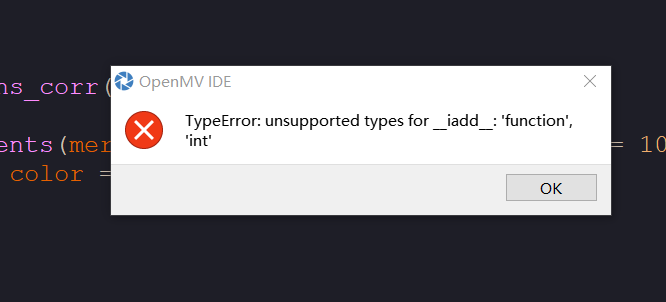

Problem with recognizing triangles by detecting line segments, posted in OpenMV Cam

In this code, `sum+=l.theta()` keeps raising an error. I tried converting l.theta() to other types, but it still fails. How can I fix this?

```python
import sensor, image, time, math

sensor.reset()
sensor.set_pixformat(sensor.RGB565)  # grayscale is faster
sensor.set_framesize(sensor.QQVGA)
sensor.skip_frames(time = 2000)
clock = time.clock()

# All line segments have `x1()`, `y1()`, `x2()`, and `y2()` methods to get their endpoints,
# and a `line()` method to get all four values above as a tuple, usable with `draw_line()`.

while(True):
    clock.tick()
    img = sensor.snapshot()
    if enable_lens_corr: img.lens_corr(1.8)  # for 2.8mm lens...
    for l in img.find_line_segments(merge_distance = 10, max_theta_diff = 10):
        img.draw_line(l.line(), color = (255, 0, 0))
        sum+=l.theta()
    sum-=180
    if sum<110 and sum>1:
        print('triangle')
        num_segment=1
```
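In the posted code `sum` is never assigned before `sum+=l.theta()`, so the name most likely resolves to Python's built-in `sum()` function, and adding a number to a function raises a `TypeError` however `l.theta()` is converted. (`enable_lens_corr` is also never defined in the snippet, which would raise a `NameError` earlier unless it is set elsewhere.) Below is a minimal plain-Python sketch of the fix, assuming the goal is the post's own per-frame check: reset an accumulator with a different name at the start of every frame. `triangle_check` and its list argument are hypothetical stand-ins for the loop over `img.find_line_segments(...)`, and the 1 to 110 bounds are copied from the post, not a verified triangle test.

```python
def builtin_sum_error():
    """Reproduce the failure: `sum` was never assigned, so it names the built-in."""
    try:
        exec("sum += 5", {})  # module-level code with no variable called sum
    except TypeError as exc:
        return str(exc)
    return None


def triangle_check(thetas):
    """Apply the post's per-frame check to a list of segment angles.

    `thetas` stands in for [l.theta() for l in img.find_line_segments(...)].
    """
    theta_sum = 0  # reset every frame; this name does not shadow built-in sum()
    for theta in thetas:
        theta_sum += theta
    theta_sum -= 180
    return 1 < theta_sum < 110  # same bounds as the original post
```

On the camera this corresponds to adding `enable_lens_corr = False` near the top, `theta_sum = 0` just before the `for` loop, and using `theta_sum` in place of `sum` in the loop body and the `if`.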