你用‘time_ms’试一下

N

nhmg

@nhmg

0

声望

10

楼层

1598

资料浏览

0

粉丝

1

关注

nhmg 发布的帖子

-

追小球的小车在走到小球面前后如何让它执行追其他颜色的小球发布在 OpenMV Cam

# Blob Detection Example # # This example shows off how to use the find_blobs function to find color # blobs in the image. This example in particular looks for dark green objects. import sensor, image, time import car from pid import PID # You may need to tweak the above settings for tracking green things... # Select an area in the Framebuffer to copy the color settings. sensor.reset() # Initialize the camera sensor. sensor.set_pixformat(sensor.RGB565) # use RGB565. sensor.set_framesize(sensor.QQVGA) # use QQVGA for speed. sensor.skip_frames(10) # Let new settings take affect. sensor.set_auto_whitebal(False) # turn this off. clock = time.clock() # Tracks FPS. # For color tracking to work really well you should ideally be in a very, very, # very, controlled enviroment where the lighting is constant... green_threshold = (76, 96, -110, -30, 8, 66) size_threshold = 2000 x_pid = PID(p=0.5, i=1, imax=100) h_pid = PID(p=0.05, i=0.1, imax=50) def find_max(blobs): max_size=0 for blob in blobs: if blob[2]*blob[3] > max_size: max_blob=blob max_size = blob[2]*blob[3] return max_blob while(True): clock.tick() # Track elapsed milliseconds between snapshots(). img = sensor.snapshot() # Take a picture and return the image. blobs = img.find_blobs([green_threshold]) if blobs: max_blob = find_max(blobs) x_error = max_blob[5]-img.width()/2 h_error = max_blob[2]*max_blob[3]-size_threshold print("x error: ", x_error) ''' for b in blobs: # Draw a rect around the blob. img.draw_rectangle(b[0:4]) # rect img.draw_cross(b[5], b[6]) # cx, cy ''' img.draw_rectangle(max_blob[0:4]) # rect img.draw_cross(max_blob[5], max_blob[6]) # cx, cy x_output=x_pid.get_pid(x_error,1) h_output=h_pid.get_pid(h_error,1) print("h_output",h_output) car.run(-h_output-x_output,-h_output+x_output) else: car.run(18,-18) -

RE: 这种降低像素的情况怎么解决呀,(降到最低也不行)发布在 OpenMV Cam

用的是openmv-h7,代码是垃圾识别的代码

请在这里粘贴代码 ```# Edge Impulse - OpenMV Image Classification Example import sensor, image, time, os, tf sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. net = "trained.tflite" labels = [line.rstrip('\n') for line in open("labels.txt")] clock = time.clock() while(True): clock.tick() img = sensor.snapshot() # default settings just do one detection... change them to search the image... for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5): print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect()) img.draw_rectangle(obj.rect()) # This combines the labels and confidence values into a list of tuples predictions_list = list(zip(labels, obj.output())) for i in range(len(predictions_list)): print("%s = %f" % (predictions_list[i][0], predictions_list[i][1])) print(clock.fps(), "fps") -

这种降低像素的情况怎么解决呀,(降到最低也不行)发布在 OpenMV Cam

Memory Error:Out of fast FrameBuffer Stack Memory!Please reduce there solution of the image you are running this algorithm on toy pass this issue.

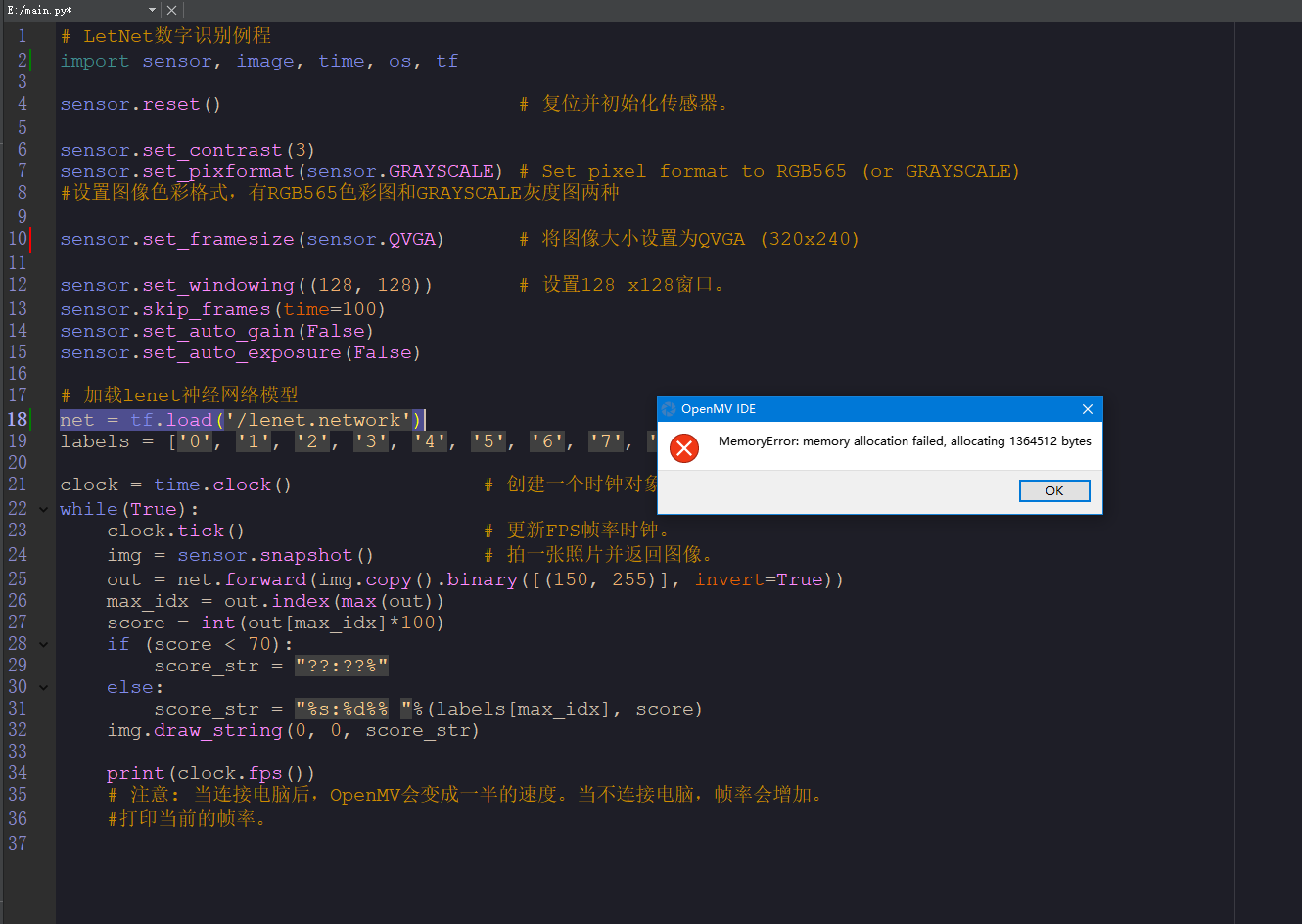

请问,这种错误要怎么解决呀,用的是H7-Plus,代码是数字识别的代码

请问,这种错误要怎么解决呀,用的是H7-Plus,代码是数字识别的代码