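@kidswong999 I don't really understand how I should write it. The main problem is that with my current code I can't even open the web page. Why is that?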

Posts by k5r1

-

RE: I want to send the deep-learning detection results to my computer through the WiFi module (posted in OpenMV Cam)

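@kidswong999 I suspect I haven't combined the model inference and the WiFi transfer properly. There is another problem: after the program has been running for only a few minutes the camera stops updating, as if it had frozen, but no error is reported.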

-

I want to send the deep-learning detection results to my computer through the WiFi module (posted in OpenMV Cam)

    import sensor, image, time, os, tf, uos, gc, network, usocket, sys, json

    SSID ='OPENMV_AP'     # Network SSID
    KEY  ='1234567890'    # Network key (must be 10 chars)
    HOST = ''             # Use first available interface
    PORT = 8080           # Arbitrary non-privileged port

    # Reset sensor
    sensor.reset()
    # Set sensor settings
    sensor.set_contrast(1)
    sensor.set_brightness(1)
    sensor.set_saturation(1)
    sensor.set_gainceiling(16)
    sensor.set_framesize(sensor.QQVGA)
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_windowing((240, 240))       # Set 240x240 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    net = None
    labels = None

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        print(e)
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    clock = time.clock()

    # Init wlan module in AP mode.
    wlan = network.WINC(mode=network.WINC.MODE_AP)
    wlan.start_ap(SSID, key=KEY, security=wlan.WEP, channel=2)

    # You can block waiting for client to connect
    #print(wlan.wait_for_sta(10000))

    def response(s):
        print ('Waiting for connections..')
        client, addr = s.accept()
        # set client socket timeout to 2s
        client.settimeout(2.0)
        print ('Connected to ' + addr[0] + ':' + str(addr[1]))

        # Read request from client
        data = client.recv(1024)
        # Should parse client request here

        # Send multipart header
        client.send("HTTP/1.1 200 OK\r\n" \
                    "Server: OpenMV\r\n" \
                    "Content-Type: application/json\r\n" \
                    "Cache-Control: no-cache\r\n" \
                    "Pragma: no-cache\r\n\r\n")

        # FPS clock
        clock = time.clock()

        # Start streaming images
        # NOTE: Disable IDE preview to increase streaming FPS.
        clock.tick()
        img = sensor.snapshot()

        # default settings just do one detection... change them to search the image...
        for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            #print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            # This combines the labels and confidence values into a list of tuples
            predictions_list = list(zip(labels, obj.output()))

            for i in range(len(predictions_list)):
                #print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))
                a=obj.output()[0]
                b=obj.output()[1]
                c=obj.output()[2]
                d=obj.output()[3]
                f=obj.output()[4]
                g=obj.output()[5]
                if a>0.95:
                    h=1
                    print(h)
                    client.send(json.dumps(h))
        client.close()

    while (True):
        # Create server socket
        s = usocket.socket(usocket.AF_INET, usocket.SOCK_STREAM)
        try:
            # Bind and listen
            s.bind([HOST, PORT])
            s.listen(5)

            # Set server socket timeout
            # NOTE: Due to a WINC FW bug, the server socket must be closed and reopened if
            # the client disconnects. Use a timeout here to close and re-create the socket.
            s.settimeout(3)
            response(s)
        except OSError as e:
            s.close()
            print("socket error: ", e)
            #sys.print_exception(e)
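One possible reason the page never loads: in the script above the JSON body appears to be sent only when `a > 0.95`, so on most requests the browser would receive headers and then a closed connection with no body. A minimal sketch of an alternative is to build one complete response per request, whether or not anything was detected. The helper names `build_json_response` and `detection_payload`, the `Content-Length` and `Connection` headers, and the payload layout are assumptions for illustration, not part of the original script, and this has only been checked as plain Python, not on the camera.

```python
import json

def build_json_response(payload):
    """Return a complete HTTP/1.1 response (bytes) whose body is `payload` as JSON."""
    body = json.dumps(payload).encode("utf-8")
    header = (
        "HTTP/1.1 200 OK\r\n"
        "Server: OpenMV\r\n"
        "Content-Type: application/json\r\n"
        "Content-Length: %d\r\n"
        "Connection: close\r\n"
        "\r\n" % len(body)
    )
    return header.encode("utf-8") + body

def detection_payload(labels, scores, threshold=0.95):
    """Pick the best-scoring label and report whether it passes the threshold."""
    best = 0
    for i in range(1, len(scores)):
        if scores[i] > scores[best]:
            best = i
    return {
        "label": labels[best],
        "score": scores[best],
        "detected": scores[best] > threshold,
    }

# Example with made-up scores; on the camera these would come from obj.output().
payload = detection_payload(["cat", "dog"], [0.10, 0.97])
response_bytes = build_json_response(payload)
```

On the camera, the idea would be to call `client.send(build_json_response(payload))` exactly once per accepted connection, after classification, and then close the client socket.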

-

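With template matching, when I recognize digits the printed result includes the JPG file name, which I can't pass to the STM32. I want it to print just 1, 2, so I changed the path format, and now it raises an error (posted in OpenMV Cam)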

    # Template Matching Example - Normalized Cross Correlation (NCC)
    #
    # This example shows how to use the NCC feature of the OpenMV Cam to match
    # small image patches to parts of an image... except in extremely controlled
    # environments, NCC is not all that useful.
    #
    # WARNING: NCC support needs to be reworked! As of now this feature needs a lot
    # of work to become useful. This script remains to show that the functionality
    # exists, but in its current state it is inadequate.

    import time, sensor, image
    from image import SEARCH_EX, SEARCH_DS
    # Import SEARCH_EX and SEARCH_DS from the image module. "from ... import" brings in
    # only the two names that are needed instead of the whole image module.

    # Reset sensor
    sensor.reset()

    # Set sensor settings
    sensor.set_contrast(1)
    sensor.set_gainceiling(16)
    # Max resolution for template matching with SEARCH_EX is QQVGA
    sensor.set_framesize(sensor.QQVGA)
    # You can set windowing to reduce the search image.
    #sensor.set_windowing(((640-80)//2, (480-60)//2, 80, 60))
    sensor.set_pixformat(sensor.GRAYSCALE)

    # Load template.
    # Template should be a small (eg. 32x32 pixels) grayscale image.
    num_quantity=9
    for t in range(0,num_quantity+1): # Loop to load the digit images from folder F in the root directory
        templates = [image.Image('/F/'+str(t)+'.pgm')] # Store multiple templates
    # Load the template images

    clock = time.clock()

    # Run template matching
    while (True):
        clock.tick()
        img = sensor.snapshot()

        # find_template(template, threshold, [roi, step, search])
        # ROI: The region of interest tuple (x, y, w, h).
        # Step: The loop step used (y+=step, x+=step) use a bigger step to make it faster.
        # Search is either image.SEARCH_EX for exhaustive search or image.SEARCH_DS for diamond search
        #
        # Note1: ROI has to be smaller than the image and bigger than the template.
        # Note2: In diamond search, step and ROI are both ignored.
        for t in templates:
            template = image.Image(t)
            # Run template matching against each template in turn
            r = img.find_template(template, 0.70, step=4, search=SEARCH_EX) #, roi=(10, 0, 60, 60))
            # In find_template(template, threshold, [roi, step, search]), the threshold 0.7 is the
            # similarity threshold and roi is the region to match in (a rectangle with top-left
            # corner (10, 0), 80 wide and 60 high). Note that the roi must be larger than the
            # template image and smaller than the frame buffer.
            # Mark the matched region
            if r:
                img.draw_rectangle(r)
                print(t) # Print the template name

        #print(clock.fps())

-
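One way to print a bare digit for the template-matching script above is to keep the file paths as strings and derive the label from each file name, instead of printing the path or the image object. In the posted loop, `templates` appears to be overwritten on every iteration, and `image.Image(t)` is then called on something that is already an image, which may be where the error comes from. The sketch below is plain Python; `template_paths` and `label_from_path` are hypothetical helper names, and the `/F/<digit>.pgm` layout is taken from the post.

```python
def template_paths(count, folder="/F", ext=".pgm"):
    """Return ["/F/0.pgm", "/F/1.pgm", ...] for `count` templates."""
    return ["%s/%d%s" % (folder, i, ext) for i in range(count)]

def label_from_path(path):
    """Strip the folder and the extension: "/F/7.pgm" -> "7"."""
    filename = path.split("/")[-1]
    return filename.split(".")[0]

paths = template_paths(10)                         # digits 0 through 9
labels = [label_from_path(p) for p in paths]

# On the camera (assumption, not tested here): load each template once before
# the main loop, for example
#     templates = [(label_from_path(p), image.Image(p)) for p in paths]
# then call img.find_template(template, ...) for each pair and print(label)
# when a match is found, so only the digit is sent on.
```

Keeping the label next to the loaded template means the value sent to the other board is just the digit, whatever file extension the templates use.

-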

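RE: In the shape-recognition section of the video tutorial, shouldn't x_stride (the number of pixels to skip) be changed to filter out small circles? The tutorial changed x_margin instead. (posted in OpenMV Cam)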

threshold = 3500 is about right. If too many circles are detected in the field of view, increase the threshold;

-

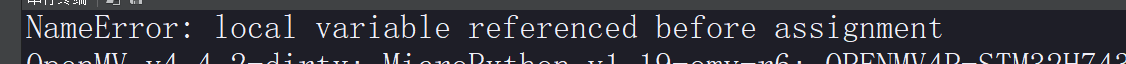

My a is clearly defined, so why do I keep getting errors saying a, b, c are not defined? (posted in OpenMV Cam)

    import sensor, image, time
    from pyb import UART

    # For color tracking to work really well, you should be in a very controlled
    # lighting environment.
    BLUE_threshold = ( 0, 80, -70, -10, -0, 30)
    # Set the threshold for blue

    sensor.reset() # Initialize the sensor
    sensor.set_pixformat(sensor.RGB565) # use RGB565.
    sensor.set_framesize(sensor.QQVGA) # Set the frame size
    sensor.skip_frames(10) # Let the new settings take effect.
    sensor.set_auto_whitebal(False)
    clock = time.clock()

    uart = UART(3,115200)
    uart.init(115200, bits=8, parity=None, stop=1)
    # Communication

    K=5000 # Choose this value yourself

    while(True):
        clock.tick()
        img = sensor.snapshot() # Take a picture and return the image.

        blobs = img.find_blobs([BLUE_threshold])
        if len(blobs) == 1:
            # Draw a rect around the blob.
            b = blobs[0]
            img.draw_rectangle(b[0:4]) # rect
            img.draw_cross(b[5], b[6]) # cx, cy
            Lm = (b[2]+b[3])/2
            length = K/Lm
            print(length)
            c=int(length)

        if blobs:
            # If the target color was found
            for b in blobs:
                # Iterate over the regions of the target color that were found
                # Draw a rect around the blob.
                img.draw_rectangle(b[0:4]) # rect
                # Mark the target color region with a rectangle
                img.draw_cross(b[5], b[6]) # cx, cy
                # Draw a cross at the center of the target color region
                print(b[5], b[6])
                # Print the center coordinates of the target object
            print("1")
            a=1
        else:
            print("2")
            b=2
        data = bytearray([0xb3,a,b,c,0x5b])

-
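A likely explanation for the "not defined" errors in the script above: `a`, `b` and `c` are each assigned in only one branch, so on the first frame where that branch is skipped the name does not exist yet when `bytearray([...])` is built. `b` is also reused as the blob object, which is not a byte value. A minimal sketch of one fix is to compute all three fields from explicit inputs with defaults. The function names and the choice of 0 as the default value are assumptions for illustration; the 0xb3 and 0x5b framing bytes come from the post.

```python
HEADER = 0xB3
FOOTER = 0x5B

def clamp_byte(value):
    """Convert to int and clamp into 0..255 so bytearray() never raises ValueError."""
    return max(0, min(255, int(value)))

def make_packet(blob_count, length=0):
    """Build the 5-byte frame [0xb3, a, b, c, 0x5b] with every field always defined."""
    a = 1 if blob_count > 0 else 0                        # target color found
    b = 2 if blob_count == 0 else 0                       # nothing found
    c = clamp_byte(length) if blob_count == 1 else 0      # distance, only for one blob
    return bytearray([HEADER, a, b, c, FOOTER])

# Example values standing in for len(blobs) and K / Lm on the camera.
packet_one_blob = make_packet(1, 41.6)
packet_no_blob = make_packet(0)
```

In the main loop this would be called once per frame, for example `uart.write(make_packet(len(blobs), length))`, with `length` set to 0 before the `if` blocks.

-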

I want to use black color and circular shape together as the detection condition. How should I add that? (posted in OpenMV Cam)

    import sensor, image, time
    from pyb import UART

    sensor.reset()
    sensor.set_pixformat(sensor.RGB565) # grayscale is faster
    sensor.set_framesize(sensor.QVGA)
    sensor.skip_frames(time = 2000)
    clock = time.clock()

    uart = UART(3,115200)
    uart.init(115200, bits=8, parity=None, stop=1)

    while(True):
        clock.tick()
        img = sensor.snapshot().lens_corr(strength = 1.8, zoom = 1.0)
        for c in img.find_circles(threshold = 2200, x_margin = 10, y_margin = 10, r_margin = 10,
                                  r_min = 2, r_max = 15, r_step = 2,roi=(53,33,196,203)):
            img.draw_circle(c.x(), c.y(), c.r(), color = (255, 0, 0)) # Draw the circle, outlined in red
            x=int(c.x())
            y=int(c.y())
            data = bytearray([0xb3,x,y,0x5b])
            uart.write(data)
            print(x,y)

-
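One way to require both "circle" and "black" in the script above is to take each detected circle, look at the average color inside it, and keep the circle only if that average falls inside a black LAB threshold. The sketch below covers the plain-Python part: a square region inscribed in the circle and a threshold test. `circle_roi`, `in_lab_threshold` and the values in `BLACK_THRESHOLD` are assumptions for illustration and would need tuning with the IDE's threshold editor.

```python
# Hypothetical LAB threshold for "black": (l_min, l_max, a_min, a_max, b_min, b_max)
BLACK_THRESHOLD = (0, 30, -20, 20, -20, 20)

def circle_roi(x, y, r):
    """Return an (x, y, w, h) square that fits inside the circle, avoiding background corners."""
    half = max(1, (r * 7) // 10)        # about r / sqrt(2)
    return (x - half, y - half, 2 * half, 2 * half)

def in_lab_threshold(lab, threshold):
    """True if the (l, a, b) triple lies inside the 6-value threshold."""
    l, a, b = lab
    l_min, l_max, a_min, a_max, b_min, b_max = threshold
    return l_min <= l <= l_max and a_min <= a <= a_max and b_min <= b <= b_max

# On the camera (assumption, not tested here), inside the find_circles loop:
#     stats = img.get_statistics(roi=circle_roi(c.x(), c.y(), c.r()))
#     mean_lab = (stats.l_mean(), stats.a_mean(), stats.b_mean())
#     if in_lab_threshold(mean_lab, BLACK_THRESHOLD):
#         ...draw the circle and send x, y over the UART...
example_roi = circle_roi(50, 40, 10)
```

Checking the color after the shape keeps the existing `find_circles` call unchanged and only adds one condition before the UART write.

-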

I only want to detect within my ROI, but the roi I added doesn't seem to have any effect (posted in OpenMV Cam)

    import sensor, image, time
    from pyb import UART

    sensor.reset()
    sensor.set_pixformat(sensor.RGB565) # grayscale is faster
    sensor.set_framesize(sensor.QQVGA)
    sensor.skip_frames(time = 2000)
    clock = time.clock()

    uart = UART(3,115200)
    uart.init(115200, bits=8, parity=None, stop=1)

    area= (66, 100, -31, 85, -8, 94)

    while(True):
        clock.tick()
        img = sensor.snapshot().lens_corr(strength = 1.8, zoom = 1.0)
        for c in img.find_circles(threshold = 2000, x_margin = 10, y_margin = 10, r_margin = 10,
                                  r_min = 2, r_max = 15, r_step = 2):
            roi=(18,7,113,106) # Region of interest
            img.draw_circle(area,c.x(), c.y(), c.r(), color = (255, 0, 0)) # Draw the circle, outlined in red
            x=int(c.x())
            y=int(c.y())
            data = bytearray([0xb3,x,y,0x5b])
            uart.write(data)
            print(x,y)
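In the script above, `roi` is assigned inside the loop but never passed to `find_circles`, so the search still runs over the whole frame. `area` is also passed as an extra first argument to `draw_circle`. The direct route is probably to pass `roi=(18,7,113,106)` as a keyword argument to `find_circles`, as the previous post does. As a complement, the sketch below shows a plain-Python post-filter that keeps only circles whose center lies inside the ROI. `point_in_roi` and `circles_in_roi` are hypothetical helper names, and circles are represented here as `(x, y, r)` tuples for illustration.

```python
ROI = (18, 7, 113, 106)   # (x, y, w, h), taken from the post

def point_in_roi(x, y, roi):
    """True if (x, y) lies inside the (x, y, w, h) rectangle; right and bottom edges exclusive."""
    rx, ry, rw, rh = roi
    return rx <= x < rx + rw and ry <= y < ry + rh

def circles_in_roi(circles, roi):
    """Keep only the (x, y, r) circles whose center falls inside the ROI."""
    return [c for c in circles if point_in_roi(c[0], c[1], roi)]

# Example with made-up detections standing in for find_circles results.
detections = [(20, 10, 5), (5, 5, 4), (130, 112, 6), (140, 50, 3)]
kept = circles_in_roi(detections, ROI)
```

Passing the ROI to `find_circles` is usually preferable because it also reduces the area searched; the filter is mainly useful when the region of interest is decided after detection.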