So how do I get the data I sent? Can't I get it out with an index?
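In MicroPython, as in Python 3, `uart.read()` returns a `bytes` object rather than a string. Indexing it therefore gives integer byte values, not characters. The following minimal sketch is plain Python, and the helper name `to_text` is hypothetical. It shows indexing, slicing and decoding the `b'111\r\n'` value from the question:

```python
def to_text(raw):
    """Turn one uart.read() result into the text that was sent, without CR/LF."""
    if raw is None:              # pyb's UART.read() returns None when nothing arrived before the timeout
        return None
    return raw.decode().strip()  # bytes -> str, then drop "\r\n" (and any surrounding whitespace)

raw = b"111\r\n"            # what print(buf) showed
first = raw[0]              # indexing bytes gives an int (ASCII code of '1'): 49
first_char = chr(raw[0])    # convert that code back to a character: '1'
payload = raw[:-2]          # slicing gives bytes without the trailing "\r\n": b'111'
text = to_text(raw)         # '111' as a str; int(text) gives the number 111
```

Slicing with `raw[:-2]` assumes the sender always appends exactly `\r\n`. `strip()` is more forgiving if the terminator varies.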

Posts by g36h

-

UART receive problem: the output contains extra characters (posted in OpenMV Cam)

Why does the received data contain extra characters? For example, when I send 111, I receive b'111\r\n'. How do I get just the characters I sent?

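```python
import time
from pyb import UART

uart = UART(3, 115200)  # UART 3 at 115200 baud

buf = 0

while True:
    buf = uart.read()   # raw bytes received (e.g. b'111\r\n'), or None on timeout
    print(buf)
    time.sleep_ms(1000)
```

-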
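`uart.read()` returns whatever bytes are waiting at that moment, so one message such as `111\r\n` can arrive split across two reads. The following is a minimal sketch of a line buffer. It assumes each message ends with `\n`. The class name `LineAssembler` is hypothetical and not part of `pyb`.

```python
class LineAssembler:
    """Collect raw UART chunks and return complete lines without the CR/LF terminator."""

    def __init__(self):
        self._buf = b""

    def feed(self, chunk):
        if not chunk:                  # uart.read() may give None (timeout) or b""
            return []
        self._buf += chunk
        lines = []
        while b"\n" in self._buf:      # every "\n" closes one complete message
            line, self._buf = self._buf.split(b"\n", 1)
            lines.append(line.rstrip(b"\r").decode())
        return lines                   # any unfinished tail stays buffered for the next call
```

On the camera, each `uart.read()` result could be passed to `feed()` inside the loop. pyb's `UART.readline()` is a simpler alternative when waiting for the read timeout is acceptable.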

Image sent over UART displays incorrectly: premature end of data segment? (posted in OpenMV Cam)

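Error: `Corrupt JPEG data: premature end of data segment`

The image does not display correctly. I send it over UART with `uart.write(img.compressed(quality=50))`.

The PC software does display the image after receiving it, but, as shown in the image, it comes out split into segments. What is causing this?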
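`Corrupt JPEG data: premature end of data segment` means the decoder ran out of bytes before the JPEG ended. When streaming over UART, one common cause is that the receiver decodes whatever has arrived so far, so a frame gets cut short or two frames get mixed. Below is a minimal sketch of length-prefixed framing for the PC side. It assumes the camera writes a 2-byte sync marker and a 4-byte little-endian length before each JPEG. The marker value and the names `frame_jpeg` and `FrameReceiver` are hypothetical, not an OpenMV protocol.

```python
import struct

MAGIC = b"\xaa\x55"              # hypothetical sync marker written before every frame
HEADER_LEN = len(MAGIC) + 4      # marker + 4-byte little-endian payload length

def frame_jpeg(jpeg_bytes):
    """Sender side: prefix one JPEG payload with the marker and its length."""
    return MAGIC + struct.pack("<I", len(jpeg_bytes)) + jpeg_bytes

class FrameReceiver:
    """Receiver side: accumulate UART chunks and return only complete JPEG payloads."""

    def __init__(self):
        self.buf = bytearray()

    def feed(self, chunk):
        self.buf.extend(chunk)
        frames = []
        while True:
            start = self.buf.find(MAGIC)
            if start < 0:
                # No marker yet: keep only the last byte, which may be half of MAGIC.
                del self.buf[:max(0, len(self.buf) - 1)]
                break
            del self.buf[:start]                 # drop noise before the marker
            if len(self.buf) < HEADER_LEN:
                break                            # header not complete yet
            (size,) = struct.unpack("<I", bytes(self.buf[len(MAGIC):HEADER_LEN]))
            if len(self.buf) < HEADER_LEN + size:
                break                            # payload not complete yet; wait for more data
            frames.append(bytes(self.buf[HEADER_LEN:HEADER_LEN + size]))
            del self.buf[:HEADER_LEN + size]     # consume this frame and look for the next one
        return frames
```

The receiver only passes a buffer to the JPEG decoder once exactly `size` bytes have arrived, so a partially received image is never displayed.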

-

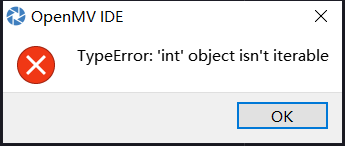

RE: The syntax is correct, so what does this "not iterable" error mean? (posted in OpenMV Cam)

```python
import sensor, image, time
from pyb import UART
from pyb import LED
from image import SEARCH_EX, SEARCH_DS

#pink_threshold = (65, 21, 7, 67, 55, -2)
#pink_threshold = (42, 62, 67, 14, -16, 65)
pink_threshold = (65, 21, 7, 67, 55, -2)
pink_color_code = 1  # code = 2^0 = 1

uart = UART(3, 115200)
uart.init(115200, bits=8, parity=None, stop=1)  # 8 data bits, no parity, 1 stop bit

sensor.reset()                       # initialize the camera
sensor.set_contrast(1)               # contrast
sensor.set_gainceiling(16)           # image gain ceiling
sensor.set_pixformat(sensor.RGB565)  # select RGB565 pixel format
sensor.set_framesize(sensor.QQVGA)   # use QQVGA for speed
sensor.skip_frames(time=2000)        # let new settings take effect
#sensor.set_pixformat(sensor.GRAYSCALE)
sensor.set_auto_gain(False)          # auto gain must be off for color tracking
sensor.set_auto_whitebal(False)      # white balance is on by default; turn it off for color recognition

# "444.pgm." had a stray trailing dot, so that template would fail to load
templates = ["111.pgm", "221.pgm", "333.pgm", "334.pgm", "335.pgm", "443.pgm", "444.pgm",
             "555.pgm", "556.pgm", "557.pgm", "666.pgm", "667.pgm", "777.pgm", "778.pgm", "888.pgm"]
clock = time.clock()  # tracks FPS

# Stage 1: match all templates until one is found; remember its first character.
shj = 0
while shj == 0:
    clock.tick()
    img = sensor.snapshot()
    img = img.to_grayscale()
    for t in templates:
        template = image.Image(t)
        r = img.find_template(template, 0.70, step=4, search=SEARCH_EX)
        if r:
            shj = t[0]
            LED(2).on()
            time.sleep_ms(3000)
            LED(2).off()

# Keep only the templates that start with the same character.
# Named `candidates` instead of `x`: the blob loop below also assigned x = blob[0],
# which replaced this list with an int, so the next `for t in x:` raised
# "TypeError: 'int' object is not iterable".
candidates = []
for i in range(len(templates)):
    if shj == templates[i][0]:
        candidates.append(templates[i])

flag = 1
while True:
    clock.tick()
    img = sensor.snapshot()
    img = img.to_grayscale()
    if flag == 1:
        for t in candidates:
            h = image.Image(t)
            r = img.find_template(h, 0.70, step=4, search=SEARCH_EX)
            if r:
                img.draw_rectangle(r, 0)
                # report which half of the frame the match center lies in
                if r[0] + (r[2] / 2) > sensor.width() / 2:
                    uartData = bytearray([0xa5, 0x2b, 0x01])
                else:  # `else` so uartData is always defined before uart.write()
                    uartData = bytearray([0xa5, 0x2b, 0x02])
                flag = 0
                LED(1).on()
                uart.write(uartData)

    clock.tick()
    img = sensor.snapshot()
    blobs = img.find_blobs([pink_threshold], area_threshold=100)
    if blobs:  # the target color was found
        for blob in blobs:  # iterate over the detected color regions
            bx = blob[0]
            by = blob[1]
            width = blob[2]       # blob bounding-box width
            height = blob[3]      # blob bounding-box height
            center_x = blob[5]    # blob center x
            center_y = blob[6]    # blob center y
            color_code = blob[8]  # color code
            # label the color
            if color_code == pink_color_code:
                img.draw_string(bx, by - 10, "red", color=(0xFF, 0x00, 0x00))
            # mark the target region with a rectangle
            img.draw_rectangle([bx, by, width, height])
            # draw a cross at the center of the region
            img.draw_cross(center_x, center_y)
```

-

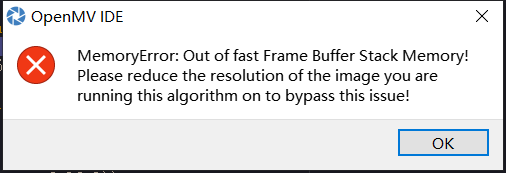

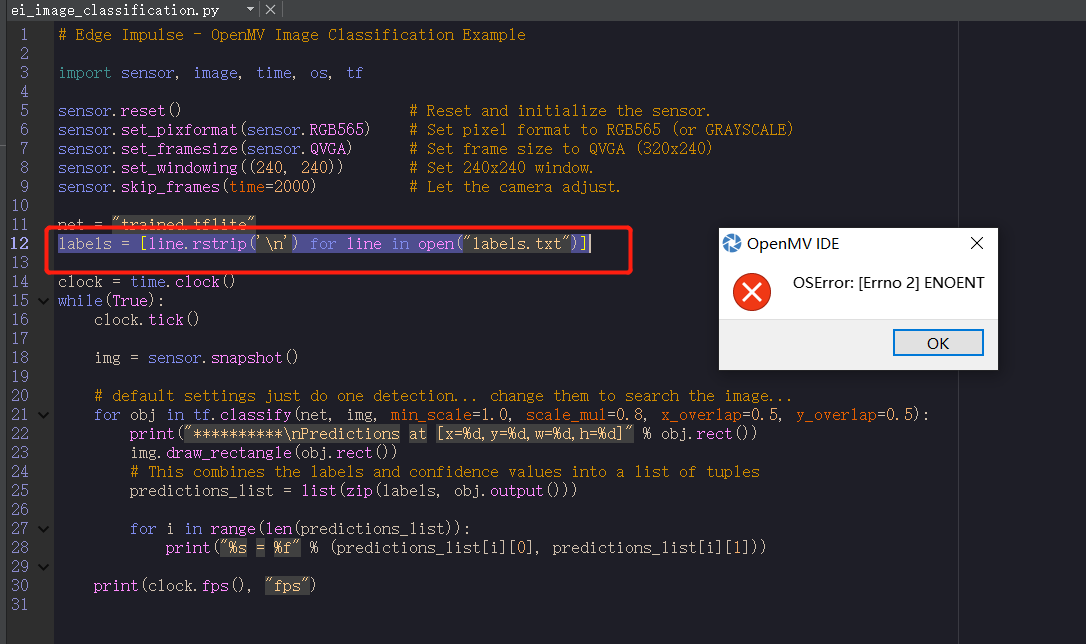
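The "not iterable" error in the script above comes from reusing the name `x`. It first holds the list of matching template names, and then the blob loop rebinds it to `blob[0]`, which is an `int`. Below is a minimal standalone reproduction in plain Python. The function names are hypothetical.

```python
def find_candidates(templates, digit):
    """Return the template filenames whose first character equals `digit`."""
    return [name for name in templates if name[0] == digit]

def reproduce_shadowing_bug(templates):
    """Rebind a list variable to an int, then try to iterate it; return the error text."""
    x = find_candidates(templates, "1")  # x starts as a list of filenames
    x = 12                               # ...then is rebound to a blob's x coordinate (an int)
    try:
        for _ in x:                      # iterating an int raises TypeError
            pass
    except TypeError as exc:
        return str(exc)
    return None
```

Giving the list and the coordinate different names keeps the list intact between loop iterations.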

MemoryError: Out of fast Frame Buffer Stack Memory (posted in OpenMV Cam)

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while True:
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
```

-
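The `zip(labels, obj.output())` line in the example pairs each label with its confidence score. The following minimal sketch picks the single most likely class from those pairs. The function name `top_prediction` and the `min_score` cut-off of 0.5 are assumptions and not part of the Edge Impulse example.

```python
def top_prediction(labels, scores, min_score=0.5):
    """Return (label, score) for the highest-confidence class, or None if below min_score."""
    pairs = list(zip(labels, scores))          # same pairing as predictions_list
    if not pairs:
        return None
    label, score = max(pairs, key=lambda p: p[1])
    return (label, score) if score >= min_score else None
```

Inside the loop, this could be called as `top_prediction(labels, obj.output())`.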

RE: Error partway through running: what is going on? I have an OpenMV4 Plus. The image appears, and then this error is raised (posted in OpenMV Cam)

@kidswong999 Hi, have a lot of libraries been removed? Many of my programs no longer run.

-


@kidswong999 Hi, I downloaded this test as well, and it has the same problem: it raises that error.

-


@kidswong999 Hi, yes, it's there. I formatted the card as FAT32 and copied the files back, but it still behaves the same way.

-


@kidswong999 I inserted an SD card because there wasn't enough space. Could that be affecting this?

-


@kidswong999 Hi, I tried it, and it still fails partway through: the error is raised about 1 second after the image appears.

-


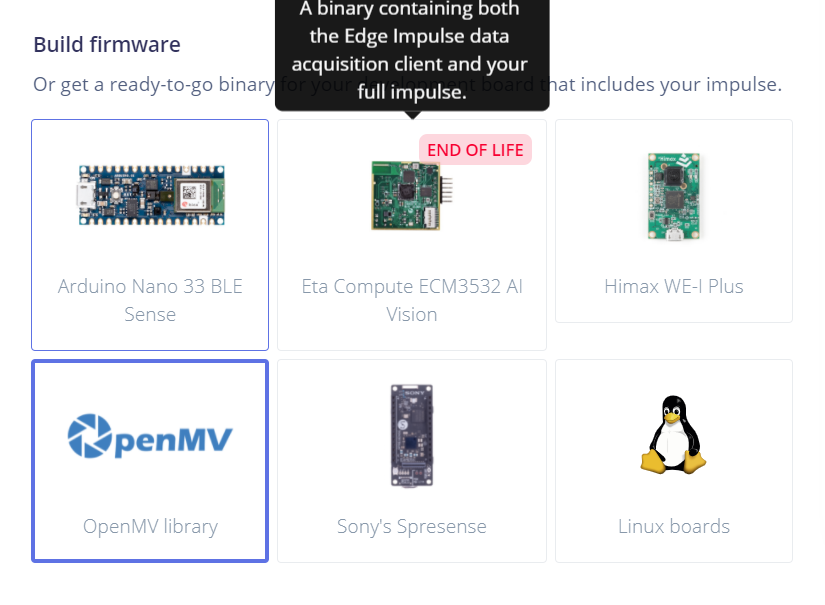

@kidswong999

I generated it with this; the library version is no longer available.

-


@kidswong999

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))     # Set 240x240 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while True:
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
```

It keeps giving this error.
