Posts by f6xi

-

RE: With a model trained in Edge Impulse, how can I read OpenMV's real-time recognition probability (even when it is below the minimum confidence)? Posted in OpenMV Cam

Is there another way? We need to meet the condition for grabbing ripe fruit, so the confidence threshold can't be set too low.
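A possible workaround, sketched under assumptions: in the original script, `thresholds=[(math.ceil(min_confidence * 255), 255)]` makes `net.detect()` drop everything under `min_confidence`, so lower scores never reach the Python code at all. One option is to pass a lower threshold to `net.detect()` only for reporting, and keep the strict grab condition (`s > 0.75` in the original) as a separate check in Python. `REPORT_CONFIDENCE`, `GRAB_CONFIDENCE`, `to_pixel_threshold()` and `decide()` are hypothetical names, and 0.30 is an arbitrary example value.

```python
import math

# Hypothetical values: REPORT_CONFIDENCE is an assumed, lower threshold passed to
# net.detect() so weak detections are still returned; GRAB_CONFIDENCE is the
# stricter cut-off taken from the original script (s > 0.75).
REPORT_CONFIDENCE = 0.30
GRAB_CONFIDENCE = 0.75


def to_pixel_threshold(confidence):
    """Map a 0..1 confidence to the 0..255 range used by thresholds=[(lo, 255)]."""
    return math.ceil(confidence * 255)


def decide(scores, grab_confidence=GRAB_CONFIDENCE):
    """Return (best_score, should_grab) for one frame.

    `scores` holds the per-detection scores (0..1) seen in this frame; an empty
    list means nothing was detected even at the low reporting threshold.
    Uses a strict '>' to match the original `if s > 0.75` check.
    """
    best = max(scores) if scores else 0.0
    return best, best > grab_confidence
```

On the camera you would pass `thresholds=[(to_pixel_threshold(REPORT_CONFIDENCE), 255)]` to `net.detect()`, append each detection's score (`d[4]`, as the original script reads it) to a `scores` list, print `best` every frame, and only write the `st` frame when `should_grab` is true. Lowering the threshold lets more pixels pass, so the boxes (and possibly the reported scores) may change too; that is worth checking on the device.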

-

With a model trained in Edge Impulse, how can I read OpenMV's real-time recognition probability (even when it is below the minimum confidence)? Posted in OpenMV Cam

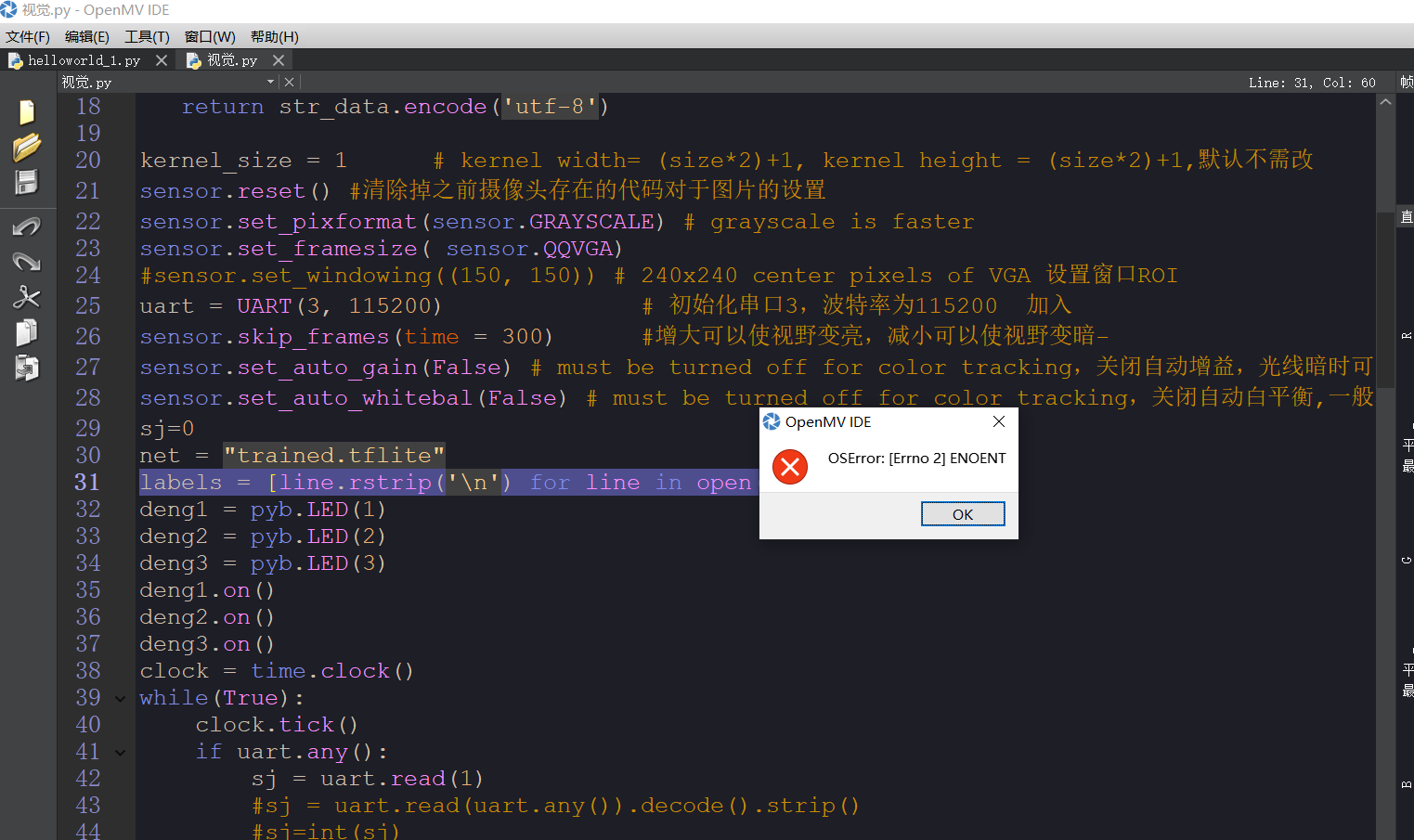

```python
# Edge Impulse - OpenMV Object Detection Example

import sensor, image, time, os, tf, math, uos, gc
import pyb
import json
from pyb import UART

sensor.reset()                          # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)     # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)       # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))        # Set 240x240 window.
sensor.skip_frames(time=2000)           # Let the camera adjust.

def modified_data(data):
    data = int(data)  # Convert data to an integer
    str_data = ''
    if data < 10:  # Center x, center y, or square root of the area is < 10
        str_data = str_data + '000' + str(data)  # Pad every value to 4 characters, e.g. 8 -> "0008"
    elif data >= 10 and data < 100:
        str_data = str_data + '00' + str(data)   # e.g. 88 -> "0088"
    elif data >= 100 and data < 1000:
        str_data = str_data + '0' + str(data)    # e.g. 888 -> "0888"
    else:  # 1000 or more
        str_data = str_data + str(data)          # e.g. 8888 -> "8888"
    # Encode each character as UTF-8 (identical to ASCII for digits);
    # the digit 0 corresponds to ASCII code 48.
    return str_data.encode('utf-8')

net = None
labels = None
min_confidence = 0.70
uart = UART(3, 115200)

try:
    # load the model, alloc the model file on the heap if we have at least 64K free after loading
    net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
except Exception as e:
    raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:
    labels = [line.rstrip('\n') for line in open("labels.txt")]
except Exception as e:
    raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

colors = [ # Add more colors if you are detecting more than 7 types of classes at once.
    (255,   0,   0),
    (  0, 255,   0),
    (255, 255,   0),
    (  0,   0, 255),
    (255,   0, 255),
    (  0, 255, 255),
    (255, 255, 255),
]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # Reset every frame so s and t always exist, even when nothing is detected.
    # (Previously both were undefined on a frame with no detections, and t was
    # never set to 0, so 'wz' could never be sent.)
    s = 0
    t = 0

    # detect() returns all objects found in the image (split out per class already).
    # We skip class index 0, as that is the background, and then draw a rectangle
    # around each object.
    for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):
        if (i == 0): continue  # background class
        if (len(detection_list) == 0): continue  # no detections for this class?

        print("********** %s **********" % labels[i])
        for d in detection_list:
            [x, y, w, h] = d.rect()
            center_x = math.floor(x + (w / 2))
            center_y = math.floor(y + (h / 2))
            print('x %d\ty %d' % (center_x, center_y))
            img.draw_rectangle(d.rect())
            print(w*h)
        s = detection_list[0][4]  # Score of the first detection in this class
        print(s)

    print(clock.fps(), "fps", end="\n\n")

    if s > 0.75:
        t = 1
        x = modified_data(center_x)
        y = modified_data(center_y)
        p = modified_data(math.sqrt(w*h))
        uart.write('st')  # Send 'st' to the MCU (apparently a start-of-frame marker)
        uart.write(x)     # Send the target's center x (padded)
        uart.write(y)     # Send the target's center y (padded)
        uart.write(p)     # Send the square root of the target's area (padded)
        time.sleep(0.01)
        print(t)
    if s < 0.75 and t == 0:
        uart.write('wz')
        time.sleep(0.01)
```
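On a confident detection, the script writes a UART frame made of `st` followed by three 4-digit ASCII fields: center x, center y, and the square root of the box area. The sketch below is a compact equivalent of `modified_data()` for non-negative values (using `'%04d'` formatting), plus a hypothetical `parse_frame()` showing how the receiving side could split the 14 bytes back apart. `pad4`, `build_frame` and `parse_frame` are illustrative names, not part of OpenMV; the fixed 14-byte length assumes every field stays below 10000, which holds for a 240x240 window.

```python
import math


def pad4(value):
    """Zero-pad a non-negative number to four ASCII digits (same as modified_data())."""
    return ('%04d' % int(value)).encode('utf-8')


def build_frame(center_x, center_y, w, h):
    """b'st' + center x + center y + sqrt(w*h), each field four digits: 14 bytes."""
    return b'st' + pad4(center_x) + pad4(center_y) + pad4(math.sqrt(w * h))


def parse_frame(frame):
    """Hypothetical receiver-side check: recover (x, y, sqrt_area) from a frame."""
    if len(frame) != 14 or frame[:2] != b'st':
        raise ValueError('not a target frame')
    body = frame[2:].decode('ascii')
    return int(body[0:4]), int(body[4:8]), int(body[8:12])


# Example: a 40x90 box centred at (120, 96), so sqrt(40 * 90) = 60
frame = build_frame(120, 96, 40, 90)
print(frame)               # b'st012000960060'
print(parse_frame(frame))  # (120, 96, 60)
```

Keeping every field at a fixed width means the receiver can locate each value by offset alone, without needing a delimiter between fields.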