import sensor, image, time, os, tf, math, uos, gc, ustruct,pyb#,thread

from pyb import UART,LED

from image import SEARCH_EX, SEARCH_DS

from pyb import LED

from pid import PID

rho_pid = PID(p=0.4, i=0)

theta_pid = PID(p=0.001, i=0)

THRESHOLD = (0, 36, -46, 50, 44, -83)

threshold = (245, 255)

sensor.reset()

sensor.set_vflip(False)

sensor.set_hmirror(False)

sensor.set_pixformat(sensor.GRAYSCALE)

sensor.set_framesize(sensor.QQVGA)

sensor.set_windowing((240,240))

sensor.skip_frames(time=2000)

sensor.set_contrast(1)

sensor.set_gainceiling(16)

sensor.set_auto_gain(False) # 颜色跟踪必须关闭自动增益

sensor.set_auto_whitebal(False) # 颜色跟踪必须关闭白平衡

clock = time.clock()

roi = (10,0,30,30)#,(140,50,90,100)]

led = pyb.LED(4)

net = None

labels = None

min_confidence = 0.3

flag = 0

rho = 0

theta = 0

def track(rho,theta):

img = sensor.snapshot().binary([THRESHOLD])

line = img.get_regression([(100,100)], robust = True)

if (line):

rho_err = abs(line.rho())-img.width()/2

if line.theta()>90:

theta_err = line.theta()-180

else:

theta_err = line.theta()

img.draw_line(line.line(), color = 127)

print(rho_err,line.magnitude(),rho_err)

if line.magnitude()>8:

#if -40<b_err<40 and -30<t_err<30:

rho_output = rho_pid.get_pid(rho_err,1)

theta_output = theta_pid.get_pid(theta_err,1)

output = rho_output+theta_output

# car.run(50+output, 50-output)

else:

car.run(0,0)

else:

car.run(50,-50)

pass

#print(clock.fps())

print(rho_err,line.magnitude(),rho_err)

outuart(rho,theta)

try:

net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))

except Exception as e:

raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:

labels = [line.rstrip('\n') for line in open("labels.txt")]

except Exception as e:

raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

colors = [

(255, 0, 0),

( 0, 255, 0),

(255, 255, 0),

( 0, 0, 255),

(255, 0, 255),

( 0, 255, 255),

(255, 255, 255),

]

uart = UART(3,115200)

uart.init(9600, bits=8, parity=None, stop=1)

def outuart(num):

global uart

data = ustruct.pack("<bbhhhhb",

0x2C,

0x12,

int(num),

int(88),

0x5B)

uart.write(data)

time.sleep_ms(1)

data = [0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00]

def UartReceiveDate():

global Find_Task

global Target_Num

global x

global data

data[0] = uart.readchar()

data[1] = uart.readchar()

Target_Num=int(data[0]-48)*10+int(data[1]-48)

#outuart(Target_Num)

clock = time.clock()

flag = 0

rho = 0

theta = 0

def track(rho,theta):

img = sensor.snapshot().binary([THRESHOLD])

line = img.get_regression([(100,100)], robust = True)

if (line):

rho_err = abs(line.rho())-img.width()/2

if line.theta()>90:

theta_err = line.theta()-180

else:

theta_err = line.theta()

img.draw_line(line.line(), color = 127)

print(rho_err,line.magnitude(),rho_err)

num = 0

temp_num = 0

UartReceiveDate

#track(rho,theta)

Target_Num = 0

def find_number(temp_num):

global tem

global num

while(True):

clock.tick()

img = sensor.snapshot()

if(num == 0):

sensor.set_windowing(1,1,100,100)

sensor.set_windowing(10,20,50,60)

if(num == 1):

sensor.set_windowing(60,40,180,180)

sensor.set_windowing(90,40,50,60)

for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):

if (i == 0): continue

if (len(detection_list) == 0): continue

print(" %s " % labels[i])

tem = labels[i]

templatei ="/"+str(labels[i])+".pgm"

print(templatei)

outuart(tem)

num = num + 1

for d in detection_list:

[x, y, w, h] = d.rect()

center_x = math.floor(x + (w / 2))

center_y = math.floor(y + (h / 2))

print('x %d\ty %d' % (center_x, center_y))

sensor.set_windowing(240,240)

if(num == 1):

temp_num=int(tem)*10

print(temp_num)

elif(num == 2):

temp_num+=int(tem)

print(temp_num)

outuart(temp_num)

break

if(num ==2):

break

if(Target_Num==temp_num):

print("okk")

outuart(1)

led = pyb.LED(2)

else:

print("error")

UartReceiveDate()

while(True):

clock.tick()

img = sensor.snapshot() # 拍一张照片并返回图像

blobs = img.find_blobs([threshold=(245, 255),roi=(10,0,30,30)])

if blobs==1:

#如果找到了目标颜色

for b in blobs:

#迭代找到的目标颜色区域

track(rho,theta)

else:

outuart("stop")

find_number(temp_num)

#track(rho,theta)

#find_number(temp_num)

D

d5ln

@d5ln

0

声望

7

楼层

406

资料浏览

0

粉丝

0

关注

d5ln 发布的帖子

-

174行一直报错,怎么解决发布在 OpenMV Cam

-

运行很卡顿,有试过加if uart.any(): uart.readchar()但是没有改善还是掉帧很严重发布在 OpenMV Cam

import sensor, image, time, os, tf, math, uos, gc, ustruct, pyb from pyb import UART,LED from image import SEARCH_EX, SEARCH_DS sensor.reset() sensor.set_pixformat(sensor.GRAYSCALE) sensor.set_framesize(sensor.QQVGA) sensor.set_windowing((240,240)) sensor.skip_frames(time=2000) sensor.set_contrast(1) sensor.set_gainceiling(16) led = pyb.LED(4) # Red LED = 1, Green LED = 2, Blue LED = 3, IR LEDs = 4. ''' template1 = image.Image("/1.pgm") template2 = image.Image("/2.pgm") template3 = image.Image("/3.pgm") template4 = image.Image("/4.pgm") templates = ["/1.pgm","/2.pgm","/3.pgm","/4.pgm"] ''' rois = [(20,20,100,150),(140,50,90,100)] net = None labels = None min_confidence = 0.5 try: net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024))) except Exception as e: raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') try: labels = [line.rstrip('\n') for line in open("labels.txt")] except Exception as e: raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') colors = [ (255, 0, 0), ( 0, 255, 0), (255, 255, 0), ( 0, 0, 255), (255, 0, 255), ( 0, 255, 255), (255, 255, 255), ] uart = UART(3,115200) uart.init(9600, bits=8, parity=None, stop=1) def outuart(num): global uart data = ustruct.pack("<bbhhhhb", 0x2C, 0x12, int(num), int(88), 0x5B) uart.write(data) time.sleep_ms(1) data = [0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00] def UartReceiveDate(): global Find_Task global Target_Num global x global data data[0] = uart.readchar() data[1] = uart.readchar() Target_Num=int(data[0]-48)*10+int(data[1]-48) outuart(Target_Num) clock = time.clock() flag = 0 num = 0 temp_num = 0 while(True): clock.tick() img = sensor.snapshot() UartReceiveDate() if(num == 0): sensor.set_windowing(1,1,100,100) sensor.set_windowing(10,20,50,60) if(num == 1): sensor.set_windowing(60,40,180,180) sensor.set_windowing(90,40,50,60) for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])): if (i == 0): continue if (len(detection_list) == 0): continue print(" %s " % labels[i]) tem = labels[i] templatei ="/"+str(labels[i])+".pgm" print(templatei) outuart(tem) num = num + 1 for d in detection_list: [x, y, w, h] = d.rect() center_x = math.floor(x + (w / 2)) center_y = math.floor(y + (h / 2)) print('x %d\ty %d' % (center_x, center_y)) sensor.set_windowing(240,240) if(num == 1): temp_num=int(tem)*10 print(temp_num) elif(num == 2): temp_num+=int(tem) print(temp_num) outuart(temp_num) if(num==2): break led.on() time.sleep_ms(150) led.off() time.sleep_ms(100) led.on() time.sleep_ms(150) led.off() time.sleep_ms(600) ''' else: print(temp_num) temp_num = 0 if (Target_Num == temp_num): #发送回去 print("ok") outuart("ok") #print(clock.fps(), "fps", end="\n\n")''' -

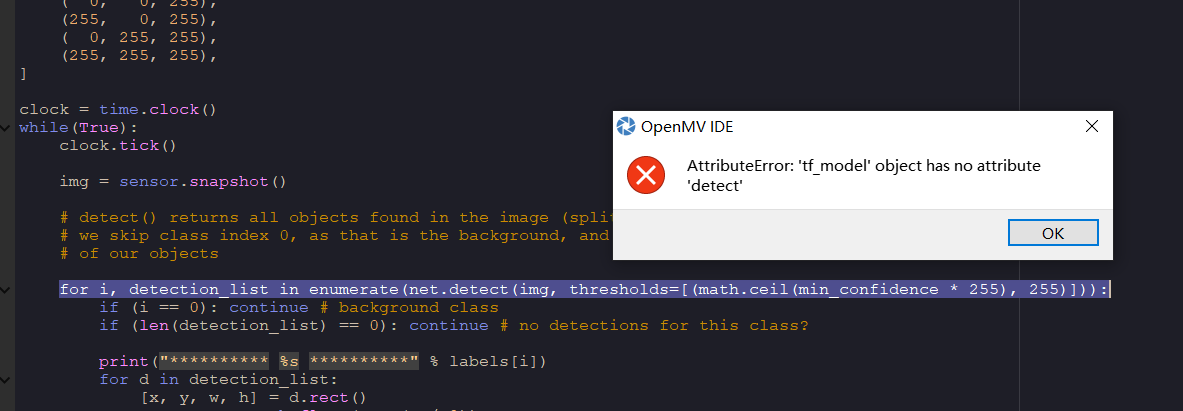

fomo检测可以分区域检测吗?为什么对roi区域划分循环识别无法实现?没有报错但是实现不了分区域,可以怎么改进?发布在 OpenMV Cam

import sensor, image, time, os, tf, math, uos, gc, ustruct from pyb import UART,LED from image import SEARCH_EX, SEARCH_DS sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.GRAYSCALE) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QQVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. sensor.set_contrast(1) sensor.set_gainceiling(16) net = None labels = None min_confidence = 0.5 rois = [(0,30,80,80),(70,30,85,85)] try: # load the model, alloc the model file on the heap if we have at least 64K free after loading net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024))) except Exception as e: raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') try: labels = [line.rstrip('\n') for line in open("labels.txt")] except Exception as e: raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') colors = [ # Add more colors if you are detecting more than 7 types of classes at once. (255, 0, 0), ( 0, 255, 0), (255, 255, 0), ( 0, 0, 255), (255, 0, 255), ( 0, 255, 255), (255, 255, 255), ] #rois = [(0,20,190,200),(150,35,160,170)] for roi in rois: clock = time.clock() num = 0 while(True): clock.tick() img = sensor.snapshot() # detect() returns all objects found in the image (splitted out per class already) # we skip class index 0, as that is the background, and then draw circles of the center # of our objects for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])): if (i == 0): continue # background class if (len(detection_list) == 0): continue # no detections for this class? print(" %s " % labels[i]) tem = labels[i] #print(tem) num = 1 for d in detection_list: [x, y, w, h] = d.rect() center_x = math.floor(x + (w / 2)) center_y = math.floor(y + (h / 2)) print('x %d\ty %d' % (center_x, center_y)) img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2) if (num != 0):break -

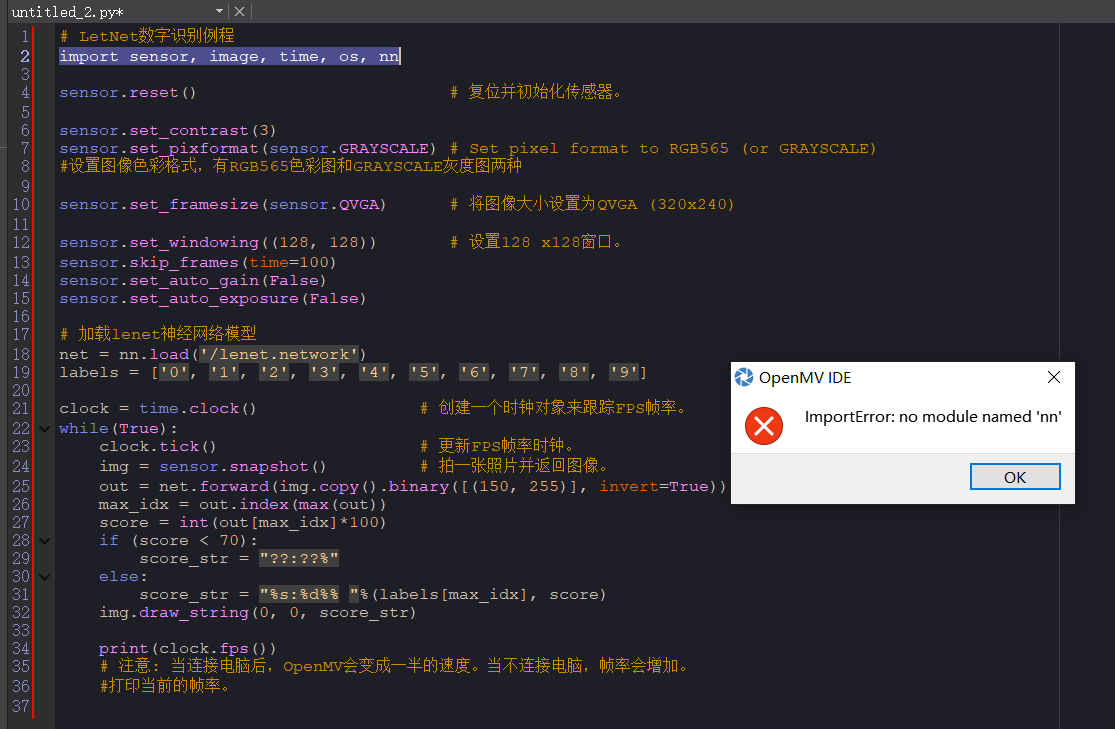

FOMO学习之后一直提示错误是为什么?跟着视频的步骤一步步来的发布在 OpenMV Cam

# Edge Impulse - OpenMV Object Detection Example import sensor, image, time, os, tf, math, uos, gc sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. net = None labels = None min_confidence = 0.5 try: # load the model, alloc the model file on the heap if we have at least 64K free after loading net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024))) except Exception as e: raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') try: labels = [line.rstrip('\n') for line in open("labels.txt")] except Exception as e: raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') colors = [ # Add more colors if you are detecting more than 7 types of classes at once. (255, 0, 0), ( 0, 255, 0), (255, 255, 0), ( 0, 0, 255), (255, 0, 255), ( 0, 255, 255), (255, 255, 255), ] clock = time.clock() while(True): clock.tick() img = sensor.snapshot() # detect() returns all objects found in the image (splitted out per class already) # we skip class index 0, as that is the background, and then draw circles of the center # of our objects for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])): if (i == 0): continue # background class if (len(detection_list) == 0): continue # no detections for this class? print("********** %s **********" % labels[i]) for d in detection_list: [x, y, w, h] = d.rect() center_x = math.floor(x + (w / 2)) center_y = math.floor(y + (h / 2)) print('x %d\ty %d' % (center_x, center_y)) img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2) print(clock.fps(), "fps", end="\n\n")