# Template Matching Example - Normalized Cross Correlation (NCC)

#

# This example shows off how to use the NCC feature of your OpenMV Cam to match

# image patches to parts of an image... expect for extremely controlled enviorments

# NCC is not all to useful.

#

# WARNING: NCC supports needs to be reworked! As of right now this feature needs

# a lot of work to be made into somethin useful. This script will reamin to show

# that the functionality exists, but, in its current state is inadequate.

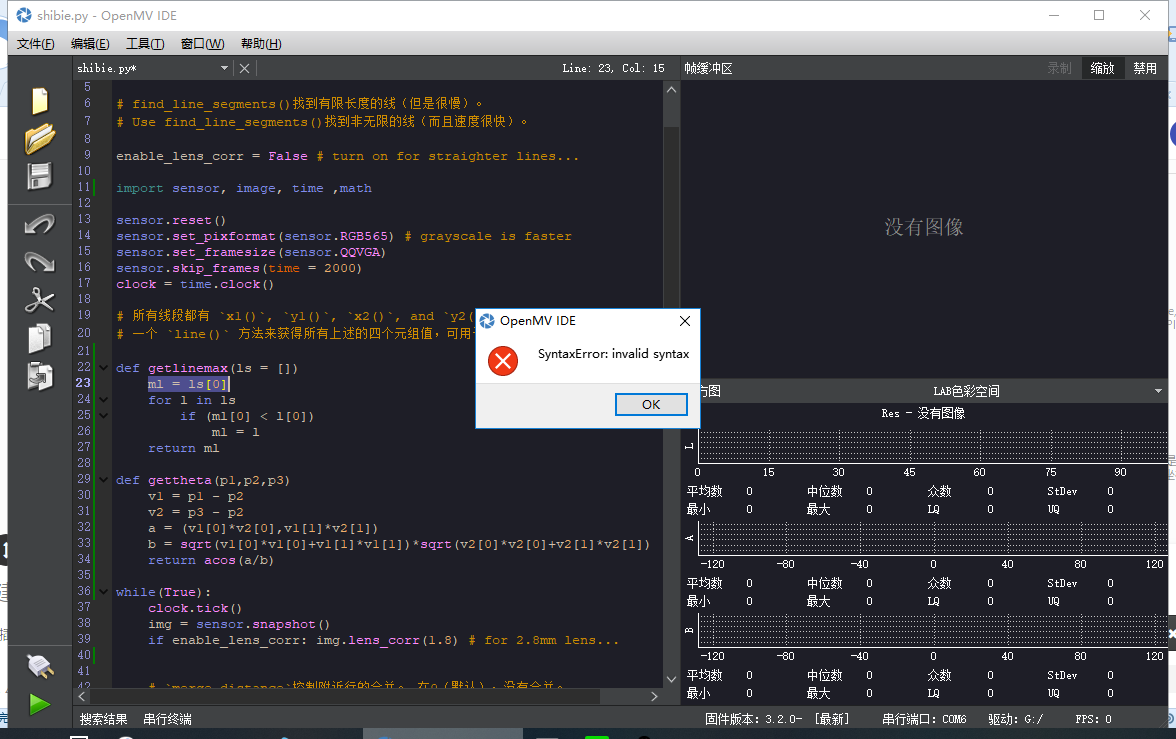

import time, sensor, image

from image import SEARCH_EX, SEARCH_DS

#从imgae模块引入SEARCH_EX和SEARCH_DS。使用from import仅仅引入SEARCH_EX,

#SEARCH_DS两个需要的部分,而不把image模块全部引入。

# Reset sensor

sensor.reset()

# Set sensor settings

sensor.set_contrast(1)

sensor.set_gainceiling(16)

# Max resolution for template matching with SEARCH_EX is QQVGA

sensor.set_framesize(sensor.QQVGA)

# You can set windowing to reduce the search image.

#sensor.set_windowing(((640-80)//2, (480-60)//2, 80, 60))

sensor.set_pixformat(sensor.GRAYSCALE)

# Load template.

# Template should be a small (eg. 32x32 pixels) grayscale image.

template = image.Image("/template.pgm")

#加载模板图片

clock = time.clock()

# Run template matching

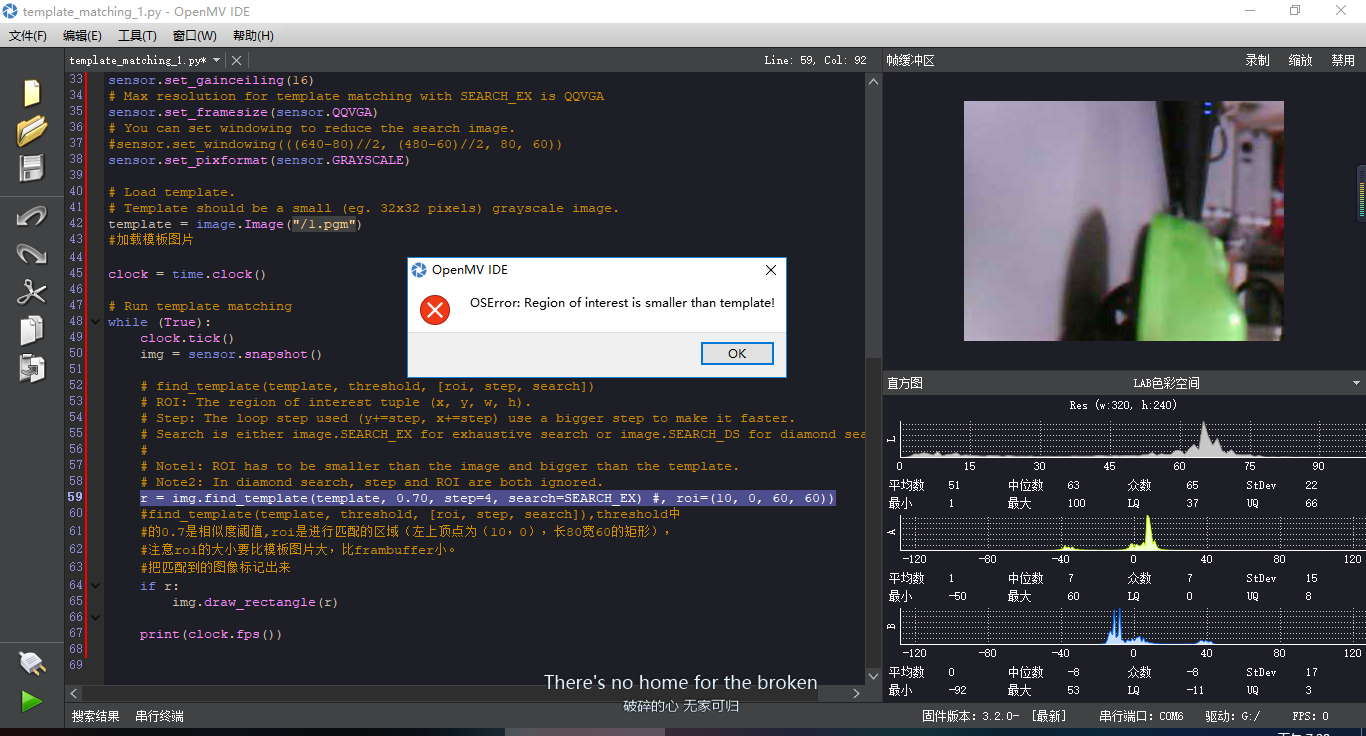

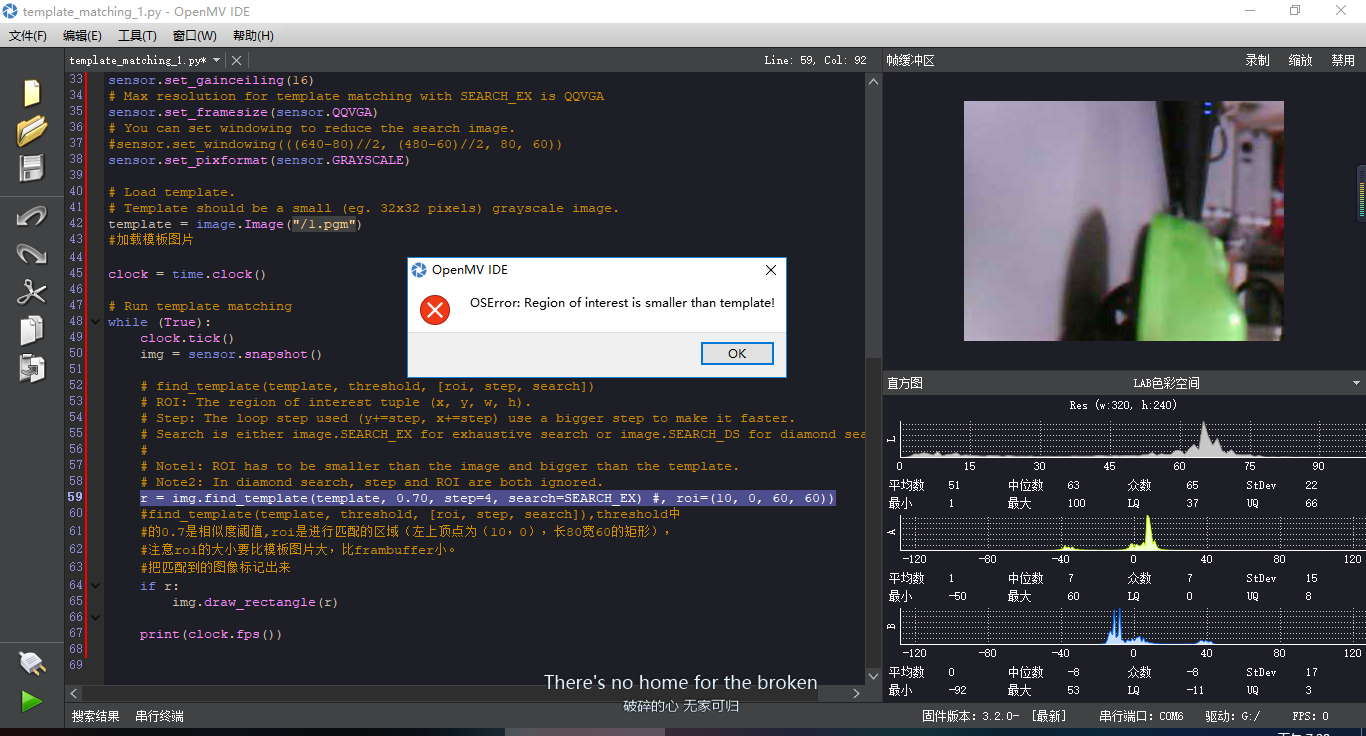

while (True):

clock.tick()

img = sensor.snapshot()

# find_template(template, threshold, [roi, step, search])

# ROI: The region of interest tuple (x, y, w, h).

# Step: The loop step used (y+=step, x+=step) use a bigger step to make it faster.

# Search is either image.SEARCH_EX for exhaustive search or image.SEARCH_DS for diamond search

#

# Note1: ROI has to be smaller than the image and bigger than the template.

# Note2: In diamond search, step and ROI are both ignored.

r = img.find_template(template, 0.70, step=4, search=SEARCH_EX) #, roi=(10, 0, 60, 60))

#find_template(template, threshold, [roi, step, search]),threshold中

#的0.7是相似度阈值,roi是进行匹配的区域(左上顶点为(10,0),长80宽60的矩形),

#注意roi的大小要比模板图片大,比frambuffer小。

#把匹配到的图像标记出来

if r:

img.draw_rectangle(r)

print(clock.fps())