Model trained on Edge Impulse by following the tutorial throws an error when run

-

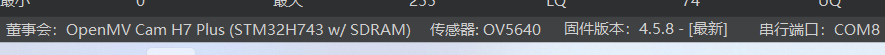

Firmware information:

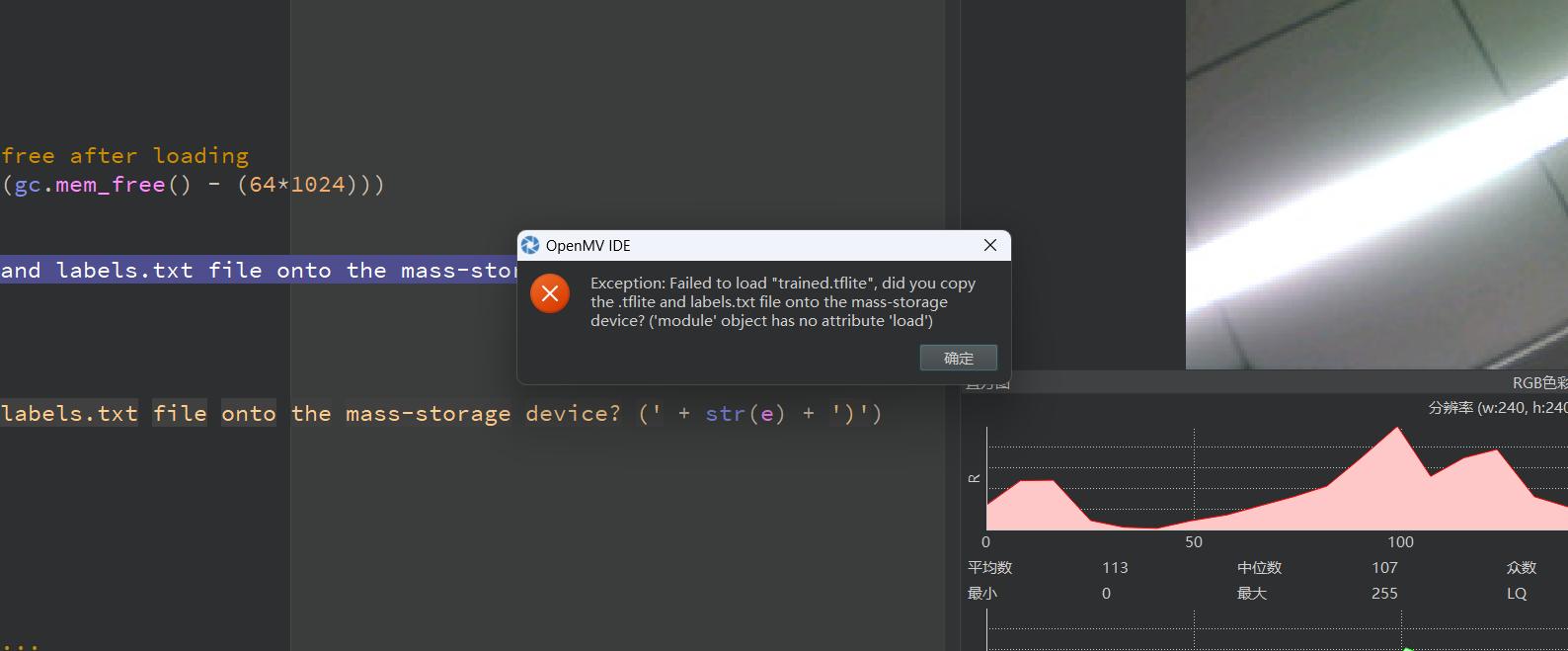

Error message:

Code:

    # Edge Impulse - OpenMV Image Classification Example

    import sensor, image, time, os, tf, uos, gc

    sensor.reset()                         # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))       # Set 240x240 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    net = None
    labels = None

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        print(e)
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    clock = time.clock()
    while(True):
        clock.tick()

        img = sensor.snapshot()

        # default settings just do one detection... change them to search the image...
        for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            # This combines the labels and confidence values into a list of tuples
            predictions_list = list(zip(labels, obj.output()))

            for i in range(len(predictions_list)):
                print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

        print(clock.fps(), "fps")

Edge Impulse training information:

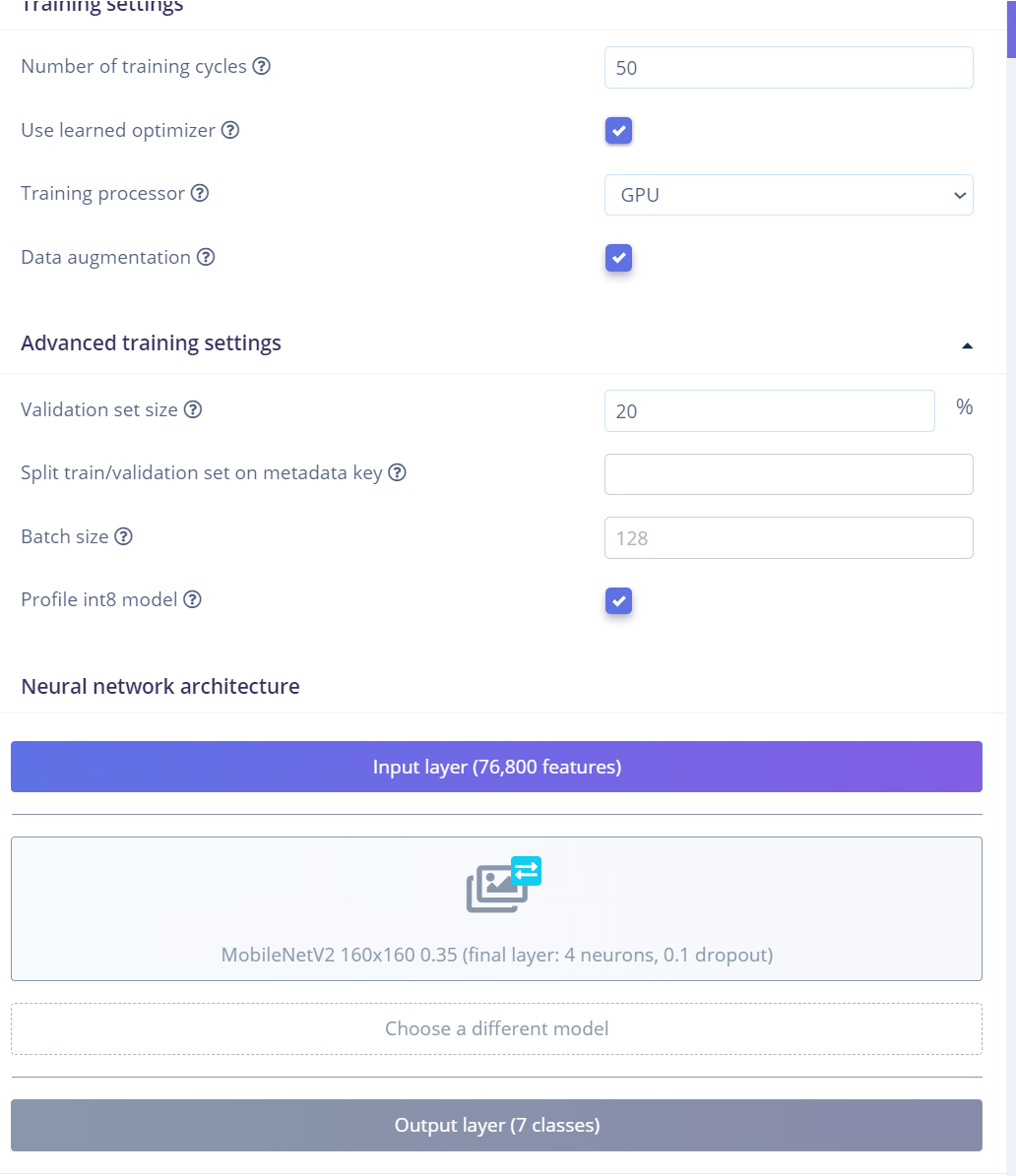
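For anyone reading the script above, the `load_to_fb` argument is just a size comparison: the model goes to the frame buffer when loading it onto the heap would leave less than 64 KB free. Here is a minimal plain-Python sketch of that check. `should_load_to_fb` is a hypothetical helper name, and the byte counts in the usage lines are made-up illustration values, not measurements from any board.

```python
def should_load_to_fb(model_size, free_heap, reserve=64 * 1024):
    """Mirror the comparison used in the script's tf.load() call.

    model_size: size of the .tflite file in bytes (uos.stat(...)[6] on the board)
    free_heap:  free heap in bytes (gc.mem_free() on the board)
    reserve:    heap to keep free after loading (64 KB in the script)
    """
    # True means the model would not leave `reserve` bytes free on the heap,
    # so the script asks for the frame buffer instead.
    return model_size > free_heap - reserve


# Illustrative numbers only (assumptions, not real measurements).
print(should_load_to_fb(200 * 1024, 230 * 1024))
print(should_load_to_fb(50 * 1024, 230 * 1024))
```

Printing the file size and `gc.mem_free()` on the board before the load call shows which branch the script takes.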

-

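You are using an OpenMV4 H7, which cannot run classification models from Edge Impulse. The OpenMV4 H7 Plus can.

I recommend the SingTown AI cloud service (星瞳AI云服务), which supports the OpenMV4 H7.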

-

@kidswong999 My Plus has this problem too.

-

My newly bought Plus has this problem too. Even with MobileNet set to the smallest resource configuration, it still fails.

-

It's a version issue. It also happens on 4.6.0; rolling back to version 4.5.4 fixes it.
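Since this thread has two script styles (the older one imports `tf`, the one for newer firmware imports `ml`), one way to see which applies to a given board is to probe for the module. This is a minimal sketch, assuming a missing module raises `ImportError`. `detect_inference_module` is a hypothetical helper name and not part of OpenMV.

```python
def detect_inference_module(importer=__import__):
    """Return "ml", "tf", or None depending on which module can be imported.

    `importer` defaults to the built-in __import__; it is a parameter only so
    the function can be exercised off-device with a stand-in.
    """
    for name in ("ml", "tf"):
        try:
            importer(name)
        except ImportError:
            continue  # module not present, try the next candidate
        return name
    return None


# On the board, print the result to choose between the two scripts.
print(detect_inference_module())
```

This only tells you which module exists. It does not say whether a particular model fits in memory.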

-

With the new firmware, use the new code below:

    import sensor
    import time
    import ml
    from ml.utils import NMS
    import math
    import image

    sensor.reset()  # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)  # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))  # Set 240x240 window.
    sensor.skip_frames(time=2000)  # Let the camera adjust.

    min_confidence = 0.4
    threshold_list = [(math.ceil(min_confidence * 255), 255)]

    # Load the model first, then print it (printing before loading raises a NameError).
    model = ml.Model("trained.tflite", load_to_fb=True)
    print(model)

    labels = [line.rstrip('\n') for line in open("labels.txt")]

    colors = [  # Add more colors if you are detecting more than 7 types of classes at once.
        (255, 0, 0),
        (0, 255, 0),
        (255, 255, 0),
        (0, 0, 255),
        (255, 0, 255),
        (0, 255, 255),
        (255, 255, 255),
    ]

    # FOMO outputs an image per class where each pixel in the image is the centroid of the trained
    # object. So, we will get those output images and then run find_blobs() on them to extract the
    # centroids. We will also run get_stats() on the detected blobs to determine their score.
    # The Non-Max-Suppression (NMS) object then filters out overlapping detections and maps their
    # position in the output image back to the original input image. The function then returns a
    # list per class which each contain a list of (rect, score) tuples representing the detected
    # objects.
    def fomo_post_process(model, inputs, outputs):
        n, oh, ow, oc = model.output_shape[0]
        nms = NMS(ow, oh, inputs[0].roi)
        for i in range(oc):
            img = image.Image(outputs[0][0, :, :, i] * 255)
            blobs = img.find_blobs(
                threshold_list, x_stride=1, area_threshold=1, pixels_threshold=1
            )
            for b in blobs:
                rect = b.rect()
                x, y, w, h = rect
                score = (
                    img.get_statistics(thresholds=threshold_list, roi=rect).l_mean() / 255.0
                )
                nms.add_bounding_box(x, y, x + w, y + h, score, i)
        return nms.get_bounding_boxes()

    clock = time.clock()
    while True:
        clock.tick()

        img = sensor.snapshot()

        for i, detection_list in enumerate(model.predict([img], callback=fomo_post_process)):
            if i == 0:
                continue  # background class
            if len(detection_list) == 0:
                continue  # no detections for this class?

            print("********** %s **********" % labels[i])
            for (x, y, w, h), score in detection_list:
                center_x = math.floor(x + (w / 2))
                center_y = math.floor(y + (h / 2))
                print(f"x {center_x}\ty {center_y}\tscore {score}")
                img.draw_circle((center_x, center_y, 12), color=colors[i])

        print(clock.fps(), "fps", end="\n")
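To make the FOMO comment in that script more concrete, here is a rough plain-Python sketch of the core idea: take one class's output grid, keep cells at or above the confidence threshold, and map each cell center back to input-image coordinates. It is a simplification and not what the script does on the board, since it skips the `find_blobs()` merging and the NMS step. `fomo_centroids` and the sample grid are hypothetical.

```python
def fomo_centroids(heatmap, input_w, input_h, min_confidence=0.4):
    """Return (center_x, center_y, score) for each cell >= min_confidence.

    heatmap: 2D list of per-cell scores in [0, 1] for a single class
    input_w, input_h: size of the image that was fed to the model
    """
    oh = len(heatmap)
    ow = len(heatmap[0])
    scale_x = input_w / ow  # input pixels per output cell, horizontally
    scale_y = input_h / oh  # input pixels per output cell, vertically

    detections = []
    for row in range(oh):
        for col in range(ow):
            score = heatmap[row][col]
            if score >= min_confidence:
                # Use the middle of the cell as the centroid estimate.
                center_x = int((col + 0.5) * scale_x)
                center_y = int((row + 0.5) * scale_y)
                detections.append((center_x, center_y, score))
    return detections


# Hypothetical 3x3 output grid for one class on a 240x240 input.
sample = [
    [0.0, 0.1, 0.0],
    [0.0, 0.2, 0.9],
    [0.0, 0.0, 0.0],
]
for center_x, center_y, score in fomo_centroids(sample, 240, 240):
    print(center_x, center_y, score)
```

Adjacent cells that belong to the same object would each be reported separately here, which is the case the blob and NMS steps in the real script handle.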