@kidswong999 Could you take a look and tell me what is wrong with this code? It keeps printing "Waiting for connections.." followed by:

socket error: -116
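
One possible reading of this output, offered as an assumption rather than a confirmed diagnosis: 116 is the value of ETIMEDOUT in newlib's errno numbering, so `socket error: -116` may simply mean that `accept()` hit the server socket's timeout because no client connected in time. The outer loop then recreates the socket and prints `Waiting for connections..` again. The sketch below reproduces that pattern on a desktop with CPython's standard `socket` module, not the WINC driver; `wait_for_client` is a hypothetical helper name.

```python
import socket

def wait_for_client(timeout_s, attempts):
    """Try accept() a few times, recreating the server socket after each timeout."""
    errors = []
    for _ in range(attempts):
        s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        try:
            s.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
            s.listen(1)
            s.settimeout(timeout_s)    # accept() raises OSError after this long
            print("Waiting for connections..")
            client, addr = s.accept()  # nobody connects in this demo
            client.close()
            return errors
        except OSError as e:
            errors.append(e)
            print("socket error:", e)
        finally:
            s.close()
    return errors

errors = wait_for_client(timeout_s=0.1, attempts=2)
```

If that reading is right, the message on its own is harmless: it repeats until a client joins the camera's access point and opens port 8080 before the timeout expires.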


Posts by ztvu

-

RE: How do I stream face-tracking video over the WiFi module? (posted in OpenMV Cam)

    import sensor, image, time, network, usocket, sys
    from pyb import UART

    SSID = 'OPENMV_AP'   # Network SSID
    KEY = '1234567890'   # Network key (must be 10 chars)
    HOST = ''            # Use first available interface
    PORT = 8080          # Arbitrary non-privileged port

    # Reset sensor
    sensor.reset()
    # Set sensor settings
    sensor.set_contrast(1)
    sensor.set_brightness(1)
    sensor.set_saturation(1)
    sensor.set_gainceiling(16)
    sensor.set_framesize(sensor.HQVGA)
    sensor.set_pixformat(sensor.GRAYSCALE)
    sensor.skip_frames(time=10)
    sensor.set_auto_whitebal(False)

    uart = UART(3, 115200)

    # Start the wlan module in AP mode.
    wlan = network.WINC(mode=network.WINC.MODE_AP)
    wlan.start_ap(SSID, key=KEY, security=wlan.WEP, channel=2)

    # You can block here while waiting for a client to connect
    #print(wlan.wait_for_sta(10000))

    def start_streaming(s):
        print('Waiting for connections..')
        client, addr = s.accept()
        # Set the client socket timeout to 2 seconds
        client.settimeout(2.0)
        print('Connected to ' + addr[0] + ':' + str(addr[1]))

        # Read the request from the client
        data = client.recv(1024)
        # The client request should be parsed here

        # Send the multipart header
        client.send("HTTP/1.1 200 OK\r\n" \
                    "Server: OpenMV\r\n" \
                    "Content-Type: multipart/x-mixed-replace;boundary=openmv\r\n" \
                    "Cache-Control: no-cache\r\n" \
                    "Pragma: no-cache\r\n\r\n")

        # FPS clock
        face_cascade = image.HaarCascade("frontalface", stages=25)

        def find_max(blobs):
            max_size = 0
            for blob in blobs:
                if blob[2]*blob[3] > max_size:
                    max_blob = blob
                    max_size = blob[2]*blob[3]
            return max_blob

        clock = time.clock()

        # Start streaming images
        # NOTE: Disable the IDE preview to increase the streaming FPS.
        while (True):
            clock.tick() # Track elapsed milliseconds between snapshots().
            frame = sensor.snapshot()
            cframe = frame.compressed(quality=35)
            header = "\r\n--openmv\r\n" \
                     "Content-Type: image/jpeg\r\n"\
                     "Content-Length:"+str(cframe.size())+"\r\n\r\n"
            client.send(header)
            client.send(cframe)

            blobs = frame.find_features(face_cascade, threshold=0.75, scale=1.35)
            if blobs:
                max_blob = find_max(blobs)
                frame.draw_rectangle(max_blob)
                frame.draw_cross(int(max_blob[0]+max_blob[2]/2), int(max_blob[1]+max_blob[3]/2))
                a = int(max_blob[0]+max_blob[2]/2)
                b = int(max_blob[1]+max_blob[3]/2)
                print("a:%d b:%d"%(a, b))
                output_str = "[%d,%d]" % (a,b)
                print('you send:',output_str)
                uart.write(output_str+'\r\n')
            else:
                print('not found!')

    while (True):
        # Create the server socket
        s = usocket.socket(usocket.AF_INET, usocket.SOCK_STREAM)
        try:
            # Bind and listen
            s.bind([HOST, PORT])
            s.listen(5)

            # Set the server socket timeout
            # NOTE: Due to a WINC FW bug, the server socket must be closed and
            # reopened if the client disconnects. Use a timeout here to close
            # and re-create the socket.
            s.settimeout(3)
            start_streaming(s)
        except OSError as e:
            s.close()
            print("socket error: ", e)

-

How do I stream face-tracking video over the WiFi module? (posted in OpenMV Cam)

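Re: How to send face-recognition video over WiFi. @kidswong999 That link is dead... is there another one? How should I change the code to combine face tracking and WiFi video streaming in a single script? Or could I just put two files in flash and, in the face-tracking program (main.py), use `import uuu` to import the other file (uuu.py)? Would both of them run at power-up?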
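
On the `import uuu` idea, a minimal sketch of standard Python import behavior, which MicroPython is expected to share in this respect: importing a file runs its top-level code to completion once, and only then does the importing script continue. The demo below runs on a desktop; `uuu_demo` and its contents are hypothetical stand-ins for `uuu.py`.

```python
import importlib
import os
import sys
import tempfile

# Write a small stand-in for uuu.py (hypothetical contents) to a temp directory.
module_dir = tempfile.mkdtemp()
with open(os.path.join(module_dir, "uuu_demo.py"), "w") as f:
    f.write("log = []\n")
    f.write("for i in range(3):\n")
    f.write("    log.append(i)   # top-level code runs during import\n")

sys.path.insert(0, module_dir)

order = ["main: before import"]
uuu_demo = importlib.import_module("uuu_demo")   # same effect as `import uuu_demo`
order.append("main: after import")

print(order)
print(uuu_demo.log)
```

The two files therefore run one after the other, not side by side. If `uuu.py` ended in a `while (True):` loop, the import would never return and the rest of `main.py` would not execute, which is why doing both jobs inside one loop is the more likely route.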

-

RE: Why does the face-tracking program print the same result on every loop when all I added was printing the face coordinates? (posted in OpenMV Cam)

Serial output:

    {"size":54, "threshold":10, "normalized":1} matched:18 dt:0
    (46, 8, 182, 182)
    {"size":50, "threshold":10, "normalized":1} matched:17 dt:0
    (46, 8, 182, 182)
    {"size":53, "threshold":10, "normalized":1} matched:18 dt:0
    (46, 8, 182, 182)
    {"size":52, "threshold":10, "normalized":1} matched:17 dt:0
    (46, 8, 182, 182)
    {"size":56, "threshold":10, "normalized":1} matched:18 dt:0
    (46, 8, 182, 182)
    {"size":62, "threshold":10, "normalized":1} matched:18 dt:0
    (46, 8, 182, 182)
    {"size":55, "threshold":10, "normalized":1} matched:15 dt:0
    (46, 8, 182, 182)
    {"size":70, "threshold":10, "normalized":1} matched:10 dt:0
    (46, 8, 182, 182)
    {"size":85, "threshold":10, "normalized":1} matched:11 dt:0
    (46, 8, 182, 182)
    {"size":61, "threshold":10, "normalized":1} matched:9 dt:0
    (46, 8, 182, 182)
    {"size":58, "threshold":10, "normalized":1} matched:8 dt:0
    (46, 8, 182, 182)
    {"size":49, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":51, "threshold":10, "normalized":1} matched:8 dt:0
    (46, 8, 182, 182)
    {"size":30, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":44, "threshold":10, "normalized":1} matched:6 dt:0
    (46, 8, 182, 182)
    {"size":45, "threshold":10, "normalized":1} matched:8 dt:0
    (46, 8, 182, 182)
    {"size":45, "threshold":10, "normalized":1} matched:9 dt:0
    (46, 8, 182, 182)
    {"size":37, "threshold":10, "normalized":1} matched:6 dt:15
    (46, 8, 182, 182)
    {"size":41, "threshold":10, "normalized":1} matched:6 dt:15
    (46, 8, 182, 182)
    {"size":45, "threshold":10, "normalized":1} matched:6 dt:270
    (46, 8, 182, 182)
    {"size":38, "threshold":10, "normalized":1} matched:8 dt:15
    (46, 8, 182, 182)
    {"size":46, "threshold":10, "normalized":1} matched:8 dt:15
    (46, 8, 182, 182)
    {"size":43, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":40, "threshold":10, "normalized":1} matched:7 dt:15
    (46, 8, 182, 182)
    {"size":49, "threshold":10, "normalized":1} matched:9 dt:30
    (46, 8, 182, 182)
    {"size":38, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":63, "threshold":10, "normalized":1} matched:8 dt:0
    (46, 8, 182, 182)
    {"size":53, "threshold":10, "normalized":1} matched:8 dt:0
    (46, 8, 182, 182)
    {"size":49, "threshold":10, "normalized":1} matched:9 dt:0
    (46, 8, 182, 182)
    {"size":43, "threshold":10, "normalized":1} matched:6 dt:0
    (46, 8, 182, 182)
    {"size":47, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":42, "threshold":10, "normalized":1} matched:6 dt:0
    (46, 8, 182, 182)
    {"size":41, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":34, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":21, "threshold":10, "normalized":1} matched:8 dt:0
    (46, 8, 182, 182)
    {"size":29, "threshold":10, "normalized":1} matched:6 dt:0
    (46, 8, 182, 182)
    {"size":24, "threshold":10, "normalized":1} matched:9 dt:0
    (46, 8, 182, 182)
    {"size":29, "threshold":10, "normalized":1} matched:6 dt:0
    (46, 8, 182, 182)
    {"size":23, "threshold":10, "normalized":1} matched:7 dt:0
    (46, 8, 182, 182)
    {"size":29, "threshold":10, "normalized":1} matched:8 dt:0
    (46, 8, 182, 182)
    {"size":29, "threshold":10, "normalized":1} matched:6 dt:0
    (46, 8, 182, 182)
    Traceback (most recent call last):
      File "<stdin>", line 56, in <module>
    Exception: IDE interrupt
    MicroPython v1.9.4-4553-gb4eccdfe3 on 2019-05-02; OPENMV4 with STM32H743

-

RE: Why does the face-tracking program print the same result on every loop when all I added was printing the face coordinates? (posted in OpenMV Cam)

I only added these two lines:

    for face in objects:
        print(face)

-

Why does the face-tracking program print the same result on every loop when all I added was printing the face coordinates? (posted in OpenMV Cam)

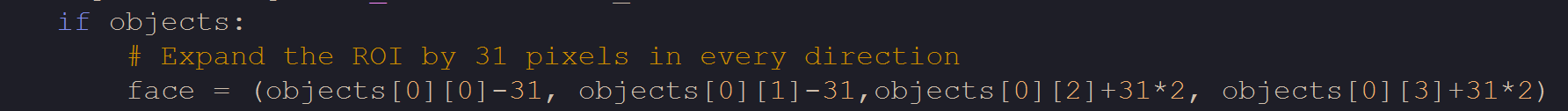

    # Face Tracking Example
    #
    # This example shows off using the keypoints feature of your OpenMV Cam to track
    # a face after it has been detected by a Haar Cascade. The first part of this
    # script finds a face in the image using the frontalface Haar Cascade.
    # After which the script uses the keypoints feature to automatically learn your
    # face and track it. Keypoints can be used to automatically track anything.
    import sensor, time, image

    # Reset sensor
    sensor.reset()
    sensor.set_contrast(3)
    sensor.set_gainceiling(16)
    sensor.set_framesize(sensor.VGA)
    sensor.set_windowing((320, 240))
    sensor.set_pixformat(sensor.GRAYSCALE)

    # Skip a few frames to allow the sensor to settle down
    sensor.skip_frames(time = 2000)

    # Load Haar Cascade
    # By default this will use all stages; fewer stages is faster but less accurate.
    face_cascade = image.HaarCascade("frontalface", stages=25)
    print(face_cascade)

    # First set of keypoints
    kpts1 = None

    # Find a face!
    while (kpts1 == None):
        img = sensor.snapshot()
        img.draw_string(0, 0, "Looking for a face...")
        # Find faces
        objects = img.find_features(face_cascade, threshold=0.5, scale=1.25)
        if objects:
            # Expand the ROI by 31 pixels in every direction
            face = (objects[0][0]-31, objects[0][1]-31,objects[0][2]+31*2, objects[0][3]+31*2)
            # Extract keypoints using the detected face size as the ROI
            kpts1 = img.find_keypoints(threshold=10, scale_factor=1.1, max_keypoints=100, roi=face)
            # Draw a rectangle around the first face
            img.draw_rectangle(objects[0])

    # Draw keypoints
    print(kpts1)
    img.draw_keypoints(kpts1, size=24)
    img = sensor.snapshot()
    time.sleep(2000)

    # FPS clock
    clock = time.clock()

    while (True):
        clock.tick()
        img = sensor.snapshot()
        # Extract keypoints from the whole frame
        kpts2 = img.find_keypoints(threshold=10, scale_factor=1.1, max_keypoints=100, normalized=True)

        if (kpts2):
            # Match the first set of keypoints with the second one
            c=image.match_descriptor(kpts1, kpts2, threshold=85)
            match = c[6] # c[6] contains the number of matches.
            if (match>5):
                img.draw_rectangle(c[2:6])
                img.draw_cross(c[0], c[1], size=10)
                t=img.draw_rectangle(c[2:6])
            print(kpts2, "matched:%d dt:%d"%(match, c[7]))
            for face in objects:
                print(face)

        # Draw FPS
        img.draw_string(0, 0, "FPS:%.2f"%(clock.fps()))

-
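
A likely explanation for the repeated output, stated as a reading of the script above and not as a confirmed answer from the thread: `objects` is assigned only inside the first `while` loop, and the tracking loop never calls `find_features` again. `for face in objects` therefore prints the rectangle from the initial detection on every pass. The rectangle for the current frame is the one the script already draws, `c[2:6]`. The plain-Python sketch below uses made-up numbers and no camera; the tuple layout of `c` is inferred from how the script indexes it.

```python
# Stand-in data: one detection made before the tracking loop starts.
objects = [(46, 8, 182, 182)]

# Hypothetical per-frame match results, laid out like `c` in the script:
# (cx, cy, x, y, w, h, match_count, dt)
frames = [
    (137, 99, 60, 20, 150, 160, 18, 0),
    (140, 101, 64, 22, 148, 158, 17, 0),
    (150, 110, 75, 30, 145, 155, 9, 15),
]

stale = []
fresh = []
for c in frames:
    for face in objects:          # never reassigned in the loop, so it never changes
        stale.append(face)
    fresh.append(tuple(c[2:6]))   # rectangle matched in the current frame

print(stale)
print(fresh)
```

In the real script this would amount to printing `c[2:6]` inside the tracking loop instead of iterating over `objects`.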

RE: What does this statement in the face-tracking example mean? (posted in OpenMV Cam)

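@kidswong999 I looked at that, but I don't quite understand what [0][0] and [0][1] stand for. In the tutorial video there is only a single index, where 0 is x, 1 is y, 2 is w, and 3 is h, so what is [0][0] here?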
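
A short sketch of the double index, assuming (as the face-tracking example's usage suggests) that `find_features` returns a list of rectangles, each an `(x, y, w, h)` tuple. The first index picks a rectangle from the list and the second picks a field inside it. The values below are made up, and plain Python lists stand in for the camera result.

```python
# Stand-in for the return value of img.find_features(): a list of rectangles.
objects = [(46, 8, 182, 182), (10, 20, 30, 40)]   # made-up values

first = objects[0]        # the first detected rectangle: (x, y, w, h)
x = objects[0][0]         # x of the first rectangle
y = objects[0][1]         # y of the first rectangle
w = objects[0][2]         # width of the first rectangle
h = objects[0][3]         # height of the first rectangle

# The ROI expansion from the example: grow the box by 31 pixels on every side.
face = (x - 31, y - 31, w + 31 * 2, h + 31 * 2)
print(first, face)
```

The single-index form from the tutorial video applies once one rectangle has been picked out, for example inside `for blob in blobs:`, where `blob[0]` is already the x of that rectangle.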