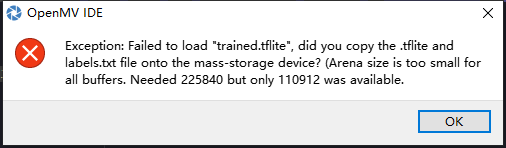

错误是:

Arena size is too small forall buffers.Needed 225840 but only 110912 was available.

这怎么解决

X

x2aq

@x2aq

0

声望

4

楼层

377

资料浏览

0

粉丝

0

关注

x2aq 发布的帖子

-

RE: 这个报错好像是flash不够,这问题怎么解决?发布在 OpenMV Cam

-

运行时卡顿帧率只有几这么解决?发布在 OpenMV Cam

from pyb import UART,LED ###串口要用 import json import ustruct ###串口要用 LED_R = pyb.LED(1) # Red LED = 1, Green LED = 2, Blue LED = 3, IR LEDs = 4. LED_G = pyb.LED(2) LED_B = pyb.LED(3) LED_R.off() LED_G.off() LED_B.off() uart = UART(1, 115200) # init with given baudrate uart.init(115200, bits=8,parity=None, stop=1) # init with given parameters def mask_face(): net = None labels = None try: # load the model, alloc the model file on the heap if we have at least 64K free after loading net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024))) except Exception as e: print(e) raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') try: labels = [line.rstrip('\n') for line in open("labels.txt")] except Exception as e: raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')') clock = time.clock() clock.tick() img = sensor.snapshot() # default settings just do one detection... change them to search the image... for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5): print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect()) img.draw_rectangle(obj.rect()) # This combines the labels and confidence values into a list of tuples predictions_list = list(zip(labels, obj.output())) for i in range(len(predictions_list)): print("%s = %f" % (predictions_list[i][0], predictions_list[i][1])) uart.write("mask:%.2f" % predictions_list[1][1]) print(clock.fps(), "fps") LED_G.on() def min(pmin, a, s): global num if a<pmin: pmin=a num=s return pmin def people_shibie(): #SUB = "s1" NUM_SUBJECTS = 2 #图像库中不同人数,一共2人 NUM_SUBJECTS_IMGS = 20 #每人有20张样本图片 # 拍摄当前人脸。 img = sensor.snapshot() #img = image.Image("singtown/%s/1.pgm"%(SUB)) d0 = img.find_lbp((0, 0, img.width(), img.height())) #d0为当前人脸的lbp特征 img = None pmin = 999999 num=0 for s in range(1, NUM_SUBJECTS+1): dist = 0 for i in range(2, NUM_SUBJECTS_IMGS+1): img = image.Image("singtown/s%d/%d.pgm"%(s, i)) d1 = img.find_lbp((0, 0, img.width(), img.height())) #d1为第s文件夹中的第i张图片的lbp特征 dist += image.match_descriptor(d0, d1)#计算d0 d1即样本图像与被检测人脸的特征差异度。 print("Average dist for subject %d: %d"%(s, dist/NUM_SUBJECTS_IMGS)) pmin = min(pmin, dist/NUM_SUBJECTS_IMGS, s)#特征差异度越小,被检测人脸与此样本更相似更匹配。 print(pmin) print(num) # num为当前最匹配的人的编号。 uart.write("face:%d" % num) def main(): mode = 0 omode = 1 while(True): if mode == 0: if omode == 1: omode = 0 sensor.reset() # Initialize the camera sensor. sensor.set_pixformat(sensor.GRAYSCALE) # or sensor.GRAYSCALE sensor.set_framesize(sensor.B128X128) # or sensor.QQVGA (or others) sensor.set_windowing((92,112)) sensor.skip_frames(10) # Let new settings take affect. sensor.skip_frames(time = 5000) #等待5s people_shibie() else: if omode == 0: omode = 1 sensor.reset() # Reset and initialize the sensor. sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE) sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240) sensor.set_windowing((240, 240)) # Set 240x240 window. sensor.skip_frames(time=2000) # Let the camera adjust. mask_face() if uart.read() != None: print("Change") mode = not mode main()