One more issue: I have two OpenMV4 Plus boards, and the one that shows the error is not recognized by the computer when an SD card is inserted.

Posts by wxgv

OpenMV4 Plus throws an error when running standalone (posted in OpenMV Cam)

The script runs normally from the IDE, but when the board runs standalone an `ERROR_log` file is created.

The error message is `SDRAM failed testing!`

The code is as follows:

```python
enable_lens_corr = False  # turn on for straighter lines...

import sensor, image, time
from pyb import UART
import ujson

sensor.reset()
sensor.set_contrast(3)
sensor.set_pixformat(sensor.GRAYSCALE)  # grayscale is faster
sensor.set_framesize(sensor.QVGA)
sensor.skip_frames(time=2000)
clock = time.clock()
uart = UART(3, 9600)

# All line objects have a `theta()` method to get their rotation angle in degrees.
# You can filter lines based on their rotation angle.
min_degree = 0
max_degree = 179

# Most recent left/right line as an (x1, y1, x2, y2) tuple. Only the latest line
# is ever used, so one tuple per side replaces eight lists that grew every frame
# and could eventually exhaust the heap during a long standalone run.
left_line = None
right_line = None

# All lines also have `x1()`, `y1()`, `x2()`, and `y2()` methods to get their end-points
# and a `line()` method to get all the above as one 4 value tuple for `draw_line()`.

while True:
    clock.tick()
    img = sensor.snapshot()
    if enable_lens_corr:
        img.lens_corr(0.7)  # for 2.8mm lens...

    # `threshold` controls how many lines in the image are found. Only lines with
    # edge difference magnitude sums greater than `threshold` are detected...

    # More about `threshold` - each pixel in the image contributes a magnitude value
    # to a line. The sum of all contributions is the magnitude for that line. Then
    # when lines are merged their magnitudes are added together. Note that `threshold`
    # filters out lines with low magnitudes before merging. To see the magnitude of
    # un-merged lines set `theta_margin` and `rho_margin` to 0...

    # `theta_margin` and `rho_margin` control merging similar lines. If two lines
    # theta and rho value differences are less than the margins then they are merged.

    for l in img.find_lines(threshold=2800, theta_margin=35, rho_margin=35):
        if min_degree <= l.theta() <= max_degree:
            img.draw_line(l.line(), color=(255, 0, 0))
            # print(l)
            if l.x1() < 125 and l.theta() < 45:
                left_line = l.line()   # candidate left boundary
            if l.x1() > 125 and l.theta() > 135:
                right_line = l.line()  # candidate right boundary

    if left_line and right_line:
        lx1, ly1, lx2, ly2 = left_line
        rx1, ry1, rx2, ry2 = right_line
        # Each line in general form A*x + B*y + C = 0
        A_1 = ly2 - ly1
        B_1 = lx1 - lx2
        C_1 = lx1 * (ly1 - ly2) + ly1 * (lx2 - lx1)
        A_2 = ry2 - ry1
        B_2 = rx1 - rx2
        C_2 = rx1 * (ry1 - ry2) + ry1 * (rx2 - rx1)
        denom = B_2 * A_1 - B_1 * A_2
        if denom != 0:  # parallel lines never meet; avoids ZeroDivisionError
            cross_x = (B_1 * C_2 - B_2 * C_1) / denom
            if 0 < cross_x < 320:  # keep intersections inside the QVGA width
                rcx = cross_x - 125  # horizontal offset from x = 125
                print(rcx)
                rxy = {"rx": rcx, "ry": 0}
                uart.write(ujson.dumps(rxy) + '\r\n')

    # print("FPS %f" % clock.fps())

# About negative rho values:
#
# A [theta+0:-rho] tuple is the same as [theta+180:+rho].
```
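The `cross_x` step in this script writes each selected line in the general form A*x + B*y + C = 0 (the `A_1`/`B_1`/`C_1` and `A_2`/`B_2`/`C_2` terms) and solves the two equations for x. A minimal standalone sketch of just that step, in plain Python that should also run under MicroPython, is shown below; `line_coeffs` and `intersect_x` are hypothetical helper names, and lines are assumed to be `(x1, y1, x2, y2)` tuples like those returned by `line()`. It returns `None` rather than dividing by zero when the two lines are parallel.

```python
def line_coeffs(x1, y1, x2, y2):
    """Return (A, B, C) so that A*x + B*y + C == 0 passes through both endpoints.

    These are the same expressions as A_1, B_1, C_1 in the script.
    """
    a = y2 - y1
    b = x1 - x2
    c = x1 * (y1 - y2) + y1 * (x2 - x1)
    return a, b, c


def intersect_x(line_a, line_b):
    """Return the x where the two (infinite) lines cross, or None if they are parallel.

    Each argument is an (x1, y1, x2, y2) tuple.
    """
    a1, b1, c1 = line_coeffs(*line_a)
    a2, b2, c2 = line_coeffs(*line_b)
    denom = b2 * a1 - b1 * a2  # zero exactly when the lines are parallel
    if denom == 0:
        return None
    return (b1 * c2 - b2 * c1) / denom


# Two lane-like lines converging above the image: they meet at x = 160.
print(intersect_x((60, 240, 140, 0), (260, 240, 180, 0)))  # 160.0
# Two vertical lines are parallel, so there is no intersection.
print(intersect_x((10, 0, 10, 100), (200, 0, 200, 100)))   # None
```

If those two lines were the selected left and right lines, the script would send `rx = 160.0 - 125 = 35.0`. Checking the denominator first matters on the camera: an uncaught `ZeroDivisionError` would stop the script.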

OpenMV4 Plus detects too few keypoints after switching to the MT9V034 module (posted in OpenMV Cam)

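After replacing the stock camera module with an MT9V034, far fewer keypoints are detected.

The code is the tutorial example with almost no parameter changes. Even with `threshold` set as low as 1, only a few points are detected.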

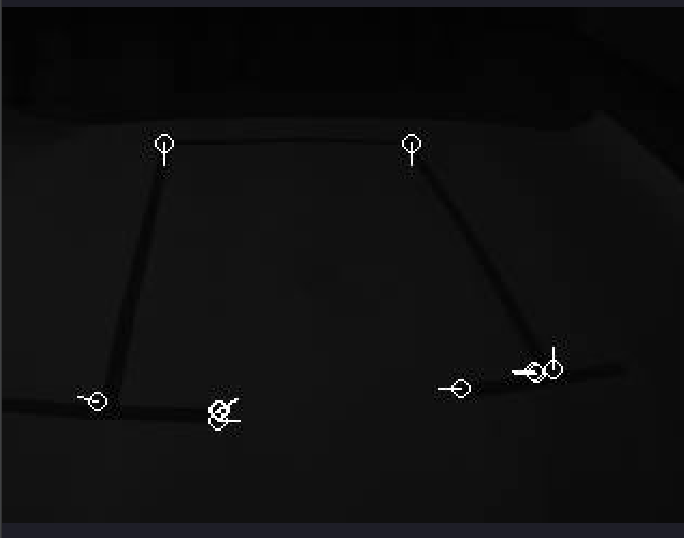

The image briefly goes dark while keypoints are being detected (see the image).

How can I fix this?

```python
import sensor, time, image
from pyb import UART
import ujson

# Reset sensor
sensor.reset()

# Sensor settings
sensor.set_contrast(3)
sensor.set_gainceiling(16)
sensor.set_pixformat(sensor.GRAYSCALE)
sensor.set_framesize(sensor.VGA)
#sensor.set_windowing((320, 240))
uart = UART(3, 9600)
sensor.skip_frames(time=10)
#sensor.set_auto_gain(True, value=100)

def draw_keypoints(img, kpts):
    if kpts:
        print(kpts)
        img.draw_keypoints(kpts)
        img = sensor.snapshot()
        time.sleep_ms(1000)  # pause for 1 s so the keypoints stay visible

kpts1 = None
# NOTE: uncomment to load a keypoints descriptor from file
#kpts1 = image.load_descriptor("/parking_lot.orb")
img = sensor.snapshot()
#draw_keypoints(img, kpts1)

clock = time.clock()
while True:
    clock.tick()
    img = sensor.snapshot()
    if kpts1 is None:
        # NOTE: By default find_keypoints returns multi-scale keypoints extracted from an image pyramid.
        # The documented keyword is `normalized`, not `normalize`.
        kpts1 = img.find_keypoints(normalize=True, max_keypoints=150, threshold=1, scale_factor=1.5)
        draw_keypoints(img, kpts1)
    else:
        # NOTE: When extracting keypoints to match the first descriptor, we use normalized=True to extract
        # keypoints from the first scale only, which will match one of the scales in the first descriptor.
        kpts2 = img.find_keypoints(max_keypoints=150, threshold=3, normalized=True)
        if kpts2:
            match = image.match_descriptor(kpts1, kpts2, threshold=90)
            if match.count() > 10:
                # If we have at least n "good matches"
                # Draw bounding rectangle and cross.
                img.draw_rectangle(match.rect())
                img.draw_cross(match.cx(), match.cy(), size=10)
                # Send the match centre over UART as one JSON object per line
                lcx = match.cx()
                lcy = match.cy()
                lxy = {"lx": lcx, "ly": lcy}
                uart.write(ujson.dumps(lxy) + '\r\n')
            print(kpts2, "matched:%d dt:%d" % (match.count(), match.theta()))
            # NOTE: uncomment if you want to draw the keypoints
            #img.draw_keypoints(kpts2, size=KEYPOINTS_SIZE, matched=True)

    # Draw FPS
    img.draw_string(0, 0, "FPS:%.2f" % (clock.fps()))
```
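Both scripts send their results over UART 3 as one JSON object per line, terminated by `\r\n` (`{"rx": ..., "ry": 0}` from the line script and `{"lx": ..., "ly": ...}` from the keypoint script). The posts do not say what is on the other end of the UART; assuming it runs Python, a minimal sketch of reassembling and decoding that stream from arbitrary byte chunks could look like this. `LineJsonReader` is a hypothetical name, and the standard `json` module stands in for `ujson`.

```python
import json


class LineJsonReader:
    """Reassemble '\\r\\n'-terminated JSON messages from arbitrary byte chunks."""

    def __init__(self):
        self._buf = b""

    def feed(self, chunk):
        """Append raw bytes and return a list of dicts for every complete line."""
        self._buf += chunk
        messages = []
        while b"\r\n" in self._buf:
            line, self._buf = self._buf.split(b"\r\n", 1)
            if not line:
                continue  # skip empty lines
            try:
                messages.append(json.loads(line.decode("utf-8")))
            except ValueError:
                pass  # drop a corrupted frame instead of stopping the reader
        return messages


reader = LineJsonReader()
print(reader.feed(b'{"lx": 160, "l'))  # [] (message not complete yet)
print(reader.feed(b'y": 120}\r\n{"rx": 35.0, "ry": 0}\r\n'))
# [{'lx': 160, 'ly': 120}, {'rx': 35.0, 'ry': 0}]
```

Buffering until `\r\n` matters because a single serial read can return only part of a message; catching `ValueError` (which covers both JSON and UTF-8 decode errors) means one garbled frame is skipped rather than crashing the receiver.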

How can OpenMV recognize a parking space? (posted in OpenMV Cam)

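I'm using a wide-angle camera with a 1.7 mm lens.

I need to recognize parking spaces like the one shown in the image.

Because the platform moves during recognition, I did not use template matching.

I have tried rectangle detection and keypoint detection.

Rectangle detection does not find the rectangle.

Keypoint detection does not work well.

Is there a better approach?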