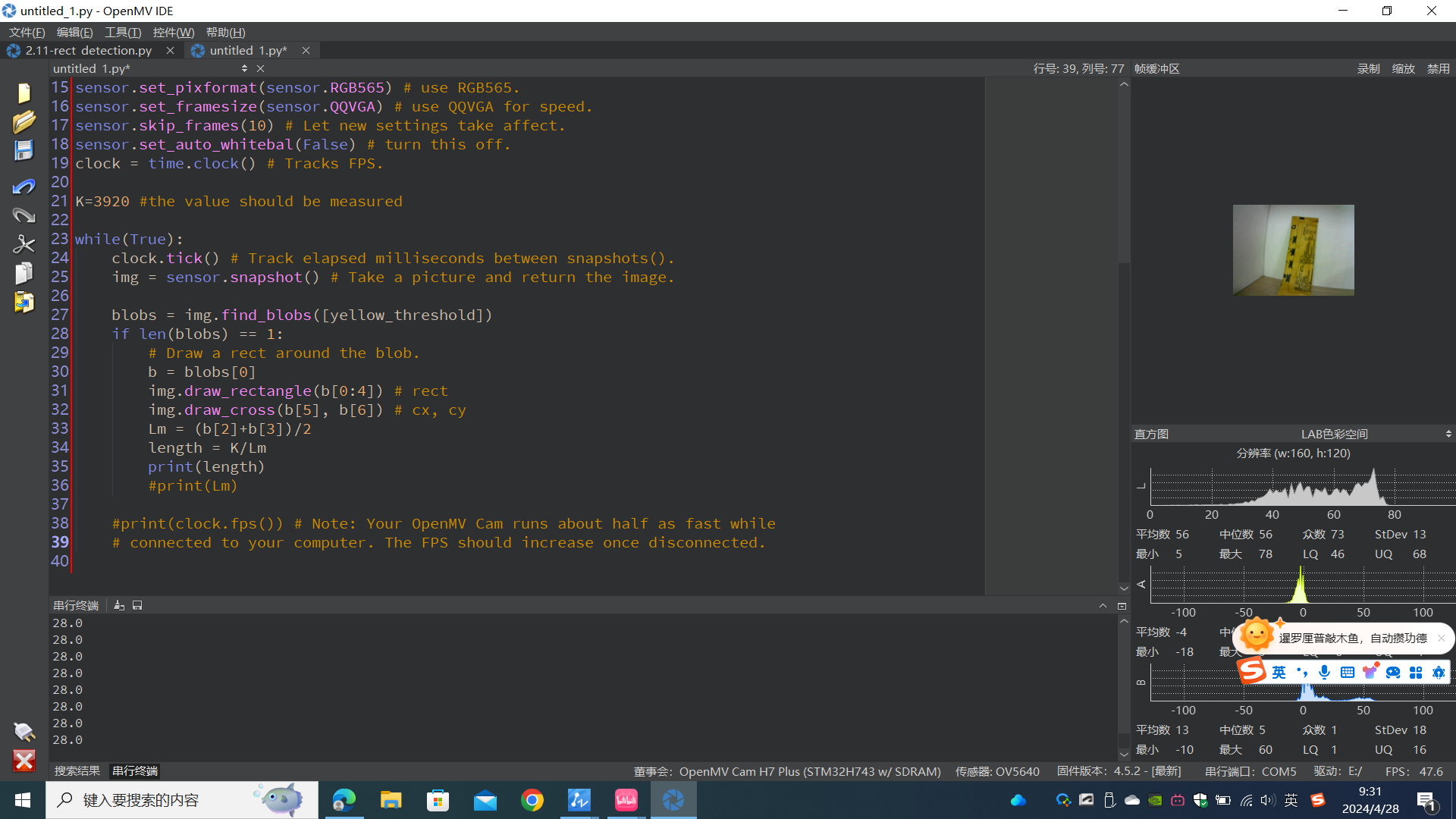

```python
# Measure the distance
#
# This example shows how to measure distance from an object's size in the image.
# This example in particular looks for a yellow ping-pong ball.

import sensor, image, time

# For color tracking to work really well you should ideally be in a very, very,
# very controlled environment where the lighting is constant...
yellow_threshold = (33, 96, -34, 42, -13, 28)
# You may need to tweak the above settings for tracking yellow things...
# Select an area in the framebuffer to copy the color settings.

sensor.reset()                       # Initialize the camera sensor.
sensor.set_pixformat(sensor.RGB565)  # Use RGB565.
sensor.set_framesize(sensor.QQVGA)   # Use QQVGA (160x120) for speed.
sensor.skip_frames(10)               # Let new settings take effect.
sensor.set_auto_whitebal(False)      # Turn auto white balance off.
clock = time.clock()                 # Tracks FPS.

K = 3920  # This value must be measured (calibrated) for your own setup.

while True:
    clock.tick()             # Track elapsed milliseconds between snapshots().
    img = sensor.snapshot()  # Take a picture and return the image.
    blobs = img.find_blobs([yellow_threshold])
    if len(blobs) == 1:
        # Draw a rect around the blob.
        b = blobs[0]
        img.draw_rectangle(b.rect())    # rect
        img.draw_cross(b.cx(), b.cy())  # cx, cy
        Lm = (b.w() + b.h()) / 2        # average pixel size of the ball
        length = K / Lm                 # distance is inversely proportional to Lm
        print(length)
        #print(Lm)
```
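In the example, distance is computed as `length = K / Lm`, where `Lm` is the blob's average pixel size, so `K` can be obtained from a single reading at a known distance: `K = known_distance * Lm`. The plain-Python sketch below (it runs without a camera) shows that calibration step and the resulting estimate. The function names `calibrate_k` and `estimate_distance` and the 20 cm / 40 x 38 px reading are hypothetical; the result comes out in whatever unit the calibration distance was measured in.

```python
def calibrate_k(known_distance, pixel_w, pixel_h):
    """Return K for length = K / Lm, from one reading taken at a known distance."""
    lm = (pixel_w + pixel_h) / 2  # average pixel size, computed as in the example
    return known_distance * lm


def estimate_distance(k, pixel_w, pixel_h):
    """Estimate distance from a blob's pixel width and height (same unit as calibration)."""
    lm = (pixel_w + pixel_h) / 2
    if lm == 0:
        raise ValueError("blob has zero size")
    return k / lm


# Hypothetical calibration reading: ball 20 cm away, blob measures 40 x 38 px.
k = calibrate_k(20, 40, 38)
print(k)                             # 780.0
print(estimate_distance(k, 20, 19))  # 40.0: half the pixel size, twice the distance
```

Because the estimate depends only on `Lm`, the printed value should change whenever the blob's pixel size changes. If it stays constant while the ball moves, it may be worth checking that the detected blob really is the ball and not, for example, a threshold match covering most of the frame.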

Posts by tesv

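---

After running this example, when I move the object closer to or farther from the camera, the value returned over the serial port does not change. Why is that? (posted in OpenMV Cam)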

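---

RE: Are the w and h in the printed (x, y, w, h) the width and height of the measured object in pixels? If so, how do I convert them to the object's actual size? (posted in OpenMV Cam)

Also, with the example above, what the camera captures is the entire frame, not a box the size of the object.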

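---

RE: Are the w and h in the printed (x, y, w, h) the width and height of the measured object in pixels? If so, how do I convert them to the object's actual size? (posted in OpenMV Cam)

After running this example, when I move the object closer to or farther from the camera, the value returned over the serial port does not change. Why is that?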

---

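Are the w and h in the printed (x, y, w, h) the width and height of the measured object in pixels? If so, how do I convert them to the object's actual size? (posted in OpenMV Cam)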

```python
# Find the largest rectangle
# and draw lines along its border.
import sensor, image, time

# Camera initialization
sensor.reset()
sensor.set_pixformat(sensor.GRAYSCALE)  # grayscale image format
sensor.set_framesize(sensor.QQQVGA)     # 80x60 frame size
sensor.skip_frames(time=2000)           # wait for the settings to take effect
clock = time.clock()                    # tracks the frame rate

while True:
    clock.tick()
    img = sensor.snapshot().lens_corr(1.8)
    # Use find_rects() to find rectangles in the image.
    rects = img.find_rects(threshold=10000)
    # Variables holding the largest rectangle found so far.
    max_area = 0
    max_rect = None
    # Go through all rectangles and keep the one with the largest area.
    for rect in rects:
        area = rect.w() * rect.h()  # area of the current rectangle
        # Update if this rectangle is larger than the previous maximum.
        if area > max_area:
            max_area = area
            max_rect = rect
    # If a largest rectangle was found, draw its border.
    if max_rect is not None:
        corners = max_rect.corners()  # the rectangle's four corner points
        # Draw the four edges, joining each corner to the next one.
        for i in range(len(corners)):
            start_point = corners[i]
            end_point = corners[(i + 1) % 4]
            img.draw_line(start_point[0], start_point[1],
                          end_point[0], end_point[1], color=255)
        print(corners)
```
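The "keep the largest rectangle, then join its corners" logic in the loop above does not depend on the camera, so it can be sketched in plain Python. Here `Rect` is a hypothetical `namedtuple` standing in for OpenMV's rect objects (which expose `w()`, `h()` and `corners()` as methods rather than fields), and `largest_rect` / `border_segments` are illustrative names, not library functions.

```python
from collections import namedtuple

# Hypothetical stand-in for an OpenMV rect object: only width, height and corners.
Rect = namedtuple("Rect", ["w", "h", "corners"])


def largest_rect(rects):
    """Return the rect with the largest w * h, or None if the list is empty."""
    max_area = 0
    max_rect = None
    for rect in rects:
        area = rect.w * rect.h
        if area > max_area:
            max_area = area
            max_rect = rect
    return max_rect


def border_segments(corners):
    """Pair each corner with the next one, wrapping the last corner back to the first."""
    n = len(corners)  # 4 for a rectangle, matching the "% 4" in the loop above
    return [(corners[i], corners[(i + 1) % n]) for i in range(n)]


small = Rect(10, 5, [(0, 0), (10, 0), (10, 5), (0, 5)])
big = Rect(20, 10, [(0, 0), (20, 0), (20, 10), (0, 10)])
for start, end in border_segments(largest_rect([small, big]).corners):
    print(start, "->", end)
```

The modulo on the index is what closes the outline: the last corner is joined back to the first, so four corners yield four edges.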

Why can't the OpenMV example code measure distance and object size? (posted in OpenMV Cam)

Why can't OpenMV measure the distance to an object?

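```python
import sensor, image, time

# Here the object's size is measured by detecting its color.
target_threshold = (91, 65, -15, 33, 89, 47)  # yellow is used as the example here

K = 0.07
# To measure another object's size, place it at the same distance that was used
# when computing the scale factor K (10 cm here).
# Real size = pixel diameter * K.
# The first time, place the ball 10 cm from the camera, read Lm, then K = real size / Lm.
# For example, if at 10 cm the diameter is 43 pixels and the ball is really 3 cm,
# then K = 3 / 43 = 0.07. Use the values measured for your own setup.


# Initialization
def init_setup():
    global clock  # main() uses the clock created here
    sensor.reset()                       # initialize the image sensor
    sensor.set_pixformat(sensor.RGB565)  # color image format
    sensor.set_framesize(sensor.QQVGA)   # 160x120 frame size
    sensor.skip_frames(10)               # skip 10 frames so the settings take effect
    sensor.set_auto_whitebal(False)      # turn off auto white balance
    clock = time.clock()                 # create the clock object


# Main loop
def main():
    while True:
        clock.tick()             # update the FPS clock
        img = sensor.snapshot()  # take a picture and return the image
        blobs = img.find_blobs([target_threshold])  # find the target region
        if len(blobs) == 1:
            b = blobs[0]
            img.draw_rectangle(b.rect())    # draw a box around the target
            img.draw_cross(b.cx(), b.cy())  # draw a cross at the target's center
            Lm = (b.w() + b.h()) / 2        # average pixel size
            w = K * b.w()  # real width from scale factor K and pixel width
            h = K * b.h()  # real height from scale factor K and pixel height
            print('Object width: %s cm' % w)
            print('Object height: %s cm' % h)
            #print(Lm)  # pixel size; when measuring a new object, place it at the
            #           # distance used to compute K (10 cm here)
            #print(clock.fps())  # frame rate; the real FPS is higher, streaming
            #                    # the framebuffer to the IDE slows it down


# Program entry point
if __name__ == '__main__':
    init_setup()
    try:
        main()
    except Exception as e:
        print(e)  # report errors instead of silently swallowing them
```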
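The comments in this example reduce size measurement to two lines of arithmetic: `K = real size / pixel size` at the reference distance, then `real size = K * pixel size`. The plain-Python sketch below reproduces that with the post's own numbers (a 3 cm ball measuring 43 px at 10 cm); the function names `scale_factor` and `real_size` are hypothetical. As the comments stress, the result is only valid when the object sits at the same distance that was used to compute `K`.

```python
def scale_factor(real_size_cm, pixel_size):
    """K = real size / pixel size, measured once at the reference distance."""
    return real_size_cm / pixel_size


def real_size(k, pixel_size):
    """Real size = K * pixel size; only valid at the same reference distance."""
    return k * pixel_size


k = scale_factor(3, 43)     # the post's example: 3 cm ball, 43 px across at 10 cm
print(round(k, 2))          # 0.07
print(real_size(0.07, 43))  # approximately 3.01 cm with the rounded K from the post
```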