import sensor, image, time, os, tf, math, uos, gc
import json
from pyb import UART
from pyb import Pin, Timer

# Fill light: timer 2 at 50 kHz, PWM output on pin P6 (normally no need to change)
light = Timer(2, freq=50000).channel(1, Timer.PWM, pin=Pin("P6"))
light.pulse_width_percent(10)  # brightness, as PWM duty cycle in percent

sensor.reset()                       # reset and initialize the sensor
sensor.set_pixformat(sensor.RGB565)  # pixel format RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # frame size QVGA (320x240)
sensor.set_windowing((320, 240))     # use a 320x240 window
sensor.skip_frames(time=2000)        # give the camera 2 s to settle

uart = UART(3, 115200)  # UART 3, 115200 baud

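net = None
labels = None

# Minimum detection confidence (separates detected objects from the background).
# Do not change this unless you know what you are doing.
min_confidence = 0.5

# Placeholder initial values so the variables exist before the first detection
mjzb = ''   # area field sent over UART
xzb = ''    # x-coordinate field sent over UART
yzb = ''    # y-coordinate field sent over UART
rect_area = 0
center_x = 0
center_y = 0
blobs = 0

# Class names as they appear in labels.txt (雄 = male flower, 雌 = female flower)
str1 = "A雄"
str2 = "B雄"
str3 = "A雌"
str4 = "B雌"

left_roi = [0, 0, 320, 240]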

# Load the model. It is kept on the heap if at least 64 KB of heap would remain
# free after loading; otherwise it is loaded into the frame buffer.
try:
    net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
except Exception as e:
    raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')
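
# The load_to_fb expression above chooses where the model lives: if the
# .tflite file (uos.stat(...)[6] is its size in bytes) would leave less than
# 64 KB of heap free, it goes into the frame buffer instead. The hypothetical
# helper below only restates that rule as a pure function so it can be checked
# off-device; it is a sketch and is not called anywhere in this script.
def should_load_to_fb(model_size, free_heap, reserve=64 * 1024):
    # True -> load into the frame buffer; False -> keep the model on the heap
    return model_size > (free_heap - reserve)

# Example: a 300 KB model with 200 KB of free heap goes to the frame buffer,
# while a 100 KB model fits on the heap with more than 64 KB to spare.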

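try:
    with open("labels.txt") as f:
        # strip() also removes a trailing '\r' left by Windows line endings,
        # which would otherwise make the label comparisons below fail
        labels = [line.strip() for line in f]
except Exception as e:
    raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')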

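# Blob-tracking color thresholds in LAB: (L_min, L_max, A_min, A_max, B_min, B_max).
# Adjust them for the ambient lighting; values can be picked with
# Tools > Machine Vision > Threshold Editor in OpenMV IDE.
yellow_thresholdA = (76, 99, -25, 83, 35, 111)
#yellow_thresholdB = (34,85,-48,77,-8,87)
yellow_thresholdC = (100, 61, -105, 127, 35, 88)
white_threshold = (28, 33, -89, 127, -128, -58)  # color threshold for detecting the box region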
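
# Illustration of how a single LAB pixel is tested against one of the
# six-value threshold tuples above. lab_in_threshold() is a hypothetical helper
# for checking threshold values by hand; it is not how find_blobs() is
# implemented. It sorts each (min, max) pair first, on the assumption that a
# reversed range such as L = (100, 61) in yellow_thresholdC is meant as 61..100.
def lab_in_threshold(lab, threshold):
    # lab is an (L, A, B) tuple; threshold is (L_min, L_max, A_min, A_max, B_min, B_max)
    for i in range(3):
        lo = min(threshold[2 * i], threshold[2 * i + 1])
        hi = max(threshold[2 * i], threshold[2 * i + 1])
        if not lo <= lab[i] <= hi:
            return False
    return True

# Example: (80, 0, 50) lies inside yellow_thresholdA; (50, 0, 50) is too dark.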

pixel_size_mm = 0.1 / 25  # image scale: 0.1 mm per 25 pixels (not used below)
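
# pixel_size_mm is a linear scale (mm per pixel), so a blob area measured in
# pixels converts to mm^2 with the scale squared. The helpers below are a
# hypothetical sketch that assumes the same scale holds everywhere in the frame
# (no lens distortion, flat target at a fixed distance); the script itself
# sends raw pixel counts and never calls them.
def pixels_to_mm(length_px, mm_per_px=0.1 / 25):
    # Length in pixels -> length in millimetres
    return length_px * mm_per_px

def pixels_to_mm2(area_px, mm_per_px=0.1 / 25):
    # Area in pixels -> area in square millimetres
    return area_px * mm_per_px * mm_per_px

# Example: 25 px -> 0.1 mm, and a 25x25 px blob (625 px) -> 0.01 mm^2.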

colors = [
    (255,   0,   0),  # red
    (  0, 255,   0),  # green
    (255, 255,   0),  # yellow
    (  0,   0, 255),  # blue
    (255,   0, 255),  # pink
    (  0, 255, 255),  # cyan
    (255, 255, 255),  # white
]  # add more colors if you detect more than 7 classes at once

clock = time.clock()

sj = 9

def modified_data(data):
    """Zero-pad a value to four digits and return it as UTF-8 bytes.

    Used for the x/y coordinates and the area sent back to the main
    controller board; adjust to match the controller's hand-eye
    coordination routine. Values of 10000 or more are returned unpadded
    and are therefore longer than four digits.
    """
    data = int(data)
    return ('%04d' % data).encode('utf-8')
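
# For each female-flower detection the main loop writes b'st', then xzb, yzb
# and mjzb, i.e. a 14-byte frame such as b'st012300456789'. build_frame() and
# parse_frame() are hypothetical helpers that sketch this framing, e.g. for
# testing a receiver; the real main controller may parse it differently.
# Assumption: every field fits in 4 digits. x (<= 319) and y (<= 239) always
# do, but a blob area can exceed 9999 pixels (up to 320*240 = 76800), which
# would lengthen the frame and break fixed-width parsing.
def build_frame(x, y, area):
    # Same bytes as the four uart.write() calls in the main loop
    return b'st' + ('%04d%04d%04d' % (int(x), int(y), int(area))).encode()

def parse_frame(frame):
    # Returns (x, y, area), or None if the frame is not a 14-byte 'st' frame
    if len(frame) != 14 or frame[:2] != b'st':
        return None
    text = frame.decode()
    return int(text[2:6]), int(text[6:10]), int(text[10:14])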

while(True):

clock.tick()

img = sensor.snapshot()#.lens_corr(1.8)

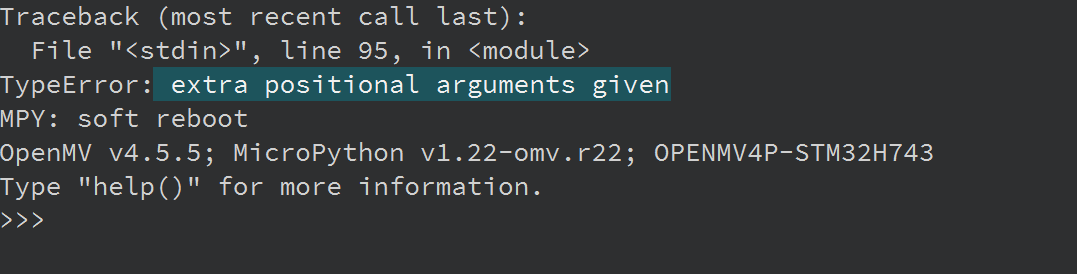

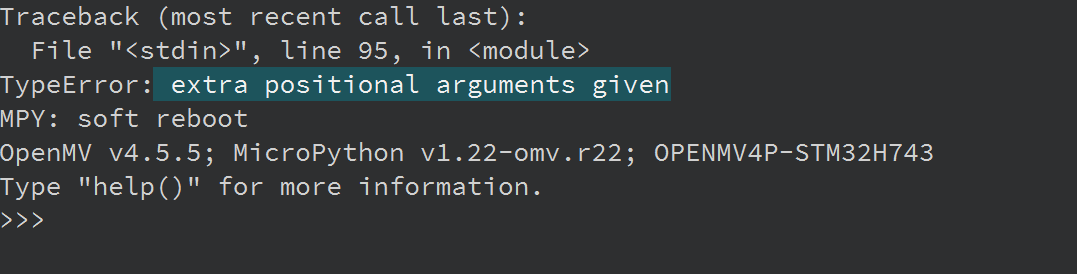

for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):

if (i == 0): continue

if (len(detection_list) == 0): continue

#print(labels[i])

for d in detection_list:

[x, y, w, h] = d.rect()

center_x = math.floor(x + (w / 2))

center_y = math.floor(y + (h / 2))

xzb = modified_data(center_x)

yzb = modified_data(center_y)

img.draw_circle((center_x, center_y, 8), color=(0,0,0), thickness=2)

print('识别到%s' % labels[i])

if labels[i]== 'B雄' or labels[i]== 'A雄' :

print('这是雄花')

rect_area=0

break

elif labels[i]== 'A雌' or labels[i]== 'B雌' :

blobs = img.find_blobs([yellow_thresholdA,yellow_thresholdC])

if blobs:

largest_blob = max(blobs, key = lambda b: b.pixels())

rect = largest_blob.rect()

img.draw_rectangle(rect, color = (0,0,255))

white_region = img.crop(rect).find_blobs([white_threshold], pixels_threshold=100)

if white_region:

largest_white_region = max(white_region, key = lambda b: b.pixels())

rect_area = largest_white_region.pixels()

img.draw_rectangle(largest_white_region.rect(), color = (0, 0, 255))

mjzb = modified_data(rect_area)

print('*******')

uart.write('st')

uart.write(xzb)

uart.write(yzb)

uart.write(mjzb)

print('x==%d\ty==%d\tmj==%d' % (center_x, center_y, 10*rect_area))

print(xzb, yzb, mjzb)

print('这是%s\n' % labels[i])

time.sleep(0.15)