@kidswong999 The frame rate doesn't seem as fast as with line detection.


Posts by rm4a

-

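RE: Hello, I want to determine the four vertices of a rectangle by finding right angles. Is there a way to record, for each frame, the vertices of the two right angles closest to the camera? Posted in OpenMV Cam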

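This code finds right angles, records the four vertices of the rectangle, and averages them to find the rectangle's center. What I want instead is to average the two lowest right-angle vertices to locate the center of the rectangle's bottom edge, but I don't know how to implement that. Could you give me some pointers?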
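One possible way to get the bottom-edge center, sketched in plain Python so it can be tried off the camera first. It assumes the corner points have already been collected as `(x, y)` tuples in image coordinates, where y grows downward, so the "lowest" corners are the ones with the largest y. The function name `bottom_edge_center` is made up for this illustration and is not part of the OpenMV API.

```python
def bottom_edge_center(corners):
    """Return the midpoint of the two corners with the largest y.

    corners: list of (x, y) tuples in image coordinates (y grows downward).
    Returns None when fewer than two corners are given.
    """
    if len(corners) < 2:
        return None
    # Sort by y, largest first, and keep the two corners lowest in the image.
    lowest = sorted(corners, key=lambda p: p[1], reverse=True)[:2]
    cx = (lowest[0][0] + lowest[1][0]) // 2
    cy = (lowest[0][1] + lowest[1][1]) // 2
    return (cx, cy)


# Example with four made-up corner points.
corners = [(100, 50), (220, 55), (90, 180), (230, 190)]
print(bottom_edge_center(corners))  # (160, 185)
```

In the script from this thread, the same idea would replace the four-point average: once four intersections are stored in `x` and `y`, build the list with `list(zip(x, y))` and pass it to the helper.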

-

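Hello, I want to determine the four vertices of a rectangle by finding right angles. Is there a way to record, for each frame, the vertices of the two right angles closest to the camera? Posted in OpenMV Cam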

```python
enable_lens_corr = False  # turn on for straighter lines...

import sensor, image, time

sensor.reset()
sensor.set_pixformat(sensor.GRAYSCALE)  # grayscale is faster
sensor.set_framesize(sensor.QVGA)
sensor.skip_frames(time = 2000)
clock = time.clock()

right_angle_threshold = (60, 90)
binary_threshold = [(0, 180)]
forget_ratio = 0.8
move_threshold = 5
k = 0
x = [0, 0, 0, 0]
y = [0, 0, 0, 0]

def calculate_angle(line1, line2):
    # Use the quadrilateral angle formula to compute the angle between the two lines
    angle = (180 - abs(line1.theta() - line2.theta()))
    if angle > 90:
        angle = 180 - angle
    return angle

def is_right_angle(line1, line2):
    global right_angle_threshold
    # Check whether the angle between the two lines counts as a right angle
    angle = calculate_angle(line1, line2)
    if angle >= right_angle_threshold[0] and angle <= right_angle_threshold[1]:
        # Within the threshold range
        return True
    return False

def find_verticle_lines(lines):
    line_num = len(lines)
    for i in range(line_num - 1):
        for j in range(i, line_num):
            if is_right_angle(lines[i], lines[j]):
                return (lines[i], lines[j])
    return (None, None)

def calculate_intersection(line1, line2):
    # Compute the intersection of the two lines
    a1 = line1.y2() - line1.y1()
    b1 = line1.x1() - line1.x2()
    c1 = line1.x2()*line1.y1() - line1.x1()*line1.y2()

    a2 = line2.y2() - line2.y1()
    b2 = line2.x1() - line2.x2()
    c2 = line2.x2() * line2.y1() - line2.x1()*line2.y2()

    if (a1 * b2 - a2 * b1) != 0 and (a2 * b1 - a1 * b2) != 0:
        cross_x = int((b1*c2-b2*c1)/(a1*b2-a2*b1))
        cross_y = int((c1*a2-c2*a1)/(a1*b2-a2*b1))
        return (cross_x, cross_y)
    return (-1, -1)

def draw_cross_point(cross_x, cross_y):
    img.draw_cross(cross_x, cross_y)
    img.draw_circle(cross_x, cross_y, 5)
    img.draw_circle(cross_x, cross_y, 10)

old_cross_x = 0
old_cross_y = 0

while(True):
    clock.tick()
    img = sensor.snapshot()
    #img.binary(binary_threshold)
    lines = img.find_lines(threshold = 2000, theta_margin = 20, rho_margin = 20, roi=(0, 0, 320,200))

    for line in lines:
        pass
        # img.draw_line(line.line(), color = (255, 0, 0))

    # If there are at least two lines in the frame
    if len(lines) >= 2:
        (line1, line2) = find_verticle_lines(lines)
        if (line1 == None or line2 == None):
            # No perpendicular lines found
            continue

        # Draw the lines
        #img.draw_line(line1.line(), color = (255, 0, 0))
        #img.draw_line(line2.line(), color = (255, 0, 0))

        # Compute the intersection
        (cross_x, cross_y) = calculate_intersection(line1, line2)
        print("cross_x: %d, cross_y: %d"%(cross_x, cross_y))

        if cross_x != -1 and cross_y != -1:
            if(k<4):
                x[k]=cross_x
                y[k]=cross_y
                k=k+1
            if(k==4):
                xz=int((x[0]+x[1]+x[2]+x[3])/4)
                yz=int((y[0]+y[1]+y[2]+y[3])/4)
                draw_cross_point(xz, yz)
                k=0
```

-

Is there a way to find, among all the line segments in the image, the one closest to the bottom of the camera frame? Posted in OpenMV Cam

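Right now I record the x and y values of seven line segments, find the minimum of y1+y2, and then look up x by that entry's position in the array. The result is very jittery, though. Is there a better approach?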
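A minimal sketch of one alternative, not a tested fix for the jitter. It picks the lowest segment directly in each frame and then smooths its height over frames with an exponential moving average. It assumes segments are given as `(x1, y1, x2, y2)` tuples (the shape `l.line()` returns on the camera) and that y grows downward, so the segment nearest the bottom of the frame has the largest y1+y2. The helper names and the `alpha` value are hypothetical.

```python
def lowest_segment(segments):
    """Return the (x1, y1, x2, y2) segment whose midpoint is lowest in the image."""
    if not segments:
        return None
    # Largest y1 + y2 means the midpoint is closest to the bottom of the frame.
    return max(segments, key=lambda s: s[1] + s[3])


def smooth(previous, current, alpha=0.3):
    """Exponential moving average; alpha is a tuning value chosen for illustration."""
    if previous is None:
        return current
    return previous + alpha * (current - previous)


# Example with made-up segments from a single frame.
segments = [(10, 40, 200, 42), (15, 150, 210, 160), (30, 100, 180, 98)]
best = lowest_segment(segments)
mid_y = (best[1] + best[3]) / 2
print(best, mid_y)  # (15, 150, 210, 160) 155.0
```

Keeping the whole tuple together avoids looking up x through a separate array index, and feeding `mid_y` through `smooth` on every frame trades a little lag for a steadier reading.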

-

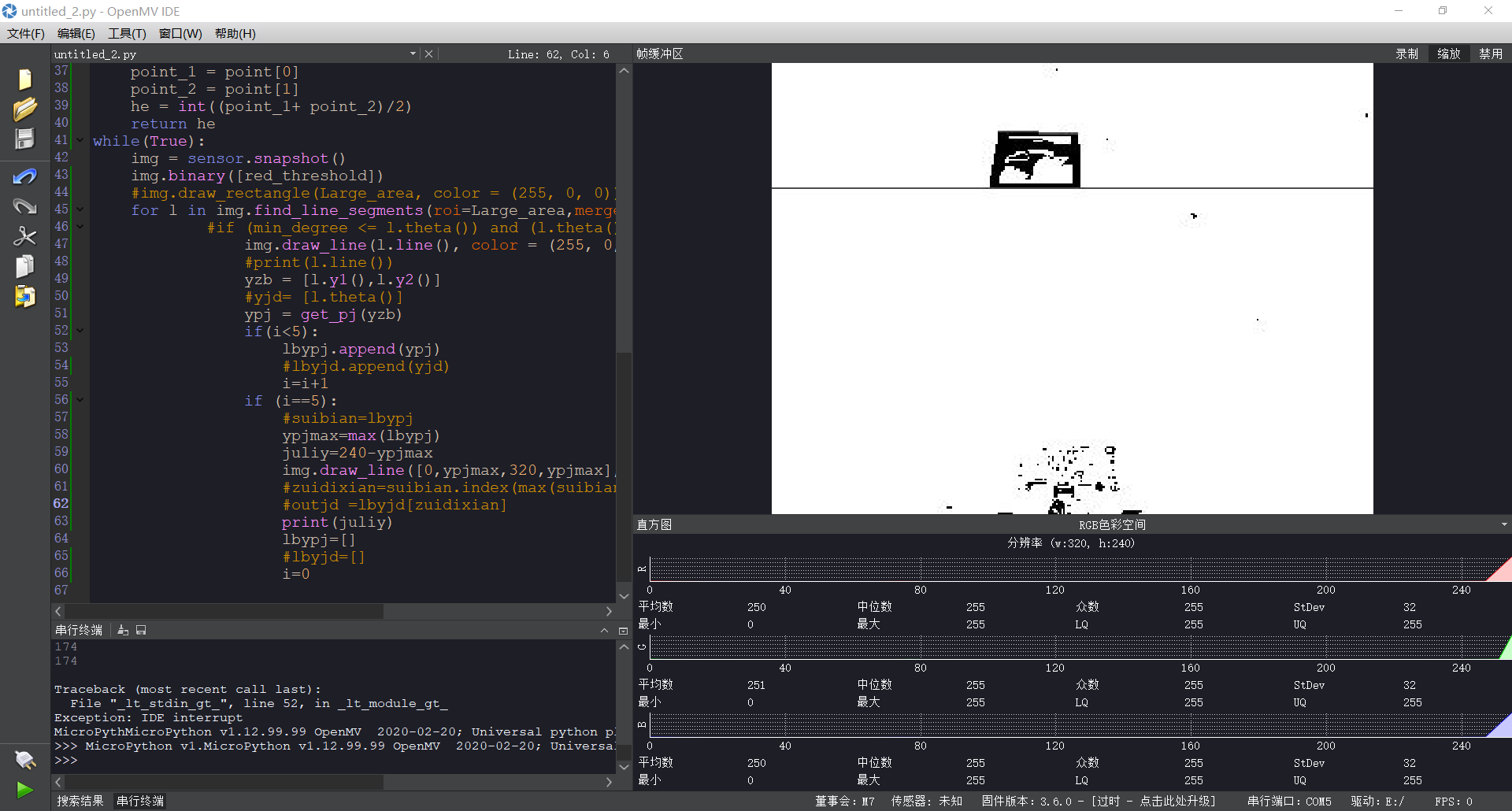

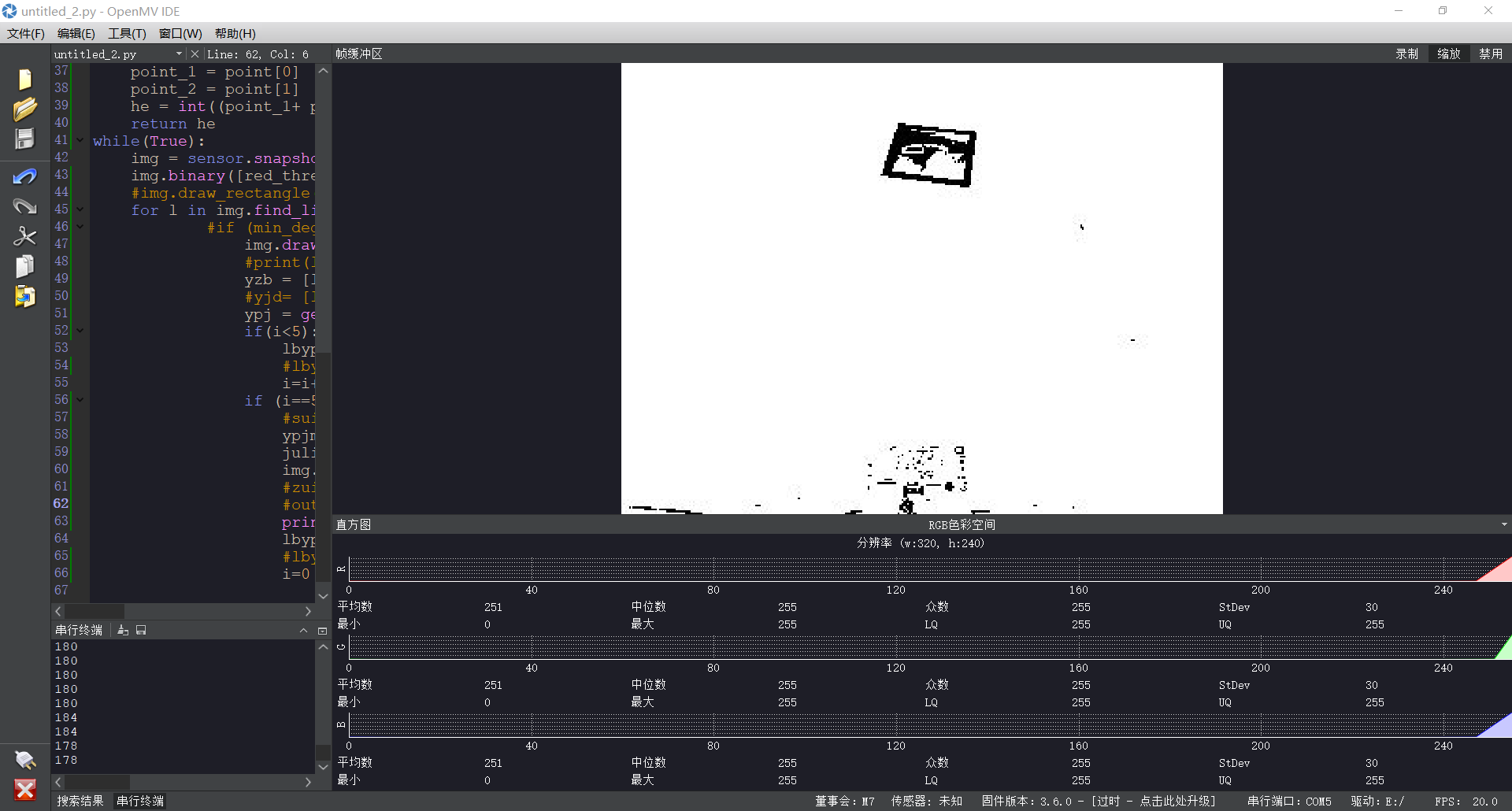

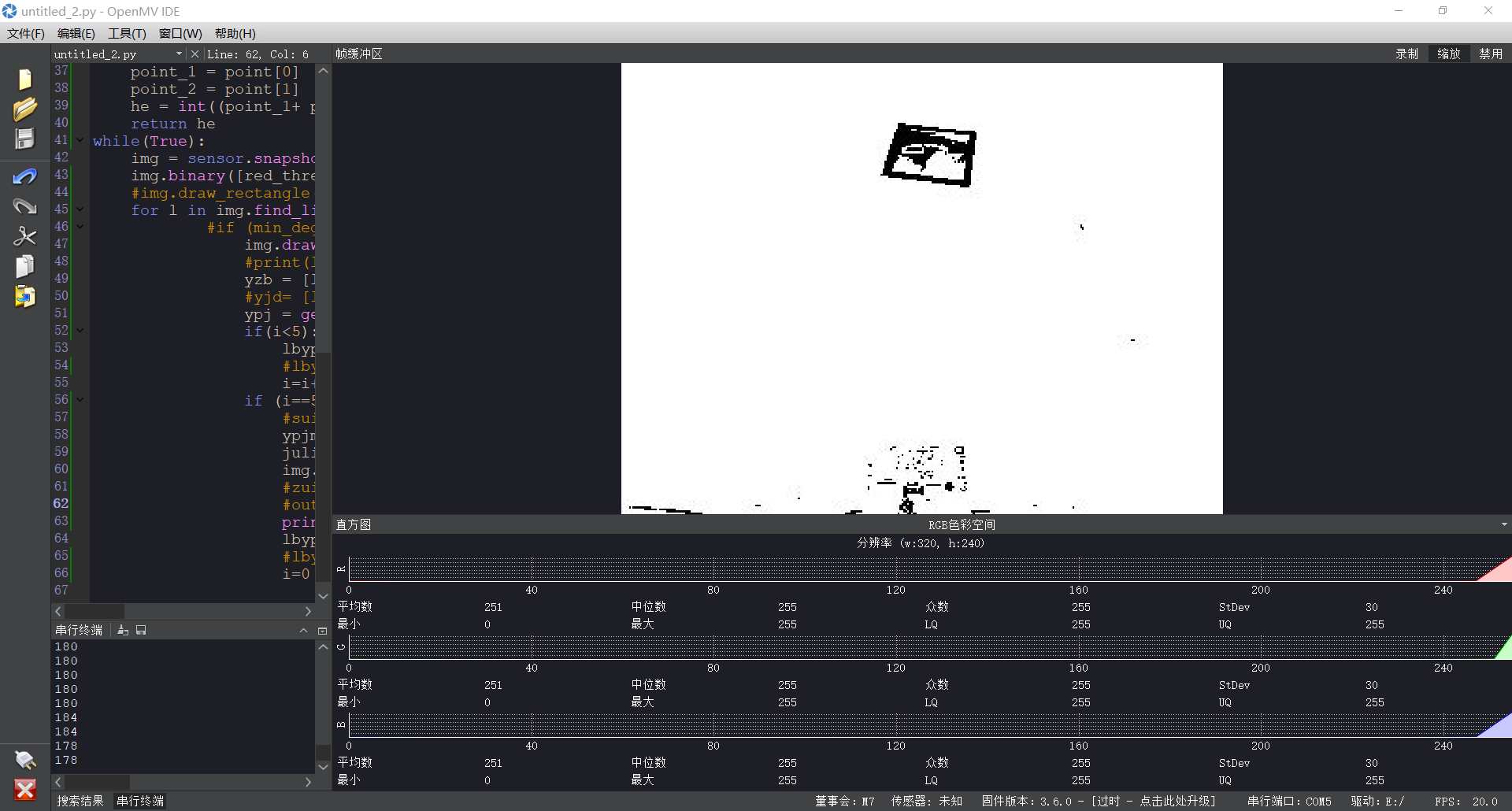

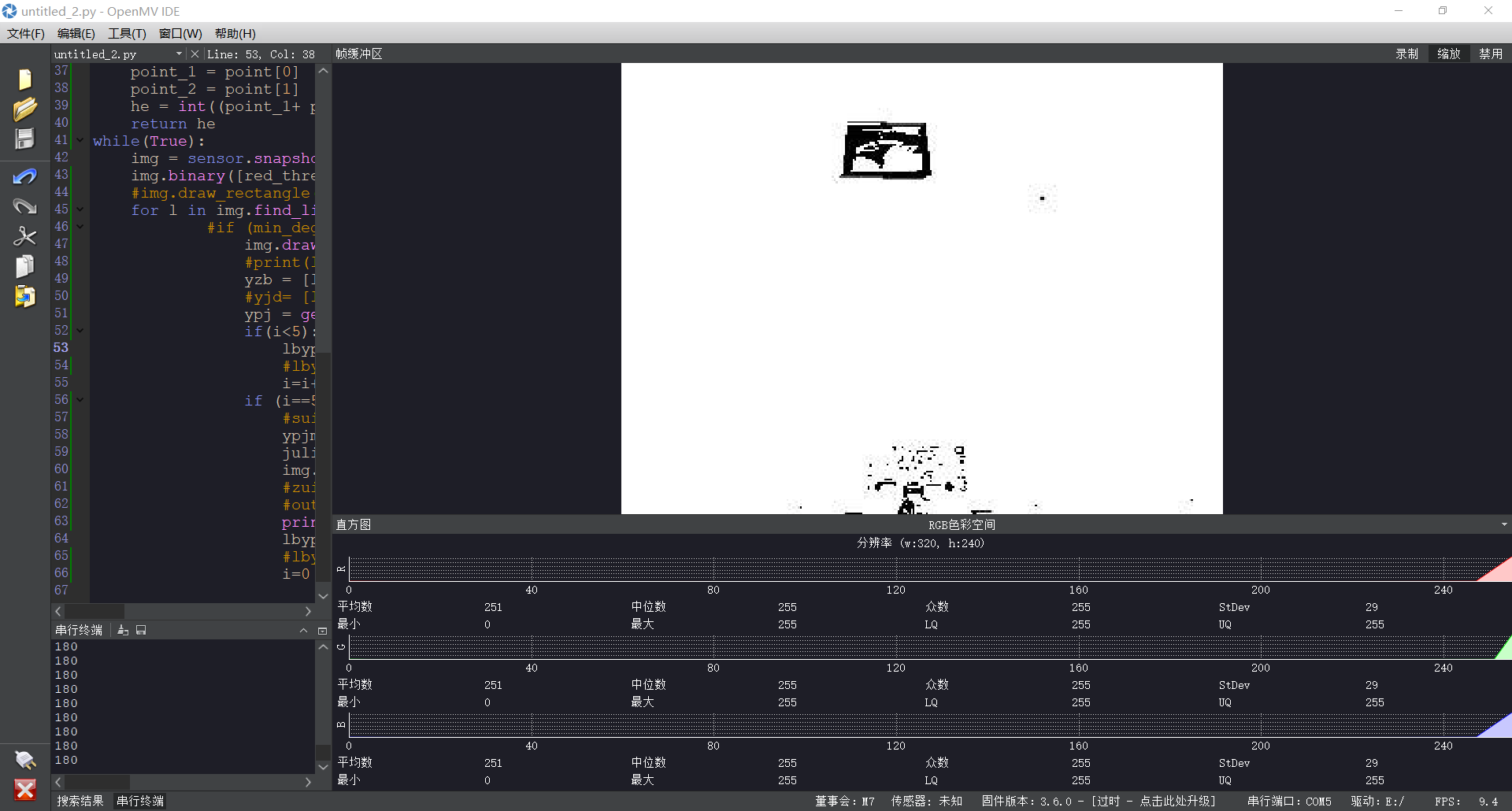

Why are line segments found after binarization when the camera faces the rectangle squarely, but not when it is tilted? Posted in OpenMV Cam

```python
import pyb
from pyb import LED  # import LED
from machine import UART
import sensor, image, time, math, os, tf

uart = UART(1, baudrate=115200)

sensor.reset()
sensor.set_pixformat(sensor.GRAYSCALE)
sensor.set_framesize(sensor.QVGA)
sensor.set_auto_exposure(False, 1000)
sensor.__write_reg(0x03,0x04)
sensor.__write_reg(0x04,0x05)
sensor.__write_reg(0xB2,0x40)
sensor.__write_reg(0xB3,0x00)
sensor.__write_reg(0xB4,0x40)
sensor.__write_reg(0xB5,0x00)
sensor.__write_reg(0x03,0x03)
sensor.__write_reg(0x5B,0x60)
sensor.__write_reg(0x03,0x08)
sensor.set_auto_whitebal(False, (0x80,135,0x80))
sensor.set_brightness(500)
sensor.set_auto_gain(False)
sensor.skip_frames(time = 2000)
clock = time.clock()

Large_area=[0,0,320,180]
min_degree = 45
max_degree = 135
i=0
yzb=[0,0]
he=[]
ypj=[]
yjd=[]
lbypj=[]
lbyjd=[]
suibian=[]
red_threshold = (0,150)

def get_pj(point):
    # Average of the two y coordinates of a segment
    point_1 = point[0]
    point_2 = point[1]
    he = int((point_1+ point_2)/2)
    return he

while(True):
    img = sensor.snapshot()
    img.binary([red_threshold])
    #img.draw_rectangle(Large_area, color = (255, 0, 0))
    for l in img.find_line_segments(roi=Large_area,merge_distance = 10, max_theta_diff = 10):
        #if (min_degree <= l.theta()) and (l.theta() <= max_degree):
        img.draw_line(l.line(), color = (255, 0, 0))
        #print(l.line())
        yzb = [l.y1(),l.y2()]
        #yjd= [l.theta()]
        ypj = get_pj(yzb)
        if(i<5):
            lbypj.append(ypj)
            #lbyjd.append(yjd)
            i=i+1
        if (i==5):
            #suibian=lbypj
            ypjmax=max(lbypj)
            juliy=240-ypjmax
            img.draw_line([0,ypjmax,320,ypjmax], color = (255, 0, 0))
            #zuidixian=suibian.index(max(suibian))
            #outjd =lbyjd[zuidixian]
            print(juliy)
            lbypj=[]
            #lbyjd=[]
            i=0
```

-

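RE: Why do the RGB gains stay unchanged after I set the white balance manually following the example? Images taken on a blue cloth now have a blue cast. How can I get normal-looking colors? Posted in OpenMV Cam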

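```python
import sensor, image, time

sensor.reset()  # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)  # Set frame size to QVGA (320x240)
sensor.skip_frames(time = 2000)  # Wait for the settings to take effect.
clock = time.clock()  # Create a clock object to track the FPS.

# You can control the white balance gains here. The first value is the
# R gain in dB, then the G gain in dB, then the B gain in dB.
#
# Uncomment the following line with your preferred gain values (taken from the printout).
sensor.set_auto_whitebal(False)
# Note: setting the gains to (0.0, 0.0, 0.0) produces values close to zero.
# Don't expect the exact value going in to equal the value coming out.

while(True):
    clock.tick()  # Update the FPS clock.
    rgb_gain_db = (-6.02073, -6.02073, -2.58981)
    img = sensor.snapshot()  # Take a picture and return the image.
    print(clock.fps(), \
        sensor.get_rgb_gain_db())  # Print the current AWB RGB gains.
```

-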

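RE: Why do the RGB gains stay unchanged after I set the white balance manually following the example? Images taken on a blue cloth now have a blue cast. How can I get normal-looking colors? Posted in OpenMV Cam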

@kidswong999 ![0_1645684792362_A7P]UI2452%X])YZOR]@W`8.png](https://fcdn.singtown.com/e73eb70c-6546-434d-91b2-823f3114c5bd.png) Sorry, I'm not sure whether I misunderstood something, but the printed output on my side is still the same. I'm using an OpenART mini.

-

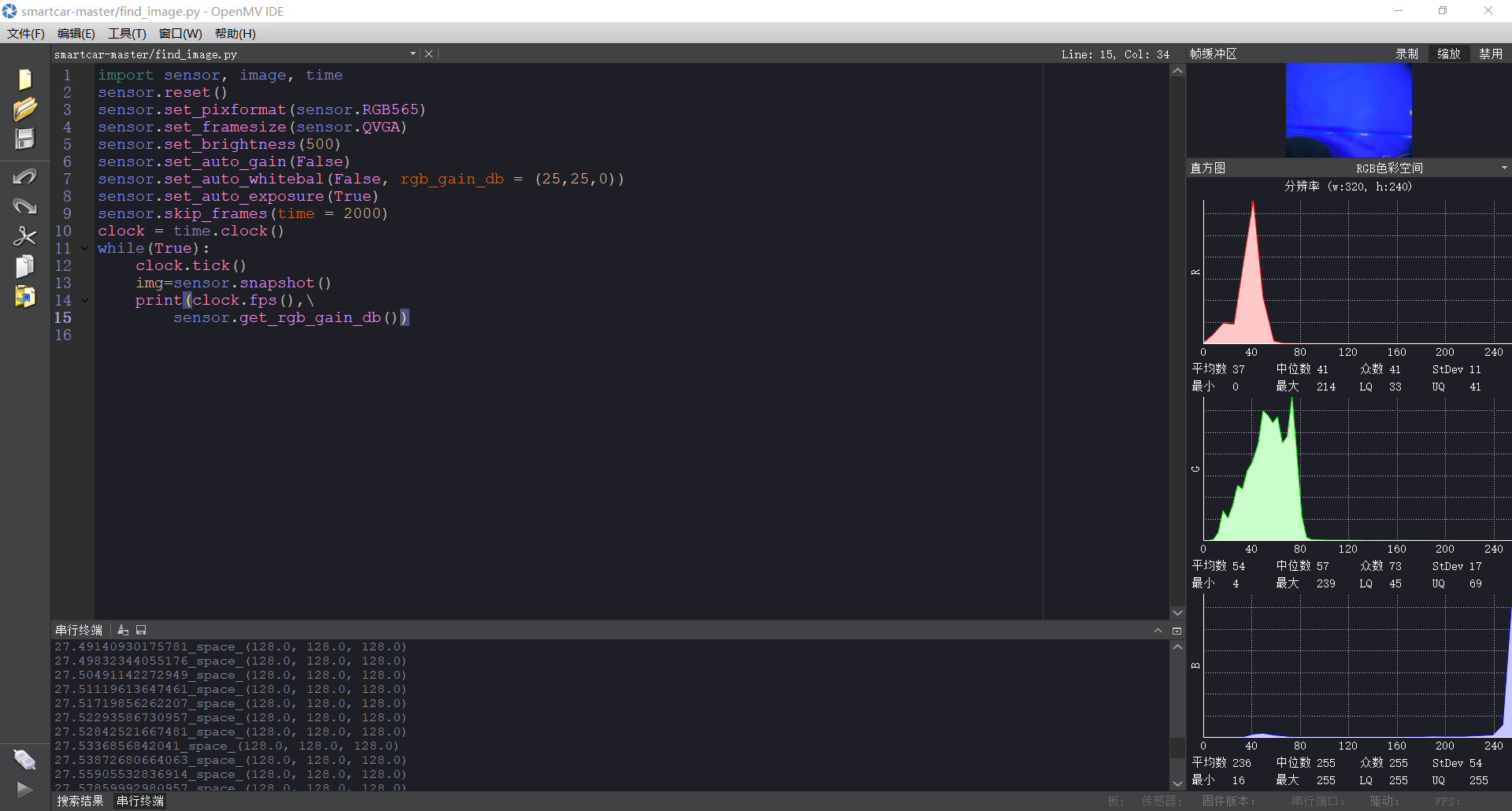

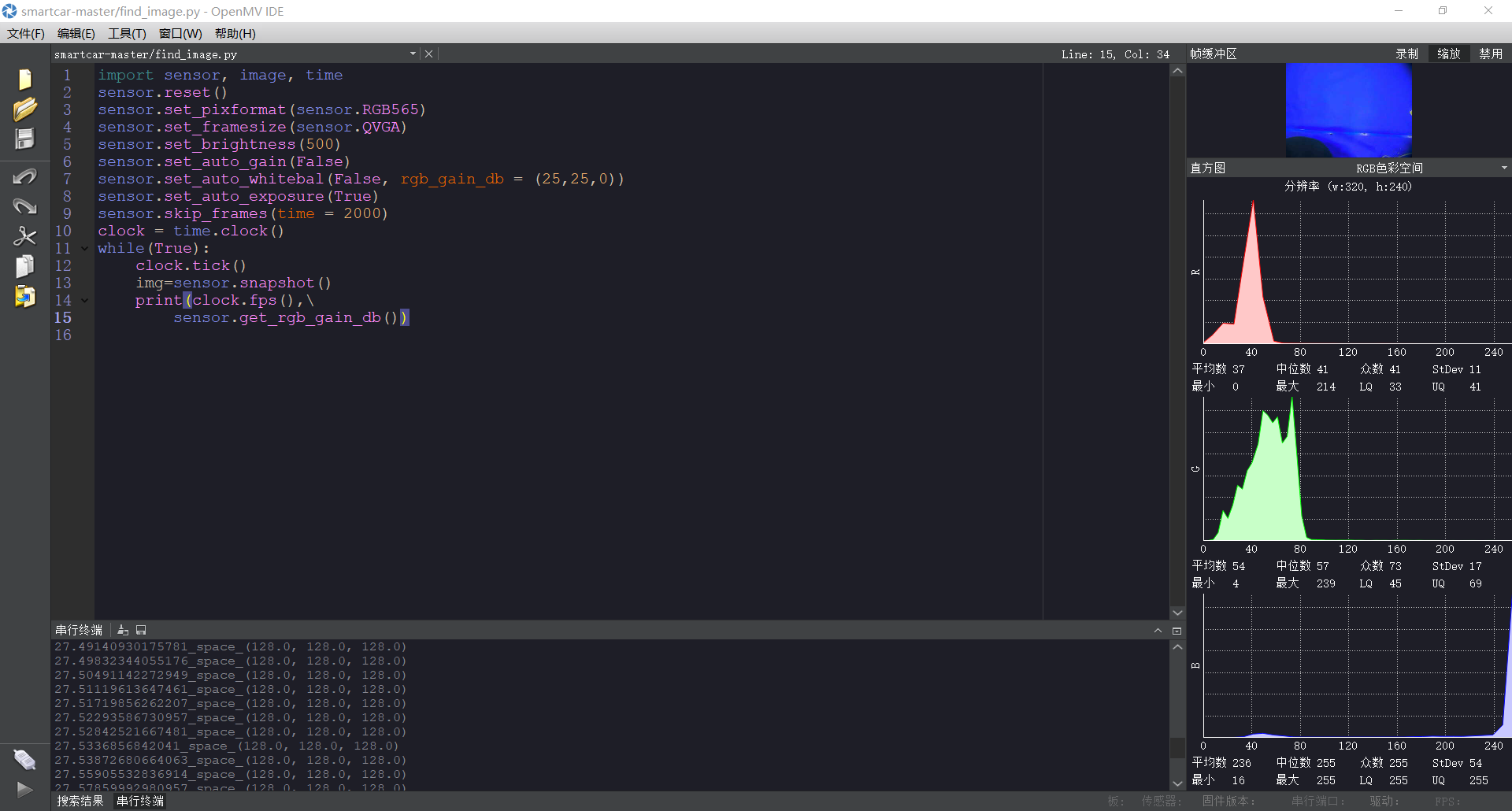

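Why do the RGB gains stay unchanged after I set the white balance manually following the example? Images taken on a blue cloth now have a blue cast. How can I get normal-looking colors? Posted in OpenMV Cam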

```python
import sensor, image, time

sensor.reset()
sensor.set_pixformat(sensor.RGB565)
sensor.set_framesize(sensor.QVGA)
sensor.set_brightness(500)
sensor.set_auto_gain(False)
sensor.set_auto_whitebal(False, rgb_gain_db = (25,25,0))
sensor.set_auto_exposure(True)
sensor.skip_frames(time = 2000)
clock = time.clock()

while(True):
    clock.tick()
    img=sensor.snapshot()
    print(clock.fps(),\
        sensor.get_rgb_gain_db())
```