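@kidswong999 Here is what happens on my side. I have two motors connected. Normally motor 1 rotates first, then motor 2 rotates and returns to its original position, and then motor 1 rotates back to its original position. After the second recognition, however, motor 2 rotates first, and by a larger angle than the one I set.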


Posts by dob6

-

RE: Why do the motors in my program rotate as required only on the first recognition, but not on the second? Posted in OpenMV Cam

-

RE: Why do the motors in my program rotate as required only on the first recognition, but not on the second? Posted in OpenMV Cam

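@kidswong999 I added a print at the end of every if branch and found that the correct branch is entered every time. Where else could I add prints to check how far the program has run?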
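A print at the end of each branch confirms which branch ran, but not the pin state the motors started from. One option is to log a timestamp and the direction-pin levels immediately before every motor command. `trace` is a hypothetical helper, not part of the OpenMV API; on the camera, `time.ticks_ms()` supplies the timestamp and `Pin.value()` called with no argument reads the pin level back.

```python
import time


def ticks_ms():
    """Millisecond timestamp: time.ticks_ms() on MicroPython/OpenMV,
    time.monotonic() when trying the helper on a PC."""
    if hasattr(time, "ticks_ms"):
        return time.ticks_ms()
    return int(time.monotonic() * 1000)


def trace(step, **pins):
    """Print (and return) one log line: timestamp, step name, pin levels.

    Hypothetical debugging helper: pass the direction-pin levels as keyword
    arguments; they are printed sorted by name.
    """
    levels = " ".join("%s=%d" % (name, pins[name]) for name in sorted(pins))
    line = "[%d ms] %s %s" % (ticks_ms(), step, levels)
    print(line)
    return line


# Usage on the camera, with the pin objects from the program:
# trace("motor 1 forward", A0=pinADir0.value(), A1=pinADir1.value())
# chA.pulse_width_percent(100)
```

On a TB6612/L298-style driver, a line showing `A0=0 A1=0` right before motor 1 is switched on would mean no direction is selected at that point.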

-

Why do the motors in my program rotate as required only on the first recognition, but not on the second? Posted in OpenMV Cam

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, pyb, math
from pyb import UART
import json

# Direction pins: A = motor 1, B = motor 2
pinADir0 = pyb.Pin('P3', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)
pinADir1 = pyb.Pin('P2', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)
pinBDir0 = pyb.Pin('P1', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)
pinBDir1 = pyb.Pin('P0', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)
# Initial (forward) direction; this runs only once, before the main loop
pinADir0.value(0)
pinADir1.value(1)
pinBDir0.value(0)
pinBDir1.value(1)

# 1 kHz PWM: channel A on P7 (motor 1), channel B on P8 (motor 2)
tim = pyb.Timer(4, freq=1000)
chA = tim.channel(1, pyb.Timer.PWM, pin=pyb.Pin("P7"))
chB = tim.channel(2, pyb.Timer.PWM, pin=pyb.Pin("P8"))

sensor.reset()                         # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))       # Set 240x240 window.
sensor.skip_frames(time=2000)          # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()
    uart1 = UART(3, 9600)

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        list1 = list(labels)
        list2 = list(obj.output())
        position = list2.index(max(list2))
        print(list1[position])
        output_str = json.dumps(list1[position])  # method 2
        print(output_str[1:2])
        if list1[position] == 'y' or list1[position] == 'k':
            chA.pulse_width_percent(100)
            pyb.delay(2500)        # motor 1 forward 90 degrees
            pinADir0.value(0)
            pinADir1.value(0)      # motor 1 stop
            pyb.delay(1500)
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 forward 90 degrees
            pinBDir0.value(1)
            pyb.delay(4000)
            pinBDir1.value(0)      # motor 2 set to reverse after a 4000 ms pause
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 reverse 90 degrees
            pinBDir0.value(0)
            pinBDir1.value(0)      # motor 2 stop
            pyb.delay(2500)
            pinADir0.value(1)
            pinADir1.value(0)      # motor 1 set to reverse after a 2500 ms pause
            chA.pulse_width_percent(100)
            pyb.delay(2500)        # motor 1 reverse 90 degrees
            pinADir0.value(0)
            pinADir1.value(0)      # motor 1 stop
        elif list1[position] == 's' or list1[position] == 'g':
            chA.pulse_width_percent(100)
            pyb.delay(5000)        # motor 1 forward 90 degrees
            pinADir0.value(0)
            pinADir1.value(0)      # motor 1 stop
            pyb.delay(1500)
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 forward 90 degrees
            pinBDir0.value(1)
            pyb.delay(4000)
            pinBDir1.value(0)      # motor 2 set to reverse after a 4000 ms pause
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 reverse 90 degrees
            pinBDir0.value(0)
            pinBDir1.value(0)      # motor 2 stop
            pyb.delay(2500)
            pinADir0.value(1)
            pinADir1.value(0)      # motor 1 set to reverse after a 2500 ms pause
            chA.pulse_width_percent(100)
            pyb.delay(5000)        # motor 1 reverse 90 degrees
            pinADir0.value(0)
        elif list1[position] == 'd':
            chA.pulse_width_percent(100)
            pyb.delay(7500)        # motor 1 forward 90 degrees
            pinADir0.value(0)
            pinADir1.value(0)      # motor 1 stop
            pyb.delay(1500)
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 forward 90 degrees
            pinBDir0.value(1)
            pyb.delay(4000)
            pinBDir1.value(0)      # motor 2 set to reverse after a 4000 ms pause
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 reverse 90 degrees
            pinBDir0.value(0)
            pinBDir1.value(0)      # motor 2 stop
            pyb.delay(2500)
            pinADir0.value(1)
            pinADir1.value(0)      # motor 1 set to reverse after a 2500 ms pause
            chA.pulse_width_percent(100)
            pyb.delay(7500)        # motor 1 reverse 90 degrees
            pinADir0.value(0)
        elif list1[position] == 'z' or list1[position] == 't':
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 forward 90 degrees
            pinBDir0.value(1)
            pyb.delay(4000)
            pinBDir1.value(0)      # motor 2 set to reverse after a 4000 ms pause
            chB.pulse_width_percent(100)
            pyb.delay(360)         # motor 2 reverse 90 degrees
            pinBDir0.value(0)
            pinBDir1.value(0)      # motor 2 stop
        elif list1[position] == 'n':
            chA.pulse_width_percent(0)
            chB.pulse_width_percent(0)
        uart1.write(output_str[1:2])
        time.sleep_ms(10000)
        #predictions_list = list(zip(labels, obj.output()))
        #for i in range(len(predictions_list)):
            #print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    #print(clock.fps(), "fps")
```
-
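In the motor-sequence program above (the one with the `y`/`k`, `s`/`g` and `d` branches), every branch ends with both direction pins of each motor at 0, while the initial `0, 1` levels are set only once, before `while(True)`, and neither PWM channel is ever set back to 0 in those branches. Assuming a common two-input H-bridge (TB6612/L298 style: `(0, 1)` and `(1, 0)` are the two directions, `(0, 0)` stops), the second pass would switch on `chA` with no direction selected for motor 1, while `pinBDir0.value(1)` with `pinBDir1` already 0 selects reverse for motor 2 through the whole 4000 ms wait. That is one plausible reading of both symptoms, not a confirmed diagnosis. The sketch below sets the direction at the start of every move and switches the PWM off afterwards, so each sequence starts from the same state. `Motor` and `run_sequence` are hypothetical names, and the timings are taken from the `y`/`k` branch.

```python
import time


def _sleep_ms(ms):
    # time.sleep_ms() exists on MicroPython/OpenMV; fall back on a PC.
    if hasattr(time, "sleep_ms"):
        time.sleep_ms(ms)
    else:
        time.sleep(ms / 1000)


class Motor:
    """One H-bridge channel: two direction pins and one PWM channel.

    Assumes a TB6612/L298-style driver where (in1, in2) = (0, 1) is
    "forward", (1, 0) is "reverse" and (0, 0) stops the motor.
    """

    def __init__(self, in1, in2, pwm, sleep_ms=_sleep_ms):
        self.in1 = in1            # e.g. pinADir0
        self.in2 = in2            # e.g. pinADir1
        self.pwm = pwm            # e.g. chA
        self.sleep_ms = sleep_ms  # e.g. pyb.delay on the camera

    def stop(self):
        self.pwm.pulse_width_percent(0)
        self.in1.value(0)
        self.in2.value(0)

    def run(self, forward, duty, ms):
        # Select the direction on every call, so a move never depends on
        # the pin state left behind by the previous sequence.
        self.in1.value(0 if forward else 1)
        self.in2.value(1 if forward else 0)
        self.pwm.pulse_width_percent(duty)
        self.sleep_ms(ms)
        self.stop()


def run_sequence(m1, m2, m1_ms, m2_ms=360, hold_ms=4000):
    """Motor 1 out, motor 2 out and back, then motor 1 back."""
    m1.run(True, 100, m1_ms)
    m1.sleep_ms(1500)
    m2.run(True, 100, m2_ms)
    m2.sleep_ms(hold_ms)
    m2.run(False, 100, m2_ms)
    m1.sleep_ms(2500)
    m1.run(False, 100, m1_ms)


# Usage on the camera (hypothetical wiring of the names above):
# m1 = Motor(pinADir0, pinADir1, chA, sleep_ms=pyb.delay)
# m2 = Motor(pinBDir0, pinBDir1, chB, sleep_ms=pyb.delay)
# if list1[position] in ('y', 'k'):
#     run_sequence(m1, m2, 2500)
```

During the pauses this version leaves each motor at `(0, 0)` with PWM off, rather than the `(1, 1)` level the original holds motor 2 at during the 4000 ms wait; if the driver's brake state matters for holding position, a separate brake method would be needed.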

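When communicating between the OpenMV and a microcontroller, the data written to the serial port displays correctly on the PC, but the microcontroller does not receive it. What could be the problem? Posted in OpenMV Cam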

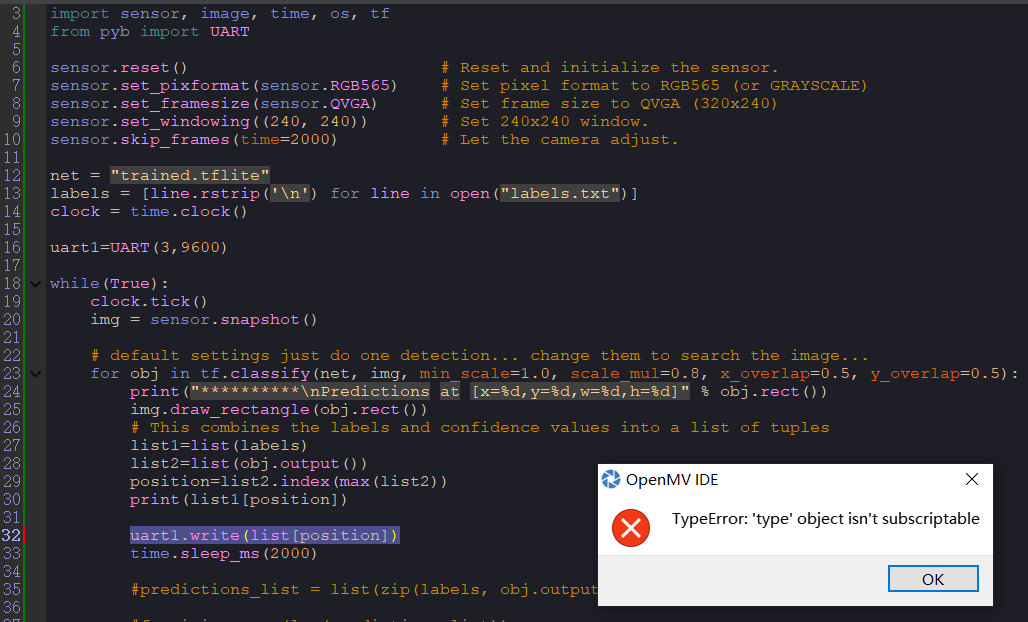

```python
import sensor, image, time, os, tf, pyb
from pyb import UART
import json

sensor.reset()                         # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))       # Set 240x240 window.
sensor.skip_frames(time=2000)          # Let the camera adjust.

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
uart1 = UART(3, 19200)

while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        list1 = list(labels)
        list2 = list(obj.output())
        position = list2.index(max(list2))
        print(list1[position])
        output_str = json.dumps(list1[position])  # method 2
        print(output_str)
        print(output_str[1:2])
        print(hex(ord(output_str[1:2])))
        data = bytearray(hex(ord(output_str[1:2])))
        uart1.write(data)
        time.sleep_ms(2500)
```
-
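One thing worth checking in the UART program above (the one using `UART(3, 19200)`): `hex(ord(c))` produces the text of a hex literal, so `data` holds the characters `'0x79'` for the label `y`, and `uart1.write(data)` sends four ASCII bytes rather than the single byte `0x79`. Whether that explains the problem depends on what the microcontroller firmware expects, which the post does not show; the baud rate (19200 here) and the TX/RX/GND wiring also have to match the microcontroller side. The sketch below only builds the two payloads so they can be compared; `label_char`, `hex_text_payload` and `raw_payload` are hypothetical helper names.

```python
import json


def label_char(label):
    """First character of the label, extracted the way the post does it:
    json.dumps('y') is '"y"', so the slice [1:2] skips the opening quote."""
    return json.dumps(label)[1:2]


def hex_text_payload(ch):
    """What the posted code sends: the text of hex(ord(ch)), e.g. b'0x79'."""
    return hex(ord(ch)).encode()


def raw_payload(ch):
    """A single raw byte holding the character code, e.g. b'y' (0x79)."""
    return bytes([ord(ch)])


# On the camera, assuming the microcontroller reads one byte per result:
# uart1.write(raw_payload(label_char(list1[position])))
```

If the microcontroller instead parses text, sending the hex string can be correct; the point is that both ends must agree on the format.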

How can I use the program imported from Edge Impulse to control motor rotation based on the image-recognition result? Posted in OpenMV Cam

```python
import sensor, image, time, os, tf, pyb
from pyb import UART

sensor.reset()                         # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))       # Set 240x240 window.
sensor.skip_frames(time=2000)          # Let the camera adjust.

pinADir0 = pyb.Pin('P3', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)
pinADir1 = pyb.Pin('P2', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)
pinBDir0 = pyb.Pin('P1', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)
pinBDir1 = pyb.Pin('P0', pyb.Pin.OUT_PP, pyb.Pin.PULL_NONE)

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()

pinADir0.value(0)
pinADir1.value(1)
pinBDir0.value(0)
pinBDir1.value(1)

# 1 kHz PWM: channel A on P7, channel B on P8
tim = pyb.Timer(4, freq=1000)
chA = tim.channel(1, pyb.Timer.PWM, pin=pyb.Pin("P7"))
chB = tim.channel(2, pyb.Timer.PWM, pin=pyb.Pin("P8"))

uart1 = UART(3, 115200)

while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        list1 = list(labels)
        list2 = list(obj.output())
        position = list2.index(max(list2))   # index of the most likely label
        print(list1[position])
        # Label strings must be quoted; assumes labels.txt contains "dianchi"
        if list1[position] == 'dianchi':
            pyb.delay(100)
            chA.pulse_width_percent(20)      # motor 1 at 20% duty for 100 ms
            pyb.delay(100)
            chA.pulse_width_percent(0)
            chB.pulse_width_percent(20)      # motor 2 at 20% duty for 100 ms
            pyb.delay(100)
            chB.pulse_width_percent(0)
        uart1.write(str(position))           # UART.write() takes str/bytes, not an int
        time.sleep_ms(1000)
```
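For the general question of driving motors from the classification result, one common pattern is a lookup table from label to action, plus a confidence threshold so that a weak prediction does not move anything. A minimal sketch under those assumptions: `pick_label`, `dispatch`, the 0.7 threshold and the contents of `actions` are all hypothetical. On the camera, `scores` would be `obj.output()` and each action body would call `chA.pulse_width_percent(...)` and `pyb.delay(...)` as in the program above.

```python
def pick_label(labels, scores):
    """Return (label, score) for the highest-scoring class."""
    best = 0
    for i in range(1, len(scores)):
        if scores[i] > scores[best]:
            best = i
    return labels[best], scores[best]


def dispatch(labels, scores, actions, threshold=0.7):
    """Run the action mapped to the top label if its score reaches the
    threshold. Returns the label that was acted on, or None."""
    label, score = pick_label(labels, scores)
    if score < threshold:
        return None            # too uncertain: leave the motors alone
    action = actions.get(label)
    if action is None:
        return None            # label has no motor action (e.g. background)
    action()
    return label


# Usage on the camera (hypothetical action body):
# def battery():
#     chA.pulse_width_percent(20)
#     pyb.delay(100)
#     chA.pulse_width_percent(0)
# actions = {"dianchi": battery}
# for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
#     dispatch(labels, obj.output(), actions)
```

Keeping the motor sequences in separate functions also makes it easier to give each one its own explicit start state.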