Posts by bzvo

-

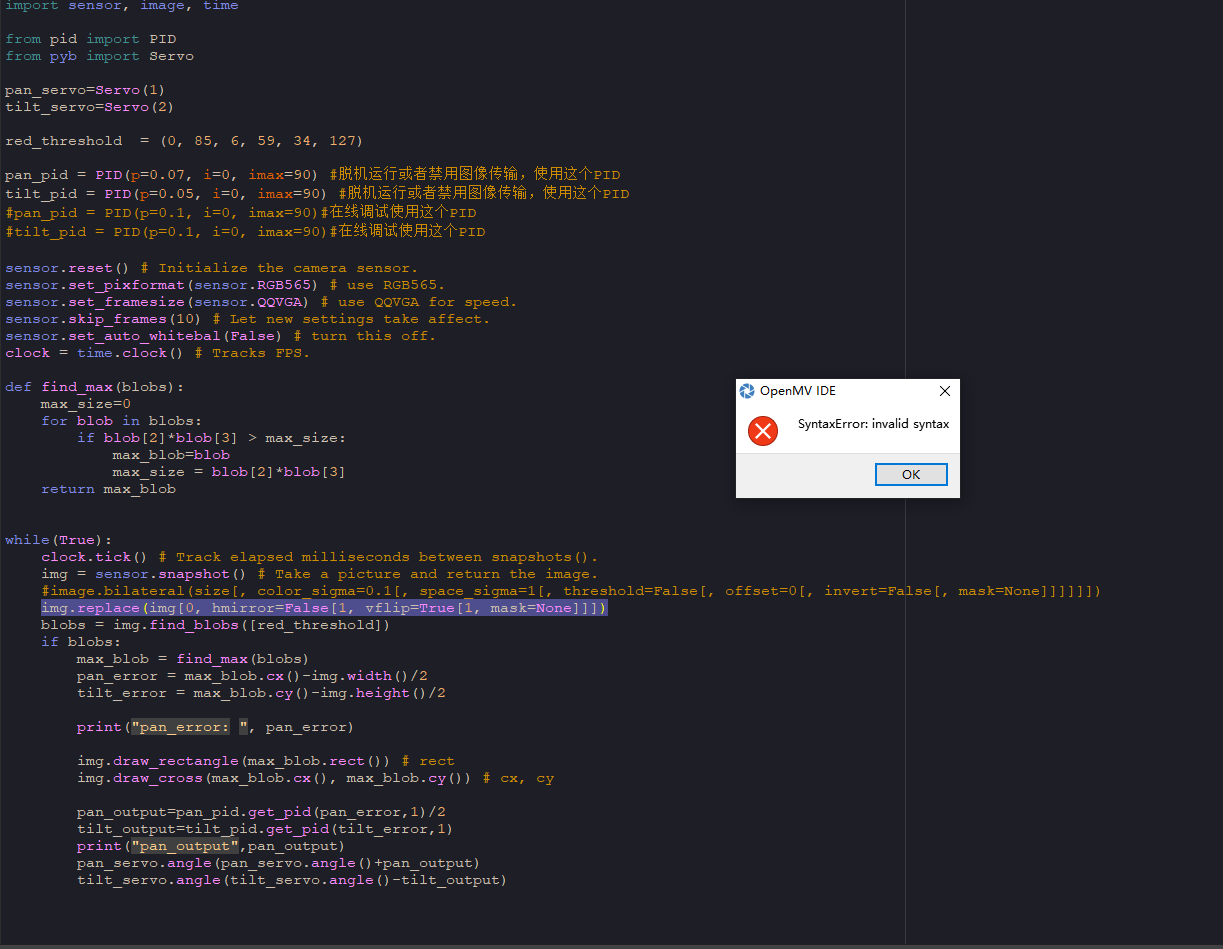

How do I fill in the parameters of the image flip function? After I fill them in, I get a syntax error. Posted in OpenMV Cam

while(True): clock.tick() # Track elapsed milliseconds between snapshots(). img = sensor.snapshot() # Take a picture and return the image. #image.bilateral(size[, color_sigma=0.1[, space_sigma=1[, threshold=False[, offset=0[, invert=False[, mask=None]]]]]]) img.replace(img[0, hmirror=False[1, vflip=True[1, mask=None]]]) blobs = img.find_blobs([red_threshold]) if blobs:
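The square brackets in a documentation signature mark optional arguments; they are not typed in the call itself, which is a likely source of the syntax error. The plain-Python sketch below uses a hypothetical stand-in `replace()` that works on a list of pixel rows (it is not the OpenMV implementation) to show the calling shape, with optional arguments passed by keyword.

```python
def replace(image, hmirror=False, vflip=False):
    """Hypothetical stand-in: return a flipped copy of a row-major pixel grid."""
    rows = [list(row) for row in image]
    if hmirror:
        # Mirror horizontally: reverse the pixels inside every row.
        rows = [row[::-1] for row in rows]
    if vflip:
        # Flip vertically: reverse the order of the rows.
        rows = rows[::-1]
    return rows


frame = [[1, 2, 3],
         [4, 5, 6]]

# Optional arguments are passed by keyword, without any brackets.
flipped = replace(frame, vflip=True)
mirrored = replace(frame, hmirror=True)
```

On the camera, the analogous call would presumably be `img.replace(img, hmirror=False, vflip=True)`; check the exact signature against the documentation for your firmware version.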

-

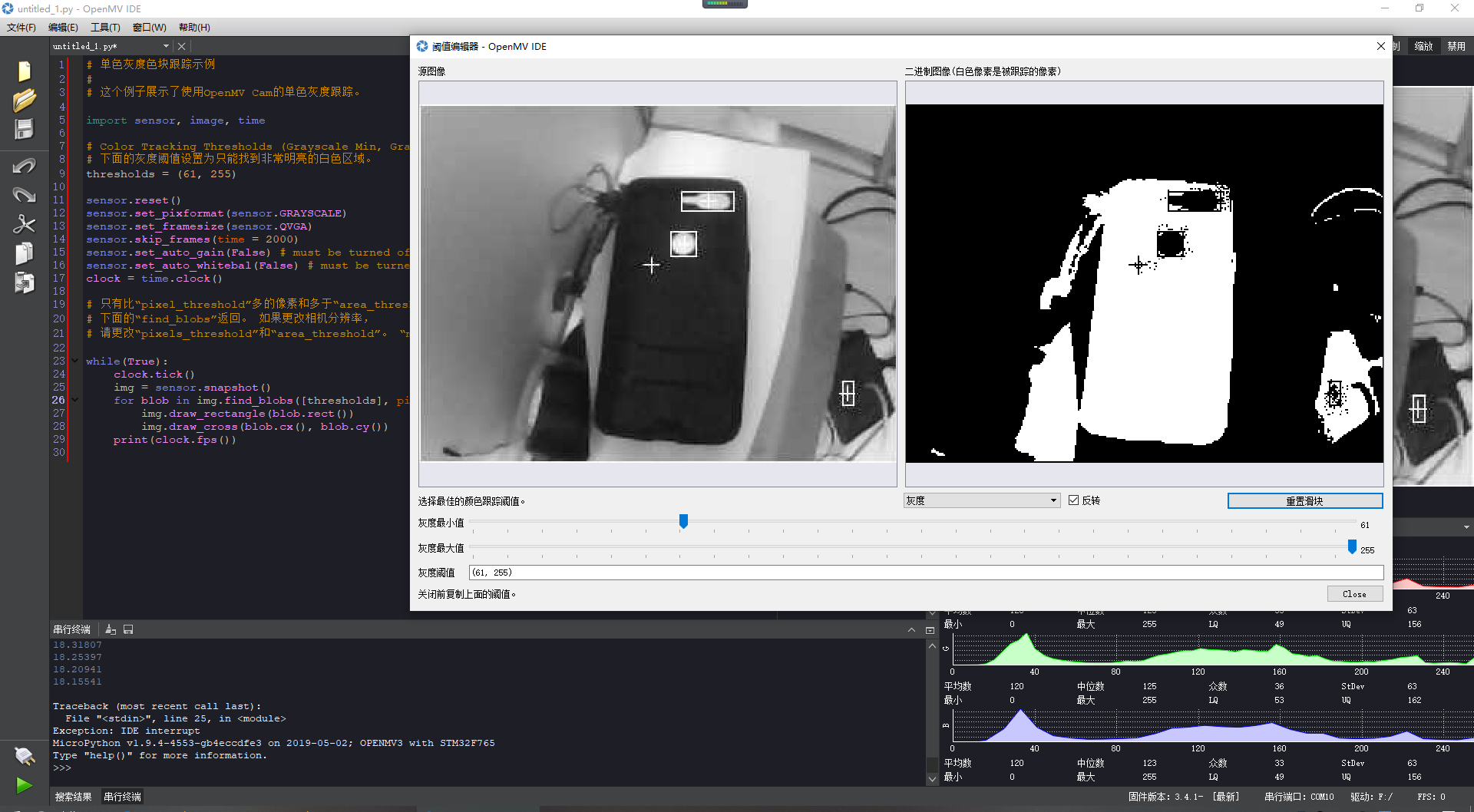

In single-color grayscale blob detection, I clearly set it to black, so why does it detect white? Posted in OpenMV Cam

# Single-color grayscale blob tracking example # # This example shows single-color grayscale tracking with the OpenMV Cam. import sensor, image, time # Color Tracking Thresholds (Grayscale Min, Grayscale Max) # The grayscale threshold below is set to find only very bright white regions. thresholds = (61, 255) sensor.reset() sensor.set_pixformat(sensor.GRAYSCALE) sensor.set_framesize(sensor.QVGA) sensor.skip_frames(time = 2000) sensor.set_auto_gain(False) # must be turned off for color tracking sensor.set_auto_whitebal(False) # must be turned off for color tracking clock = time.clock() # Only blobs with more pixels than "pixels_threshold" and more area than "area_threshold" are # returned by "find_blobs" below. If you change the camera resolution, # change "pixels_threshold" and "area_threshold" as well. "merge = True" merges all overlapping blobs in the image. while(True): clock.tick() img = sensor.snapshot() for blob in img.find_blobs([thresholds], pixels_threshold=50, area_threshold=50, merge=False): img.draw_rectangle(blob.rect()) img.draw_cross(blob.cx(), blob.cy()) print(clock.fps())
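A grayscale threshold is a `(min, max)` range, and `(61, 255)` covers the bright end of the scale, which would explain why white regions are detected. The sketch below is plain Python rather than camera code; `matches()` is a hypothetical helper and `(0, 60)` is an assumed example range for dark regions that would need tuning for a real scene.

```python
def matches(pixel, threshold):
    """Return True if a grayscale value (0-255) lies inside the (min, max) range."""
    low, high = threshold
    return low <= pixel <= high


bright_threshold = (61, 255)  # the range used in the post: bright pixels
dark_threshold = (0, 60)      # assumed example range for dark pixels

pixels = [5, 40, 61, 200, 255]
bright = [p for p in pixels if matches(p, bright_threshold)]
dark = [p for p in pixels if matches(p, dark_threshold)]
```

With these sample values, only 5 and 40 fall inside the dark range, while the range from the post selects the three bright values.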

-

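Reflections on the ball's surface make the OpenMV detection inaccurate. Is there a way to treat the detected blob as the whole ball once its area exceeds a certain value? Posted in OpenMV Cam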

# Measure the distance # # This example shows off how to measure the distance through the size in image # This example in particular looks for yellow pingpong ball. import sensor, image, time # For color tracking to work really well you should ideally be in a very, very, # very, controlled environment where the lighting is constant... yellow_threshold =(0, 30, 127, -128, -128, 127) # You may need to tweak the above settings for tracking green things... # Select an area in the Framebuffer to copy the color settings. sensor.reset() # Initialize the camera sensor. sensor.set_pixformat(sensor.RGB565) # use RGB565. sensor.set_framesize(sensor.QQVGA) # use QQVGA for speed. sensor.skip_frames(time = 2000) # Let new settings take effect. sensor.set_auto_whitebal(False) # turn this off. clock = time.clock() # Tracks FPS. K=325#the value should be measured #pick a known distance and derive K from the pixel size measured at that distance K=20*25 K2=0.16 #K2 is the object's height (length) divided by its size in pixels, e.g. K2=4/25 while(True): clock.tick() # Track elapsed milliseconds between snapshots(). #img = sensor.snapshot() # Take a picture and return the image. img = sensor.snapshot() blobs = img.find_blobs([yellow_threshold]) if len(blobs) == 1: # Draw a rect around the blob. b = blobs[0] img.draw_rectangle(b[0:4]) # rect img.draw_cross(b[5], b[6]) # cx, cy Lm = (b[2]+b[3])/2 #(ball length + width)/2 gives Lm length = K/Lm print(length) size=K2*Lm #print(size) #print(clock.fps()) # Note: Your OpenMV Cam runs about half as fast while # connected to your computer. The FPS should increase once disconnected.
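The distance estimate in this post is `K / Lm`, where `K` is a known distance multiplied by the blob size in pixels measured at that distance. One possible workaround for glare, sketched below in plain Python, is to use the larger side of the bounding box instead of the average, on the assumption that a reflection clips only part of the blob. The helper names and the `use_larger_side` flag are hypothetical, not part of the OpenMV API.

```python
def calibrate_k(known_distance, measured_pixels):
    """K is the product of a known distance and the blob size seen at that distance."""
    return known_distance * measured_pixels


def estimate_distance(k, w, h, use_larger_side=False):
    """Estimate distance from the blob's bounding-box width and height."""
    # Averaging w and h underestimates the ball when glare clips one side;
    # taking the larger side is one assumed workaround for that case.
    size = max(w, h) if use_larger_side else (w + h) / 2
    return k / size


k = calibrate_k(20, 25)                       # the post's example: K = 20 * 25
full = estimate_distance(k, 25, 25)           # whole ball visible
clipped_avg = estimate_distance(k, 25, 15)    # glare removes part of the height
clipped_max = estimate_distance(k, 25, 15, use_larger_side=True)
```

In this example the averaged size overestimates the distance (25.0 instead of 20.0), while the larger-side variant recovers the calibrated value. This helps only when at least one dimension of the ball is still fully detected.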

-

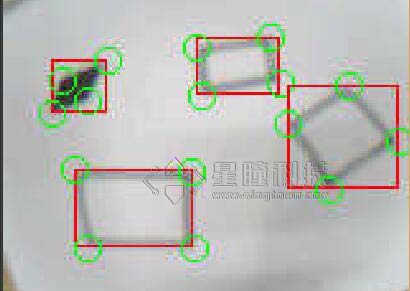

Experts, how can I extract the coordinates of the white rectangle drawn on the image? Posted in OpenMV Cam
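If the white rectangle is drawn from an `(x, y, w, h)` tuple such as `area`, the coordinates are already in that tuple and need no second detection pass. The plain-Python sketch below computes the rectangle's center offset from the frame center; `rect_center_offset()` is a hypothetical helper, and the sample rectangle and the 160x120 (QQVGA) frame size are example values.

```python
def rect_center_offset(rect, frame_width, frame_height):
    """Return the (x, y) offset of a rectangle's center from the frame center."""
    x, y, w, h = rect
    x_error = x + w / 2 - frame_width / 2    # positive: right of center
    y_error = y + h / 2 - frame_height / 2   # positive: below center
    return x_error, y_error


area = (100, 20, 40, 40)                      # example (x, y, w, h) rectangle
offset = rect_center_offset(area, 160, 120)   # QQVGA frame
```

Here the rectangle's center is at (120, 40), so the offset from the frame center (80, 60) is 40 pixels to the right and 20 pixels up.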

if 90<statistics.l_mode()<110 and -35<statistics.a_mode()<16 and -28<statistics.b_mode()<20:#if the circle is white img.draw_circle(c.x(), c.y(), c.r(), color = (255, 0, 0))#outline detected white circles with a red circle print(circle.x(),circle.y()) else: img.draw_rectangle(area, color = (255, 255, 255))#outline circles that are neither black nor white with a white rectangle wai = img.find_rects(threshold = 10000) x_error = wai[0]+wai[2]/2-img.width()/2 #left/right offset y_error = wai[1]+wai[3]/2-img.height()/2 #up/down offset print(x_error,y_error)

-

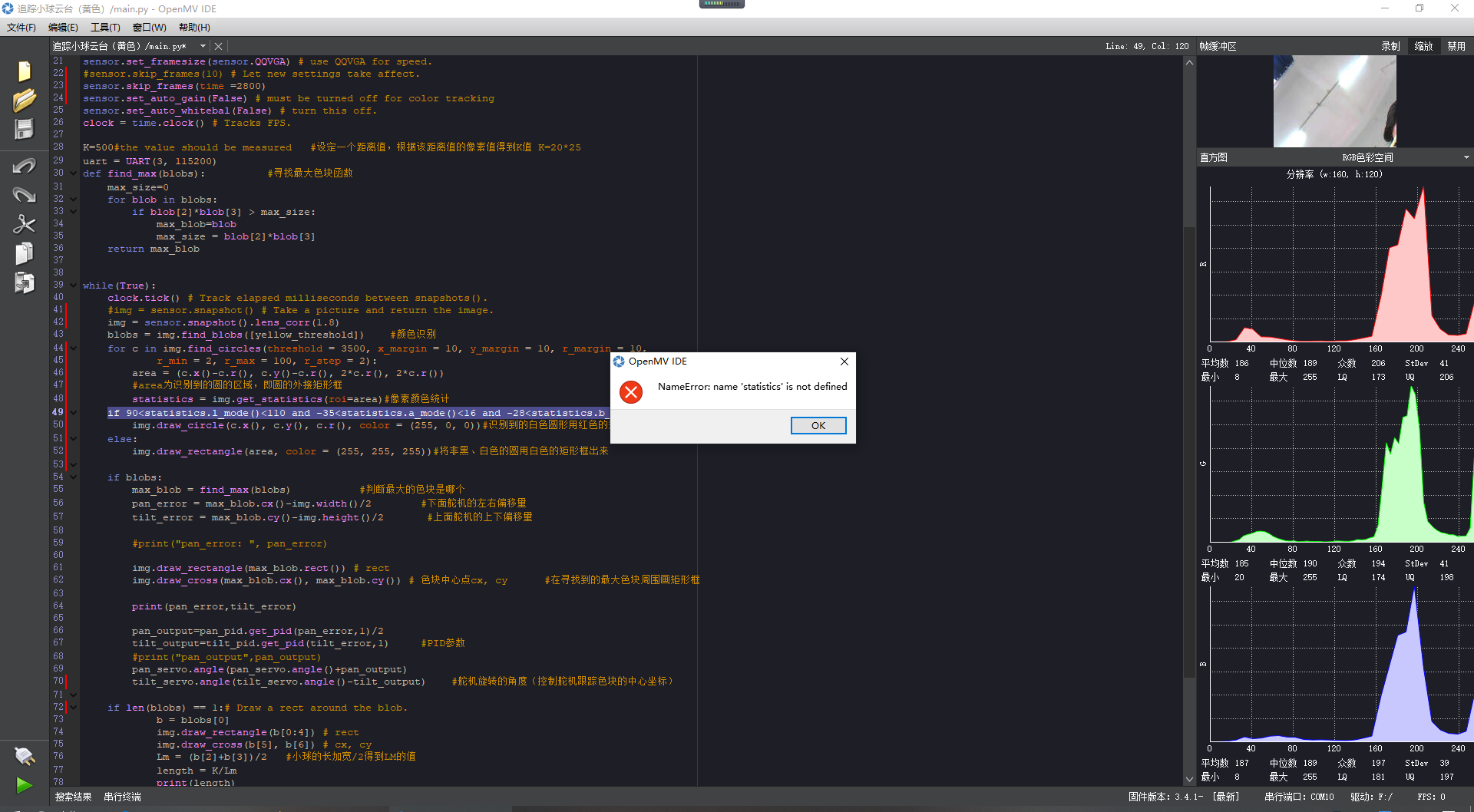

Can anyone tell me how to solve this problem? It pops up when I compile and download the script to the OpenMV. Posted in OpenMV Cam

import sensor, image, time from pid import PID from pyb import Servo from pyb import UART import json pan_servo=Servo(1) #bottom tilt_servo=Servo(2) #top yellow_threshold = (100, 0, -128, 53, 39, 127) pan_pid = PID(p=0.07, i=0, imax=90) #use this PID when running offline or with image transfer disabled tilt_pid = PID(p=0.05, i=0, imax=90) #use this PID when running offline or with image transfer disabled #pan_pid = PID(p=0.1, i=0, imax=90)#use this PID for online debugging #tilt_pid = PID(p=0.1, i=0, imax=90)#use this PID for online debugging sensor.reset() # Initialize the camera sensor. sensor.set_pixformat(sensor.RGB565) # use RGB565. sensor.set_framesize(sensor.QQVGA) # use QQVGA for speed. #sensor.skip_frames(10) # Let new settings take effect. sensor.skip_frames(time =2800) sensor.set_auto_gain(False) # must be turned off for color tracking sensor.set_auto_whitebal(False) # turn this off. clock = time.clock() # Tracks FPS. K=500#the value should be measured #pick a known distance and derive K from the pixel size measured at that distance K=20*25 uart = UART(3, 115200) def find_max(blobs): #function to find the largest blob max_size=0 for blob in blobs: if blob[2]*blob[3] > max_size: max_blob=blob max_size = blob[2]*blob[3] return max_blob while(True): clock.tick() # Track elapsed milliseconds between snapshots(). #img = sensor.snapshot() # Take a picture and return the image.
img = sensor.snapshot().lens_corr(1.8) blobs = img.find_blobs([yellow_threshold]) #color detection for c in img.find_circles(threshold = 3500, x_margin = 10, y_margin = 10, r_margin = 10, r_min = 2, r_max = 100, r_step = 2): area = (c.x()-c.r(), c.y()-c.r(), 2*c.r(), 2*c.r()) #area is the region of the detected circle, i.e. the circle's bounding rectangle statistics = img.get_statistics(roi=area)#pixel color statistics if 90<statistics.l_mode()<110 and -35<statistics.a_mode()<16 and -28<statistics.b_mode()<20:#if the circle is white img.draw_circle(c.x(), c.y(), c.r(), color = (255, 0, 0))#outline detected white circles with a red circle else: img.draw_rectangle(area, color = (255, 255, 255))#outline circles that are neither black nor white with a white rectangle if blobs: max_blob = find_max(blobs) #determine which blob is the largest pan_error = max_blob.cx()-img.width()/2 #left/right offset for the bottom servo tilt_error = max_blob.cy()-img.height()/2 #up/down offset for the top servo #print("pan_error: ", pan_error) img.draw_rectangle(max_blob.rect()) # rect img.draw_cross(max_blob.cx(), max_blob.cy()) # blob center cx, cy #draw a rectangle around the largest blob found print(pan_error,tilt_error) pan_output=pan_pid.get_pid(pan_error,1)/2 tilt_output=tilt_pid.get_pid(tilt_error,1) #PID parameters #print("pan_output",pan_output) pan_servo.angle(pan_servo.angle()+pan_output) tilt_servo.angle(tilt_servo.angle()-tilt_output) #servo rotation angle (steers the servos to track the blob center) if len(blobs) == 1:# Draw a rect around the blob. b = blobs[0] img.draw_rectangle(b[0:4]) # rect img.draw_cross(b[5], b[6]) # cx, cy Lm = (b[2]+b[3])/2 #(ball length + width)/2 gives Lm length = K/Lm print(length) head="00" output_str="[%d,%d,%.2f]" % (pan_error,tilt_error,length) #method 1 print('you send:',output_str) uart.write(head+'\r\n') uart.write(output_str+'\r\n') uart.write(head+'\r\n') else: print('not found!') :folded_hands: :folded_hands: :folded_hands:

-

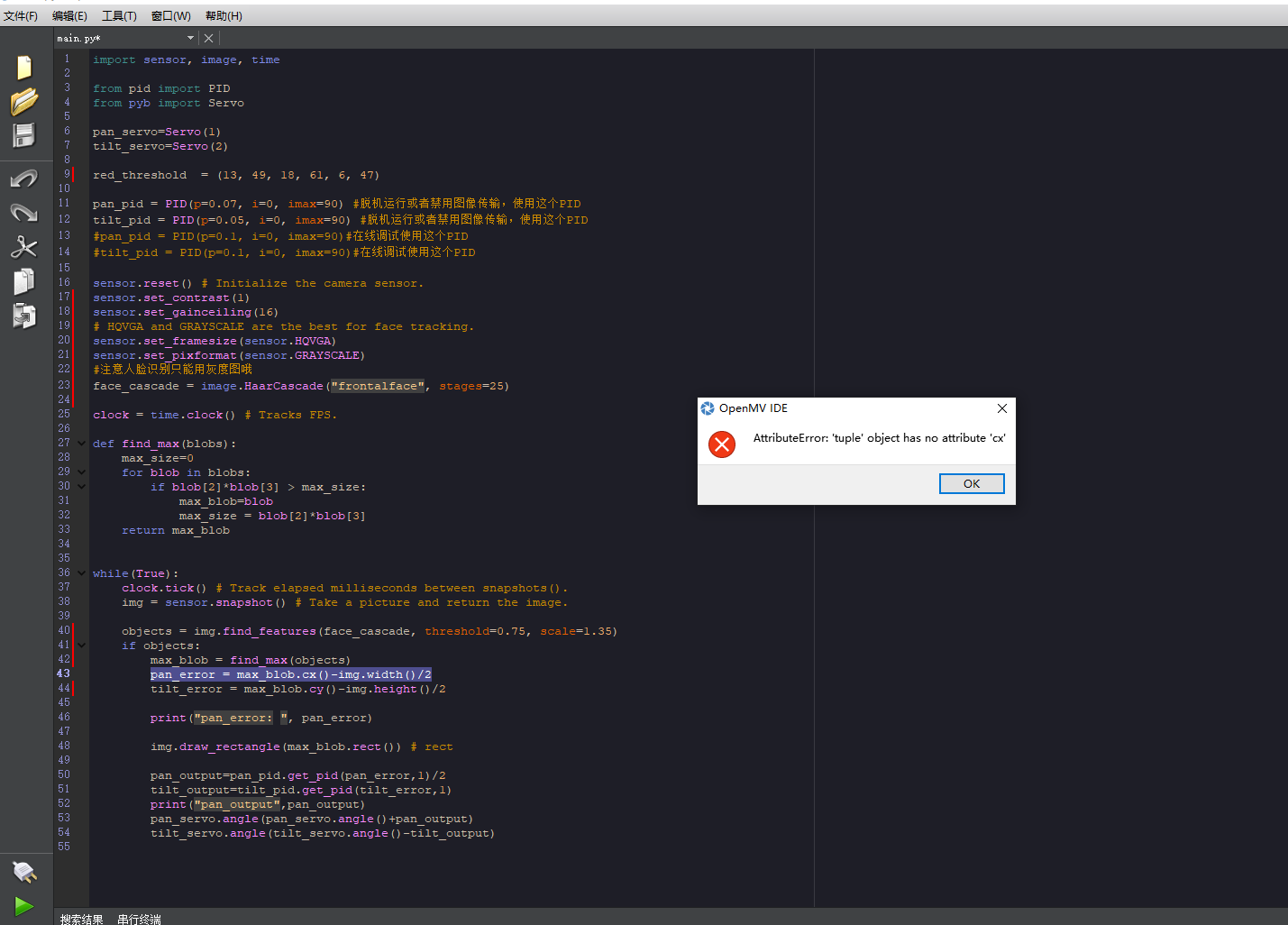

How do I retrieve the coordinates of a detected face? I followed the face recognition tutorial video, but the code does not run properly. Posted in OpenMV Cam

while(True): clock.tick() # Track elapsed milliseconds between snapshots(). img = sensor.snapshot() # Take a picture and return the image. objects = img.find_features(face_cascade, threshold=0.75, scale=1.35) if objects: max_blob = find_max(objects) pan_error = max_blob.cx()-img.width()/2 tilt_error = max_blob.cy()-img.height()/2 print("pan_error: ", pan_error) img.draw_rectangle(max_blob.rect()) # rect pan_output=pan_pid.get_pid(pan_error,1)/2 tilt_output=tilt_pid.get_pid(tilt_error,1) print("pan_output",pan_output) pan_servo.angle(pan_servo.angle()+pan_output) tilt_servo.angle(tilt_servo.angle()-tilt_output) ![0_1561518235957_}`8~7DP7X6_]2FW`IA%J3{M.png](https://fcdn.singtown.com/4654577d-b6fc-4356-8548-328ea224ffa7.png)
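A likely cause, assuming `find_features` returns plain `(x, y, w, h)` tuples rather than blob objects, is that calls such as `max_blob.cx()` and `max_blob.rect()` do not exist on its results. The plain-Python sketch below picks the largest rectangle and derives its center by arithmetic; `largest_rect()` and `rect_center()` are hypothetical helpers and the face rectangles are made-up sample data.

```python
def largest_rect(rects):
    """Return the (x, y, w, h) tuple with the largest area, or None if the list is empty."""
    return max(rects, key=lambda r: r[2] * r[3], default=None)


def rect_center(rect):
    """Return the integer center (cx, cy) of an (x, y, w, h) tuple."""
    x, y, w, h = rect
    return x + w // 2, y + h // 2


faces = [(10, 10, 20, 20), (50, 30, 40, 48)]   # sample detections
face = largest_rect(faces)
center = rect_center(face) if face is not None else None
```

The resulting center can then feed the pan and tilt error terms in place of `cx()` and `cy()`, and the tuple itself can be passed where a rectangle is expected.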