@kidswong999 Can a neural network model classify separate regions of an image?

Posts by burh

-

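RE: The roi parameter after img only gives correct recognition when it is 0,0 and fails with any other value. How can I classify different regions separately? Posted in OpenMV Cam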

@kidswong999

Splitting the image into blocks does work, but then it no longer seems to recognize anything. What could be the reason?

-

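RE: The roi parameter after img only gives correct recognition when it is 0,0 and fails with any other value. How can I classify different regions separately? Posted in OpenMV Cam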

```python
import sensor, image, time, os, tf, lcd
from pyb import UART

sensor.reset()
sensor.set_pixformat(sensor.RGB565)
sensor.set_framesize(sensor.QVGA)
sensor.set_windowing((128, 128))
sensor.skip_frames(time=2000)
lcd.init()

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]
uart = UART(3, 19200)
clock = time.clock()

while(True):
    clock.tick()
    img = sensor.snapshot()
    lcd.display(img)
    for obj in tf.classify(net, img, [0,0,128,68], min_scale=1, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        print("1")
        img.draw_rectangle(obj.rect())
        predictions_list = list(zip(labels, obj.output()))
        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))
        if predictions_list[0][1] > 0.99:
            uart.write("0")
            print(0)
        if predictions_list[1][1] > 0.97:
            uart.write("1")
            print(1)
```

The problem is in `for obj in tf.classify(net, img,[0,0,128,68],min_scale=1, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):`. Recognition only works when the roi parameter after `img` starts at 0,0; with any other value, nothing is recognized.

-

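RE: The roi parameter after img only gives correct recognition when it is 0,0 and fails with any other value. How can I classify different regions separately? Posted in OpenMV Cam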

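@kidswong999 That is not quite what I am asking. My question is mainly about the roi parameter: why does recognition fail when I switch to these regions?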

-

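RE: The roi parameter after img only gives correct recognition when it is 0,0 and fails with any other value. How can I classify different regions separately? Posted in OpenMV Cam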

@kidswong999 Then if I want to classify four regions, how should I divide them up? Don't I need to draw lines to mark the regions?
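One way to picture the four-region split is as a list of ROI tuples computed from the window size. The sketch below assumes the ROI is read as `(x, y, w, h)`, which matches the `[x=%d,y=%d,w=%d,h=%d]` format the posted scripts print for `obj.rect()`. The helper name `grid_rois` is hypothetical and not part of the OpenMV API; the code is plain Python so it can be checked off the camera.

```python
def grid_rois(width, height, cols, rows):
    """Split a width x height frame into cols x rows ROIs as (x, y, w, h) tuples."""
    cell_w = width // cols
    cell_h = height // rows
    rois = []
    for row in range(rows):
        for col in range(cols):
            # Each ROI starts at the cell's top-left corner and spans one cell.
            rois.append((col * cell_w, row * cell_h, cell_w, cell_h))
    return rois

# Four regions of a 240x240 window (window size taken from the posted script).
quadrants = grid_rois(240, 240, 2, 2)
for roi in quadrants:
    print(roi)
```

On the camera, each tuple could presumably be passed in turn as the roi argument of `tf.classify`, in the same position the posted scripts use. Lines drawn with `img.draw_rectangle` are only a visual aid; because they are drawn into the image itself, it is probably safer to draw them after classification rather than before.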

-

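The roi parameter after img only gives correct recognition when it is 0,0 and fails with any other value. How can I classify different regions separately? Posted in OpenMV Cam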

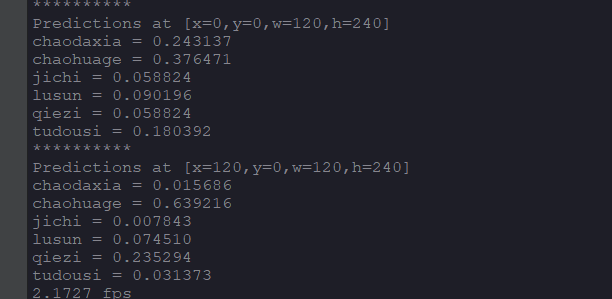

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, lcd

sensor.reset()                         # Reset and initialize the sensor.
sensor.set_pixformat(sensor.GRAYSCALE) # Set pixel format to GRAYSCALE (or RGB565)
sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
sensor.set_windowing((240, 240))       # Set 240x240 window.
sensor.skip_frames(time=2000)          # Let the camera adjust.
lcd.init()

net = "trained.tflite"
labels = [line.rstrip('\n') for line in open("labels.txt")]

clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()
    img.draw_rectangle([0,0,240,120])
    img.draw_rectangle([0,120,240,240])

    for obj in tf.classify(net, img, [0,0,240,120], min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        predictions_list = list(zip(labels, obj.output()))
        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    for obj in tf.classify(net, img, [0,120,240,240], min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        predictions_list = list(zip(labels, obj.output()))
        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    print(clock.fps(), "fps")
```
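A quick way to sanity-check the two ROIs in the script above is to compute how far each one reaches past the 240x240 window. This is only a sketch: it assumes the ROI is interpreted as `(x, y, w, h)`, as the `[x=%d,y=%d,w=%d,h=%d]` print format suggests, and `roi_overflow` is a hypothetical helper rather than an OpenMV function. Whether an overflowing ROI is what causes the failed recognition is not confirmed here.

```python
def roi_overflow(roi, width, height):
    """Return how far an (x, y, w, h) ROI extends past the right and bottom edges."""
    x, y, w, h = roi
    return (max(0, x + w - width), max(0, y + h - height))

print(roi_overflow((0, 0, 240, 120), 240, 240))    # upper half: (0, 0)
print(roi_overflow((0, 120, 240, 240), 240, 240))  # second ROI in the script: (0, 120)
print(roi_overflow((0, 120, 240, 120), 240, 240))  # lower half as (x, y, w, h): (0, 0)
```

Under that assumption, `[0,120,240,240]` describes a region running from y=120 to y=360, which is 120 pixels below the bottom of the window, whereas `[0,120,240,120]` would cover exactly the lower half.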