@kidswong999 Oh, I see. OK.

Posts made by blf6

-

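RE: Using the cloud to automatically generate an OpenMV neural network model for object detection. Training on the web page works well, but when run on the OpenMV the returned values are all close to 1. Posted in OpenMV Cam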

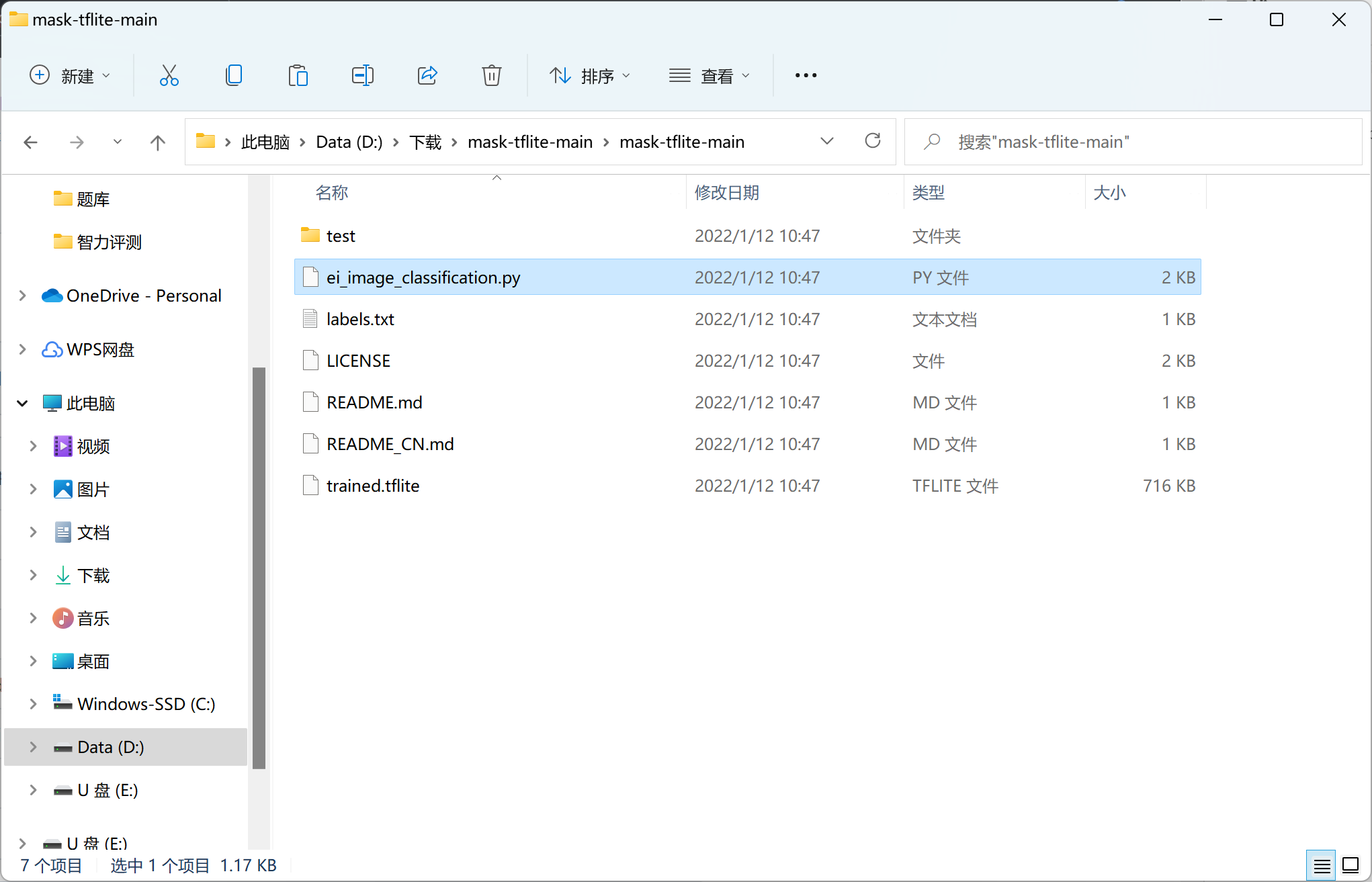

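@kidswong999 I downloaded this model and code from GitHub,

but the test results are still poor. What is going on?

(The image above shows the result when testing with a mask on.)

-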

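RE: Using the cloud to automatically generate an OpenMV neural network model for object detection. Training on the web page works well, but when run on the OpenMV the returned values are all close to 1. Posted in OpenMV Cam

@kidswong999 There are 230 images for each of the 2 classes (with mask and without mask), so more than 400 in total. That should be enough (the tutorial I followed used a bit over 200 per class).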

-

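Using the cloud to automatically generate an OpenMV neural network model for object detection. Training on the web page works well, but when run on the OpenMV the returned values are all close to 1. Posted in OpenMV Cam

I followed the tutorial on mask detection, using the cloud to automatically generate an OpenMV neural network model that decides whether a mask is being worn. Early on, the API Key could not be used, but fortunately I could upload the files instead (I don't know whether that has any effect). After that everything went smoothly. Finally I copied the 3 generated files to the OpenMV and ran the .py script, but the test results are strange: whether or not a mask is worn in the image, both recognition probabilities are very close to 1 (some even exceed 1, which is more frustrating than a wrong classification).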
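The complaint above is that the two scores do not behave like probabilities. As a rough illustration only, the check below is a minimal sketch that runs on a PC (not on the OpenMV) and tests whether a list of scores lies in [0, 1] and sums to about 1. The helper name `looks_like_probabilities` and the tolerance are hypothetical; the first pair is copied from the log later in this post, and the second pair is a made-up well-formed output for comparison.

```python
def looks_like_probabilities(scores, tol=0.01):
    """Return True if every score is in [0, 1] and the scores sum to about 1."""
    in_range = all(0.0 <= s <= 1.0 for s in scores)
    sums_to_one = abs(sum(scores) - 1.0) <= tol
    return in_range and sums_to_one


# One output pair copied from the log in this post.
print(looks_like_probabilities([1.05078, 0.949219]))  # False

# A hypothetical well-formed two-class output, for comparison only.
print(looks_like_probabilities([0.93, 0.07]))  # True
```

A check like this only flags the symptom; it says nothing about why the scores are out of range.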

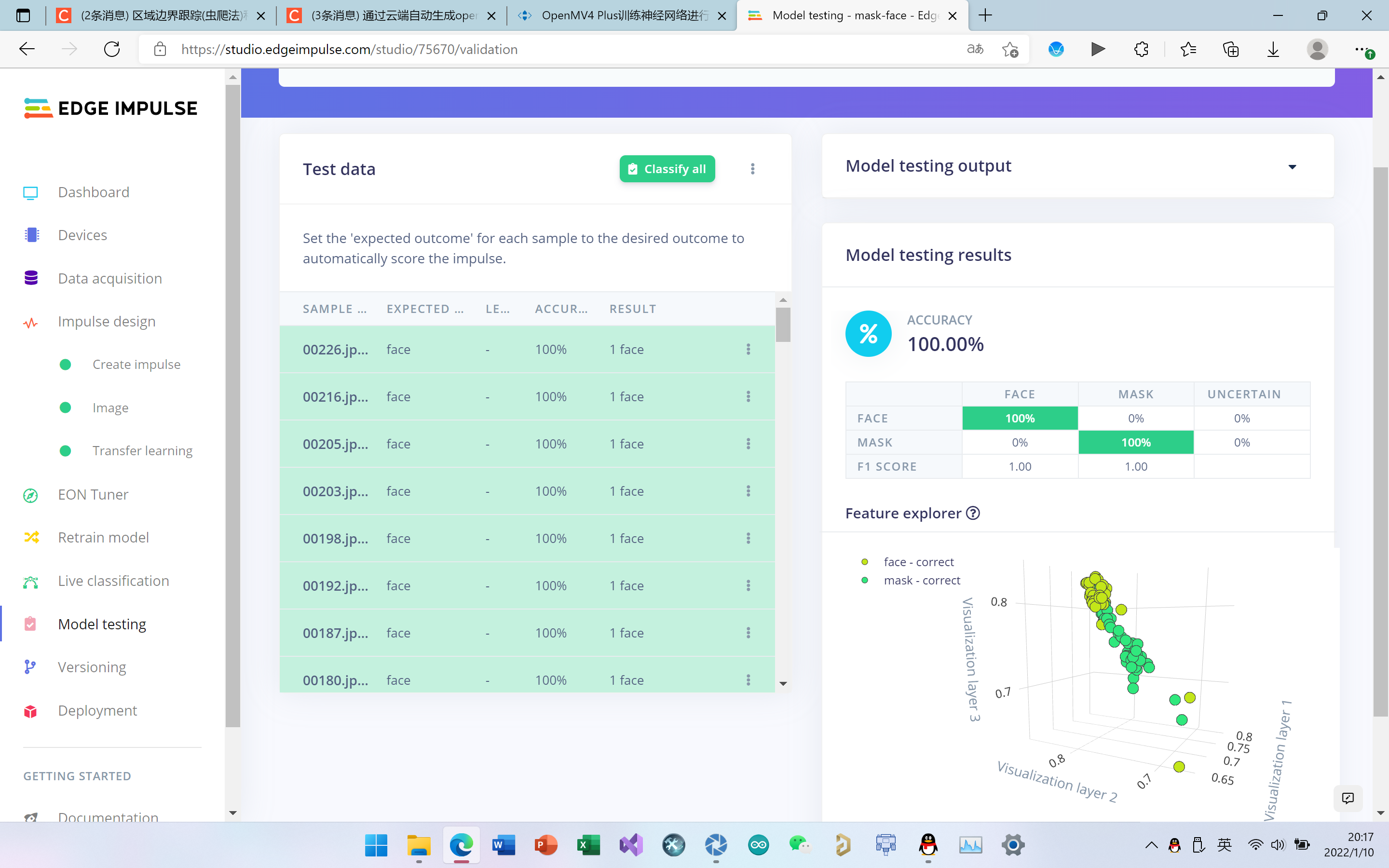

Training results on the web

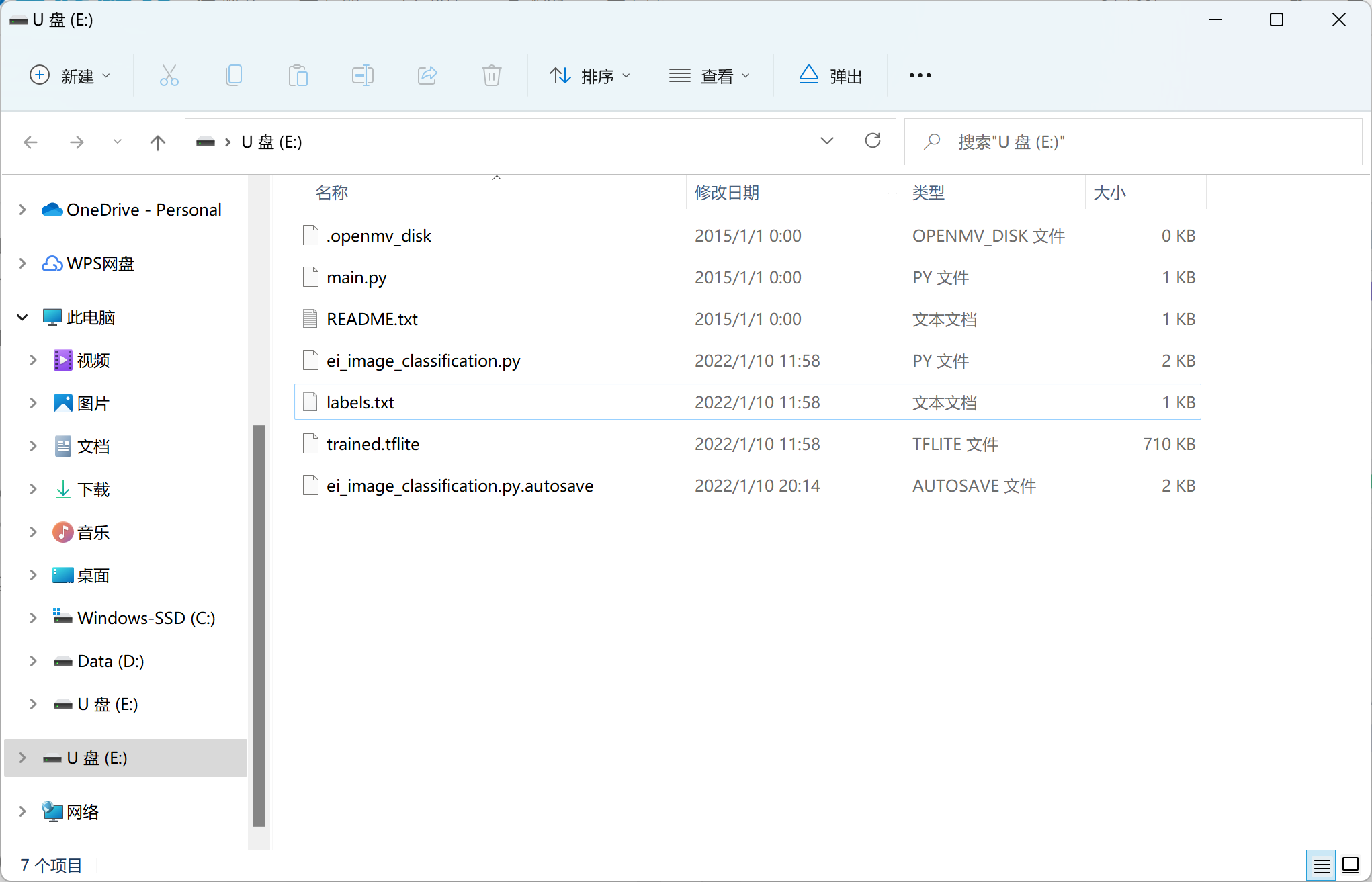

The 3 generated files, added to the OpenMV's SD card

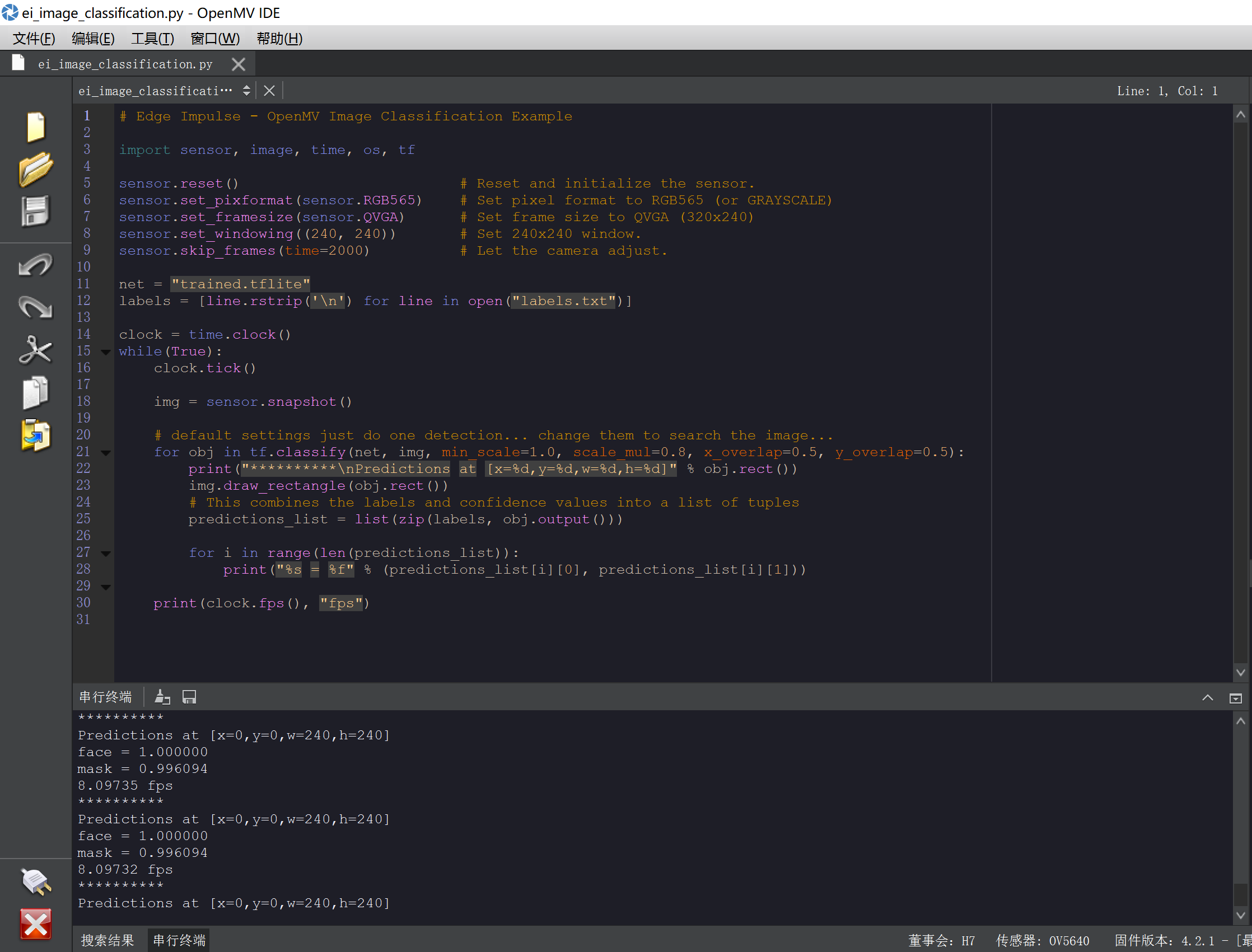

Code:

    # Edge Impulse - OpenMV Image Classification Example

    import sensor, image, time, os, tf

    sensor.reset()                         # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))       # Set 240x240 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    net = "trained.tflite"
    labels = [line.rstrip('\n') for line in open("labels.txt")]

    clock = time.clock()
    while(True):
        clock.tick()

        img = sensor.snapshot()

        # default settings just do one detection... change them to search the image...
        for obj in tf.classify(net, img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            print(obj.output())
            # This combines the labels and confidence values into a list of tuples
            predictions_list = list(zip(labels, obj.output()))

            for i in range(len(predictions_list)):
                print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

        print(clock.fps(), "fps")

Output after running the program (the recognition rates for the two cases are very similar)

Return values without a mask:

Predictions at [x=0,y=0,w=240,h=240]

[1.05078, 0.949219]

face = 1.050781

mask = 0.949219

8.2121 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.03906, 0.960938]

face = 1.039063

mask = 0.960938

8.21203 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.21228 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.03516, 0.964844]

face = 1.035156

mask = 0.964844

8.2122 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.00781, 0.992188]

face = 1.007813

mask = 0.992188

8.21213 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.21238 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.2123 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.21223 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.21247 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.00781, 0.992188]

face = 1.007813

mask = 0.992188

8.21239 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.21233 fps

Return values with a mask on (the recognition rates are about the same as without a mask):

Predictions at [x=0,y=0,w=240,h=240]

[1.02344, 0.976563]

face = 1.023438

mask = 0.976563

8.21273 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.02344, 0.976563]

face = 1.023438

mask = 0.976563

8.21266 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01172, 0.988281]

face = 1.011719

mask = 0.988281

8.21259 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.02344, 0.976563]

face = 1.023438

mask = 0.976563

8.21282 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.21275 fps

Predictions at [x=0,y=0,w=240,h=240]

[1.01563, 0.984375]

face = 1.015625

mask = 0.984375

8.21268 fps
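To make the pattern in the two logs easier to see, here is a small sketch that runs on a PC (not on the OpenMV) and summarizes the distinct (face, mask) pairs copied from the output above. The helper name `summarize` is hypothetical. It reports the range of face + mask sums and which label scores highest in each condition.

```python
# Distinct (face, mask) output pairs copied from the logs in this post.
no_mask = [(1.05078, 0.949219), (1.03906, 0.960938), (1.01563, 0.984375),
           (1.03516, 0.964844), (1.00781, 0.992188)]
with_mask = [(1.02344, 0.976563), (1.01172, 0.988281), (1.01563, 0.984375)]


def summarize(pairs):
    """Return (smallest sum, largest sum, set of top-scoring labels)."""
    sums = [face + mask for face, mask in pairs]
    top = {"face" if face > mask else "mask" for face, mask in pairs}
    return min(sums), max(sums), top


for name, pairs in (("no mask", no_mask), ("with mask", with_mask)):
    lo, hi, top = summarize(pairs)
    print(name, "sum range: %.4f to %.4f" % (lo, hi), "top label(s):", sorted(top))
```

In both conditions every pair sums to roughly 2 rather than 1, and `face` is always the higher score. A constant sum of 2 may hint at an output scaling mismatch between the exported model and the firmware, but that is only a guess and is not confirmed anywhere in this thread.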