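When my OpenMV runs from the IDE, UART receive and transmit both work correctly. When it runs standalone (offline), it still transmits data normally but no longer receives data correctly. What could be the cause?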

import sensor, image, time, os, tf, math, uos, gc

from pyb import UART, Timer, LED

sensor.reset()

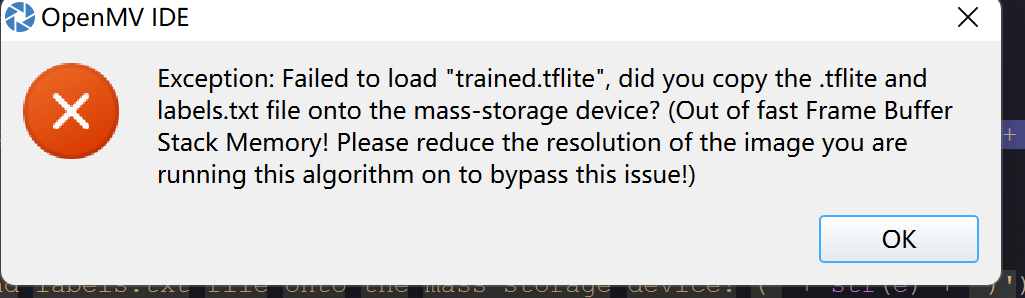

sensor.set_pixformat(sensor.GRAYSCALE)

sensor.set_framesize(sensor.QQVGA)

sensor.set_windowing((240, 240))

sensor.skip_frames(time=2000)

net = None

labels = None

min_confidence = 0.5

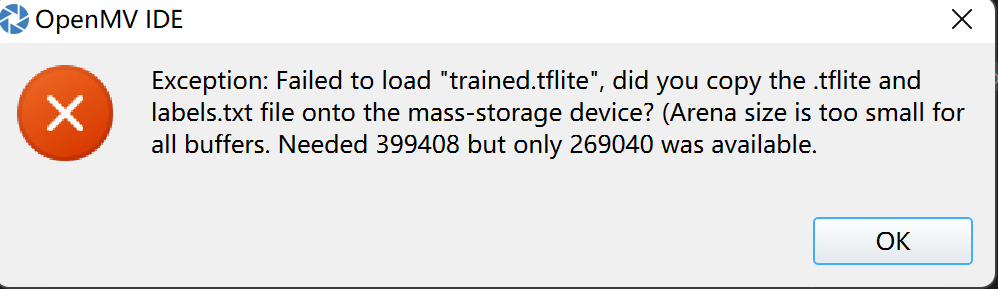

try:
    net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
except Exception as e:
    raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:
    labels = [line.rstrip('\n') for line in open("labels.txt")]
except Exception as e:
    raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

colors = [
    (255,   0,   0),
    (  0, 255,   0),
    (255, 255,   0),
    (  0,   0, 255),
    (255,   0, 255),
    (  0, 255, 255),
    (255, 255, 255),
]

clock = time.clock()

# Last received command frame: header 0xAA 0xAF 0x05 followed by payload bytes.
uart_r = [0xAA, 0xAF, 0x05, 0x02, 0x00, 0x00, 0x00, 0x00]

class Saveimage(object):
    def __init__(self):
        pass

    def Recognize(self, img):
        self.img = img
        for i, detection_list in enumerate(net.detect(self.img, thresholds=[(math.ceil(min_confidence * 255), 255)])):
            if (i == 0): continue  # background class
            if (len(detection_list) == 0): continue  # no detections for this class
            print("recognize to: "+labels[i])
            if labels[i]=='lin':
                sensor.snapshot().save("lin.jpg")
                uart_bufl[6] = 0xAA
                print("Save lin.")
            elif labels[i]=='ke':
                sensor.snapshot().save("ke.jpg")
                uart_bufl[6] = 0xBB
                print("Save ke.")
            elif labels[i]=='da':
                sensor.snapshot().save("da.jpg")
                uart_bufl[6] = 0xCC
                print("Save da.")
        # Indentation was lost in the post; these two lines are assumed to run once after the loop.
        sensor.snapshot().save("other.jpg")
        print("Save other.")

Saveimg=Saveimage()

uart = UART(3,115200)

uart.init(115200,8, parity=None, stop=1)

def ReceiveB():
    global uart_r
    size = uart.any()
    # Bytes are read only when at most len(uart_r) are pending;
    # a larger backlog is left untouched in the UART buffer.
    if size<=len(uart_r):
        for i in range(0,size):
            uart_r[i] = uart.readchar()
    # Validate the three header bytes; on a mismatch restore the default frame.
    if uart_r[0]!=0xAA:
        uart_r = [0xAA, 0xAF, 0x05, 0x02, 0x00, 0x00, 0x00, 0x00]
        LED(1).on()
        LED(2).off()
    elif uart_r[1]!=0xAF:
        uart_r = [0xAA, 0xAF, 0x05, 0x02, 0x00, 0x00, 0x00, 0x00]
        LED(1).on()
        LED(2).off()
    elif uart_r[2]!=0x05:
        uart_r = [0xAA, 0xAF, 0x05, 0x02, 0x00, 0x00, 0x00, 0x00]
        LED(1).on()
        LED(2).off()
    else:
        LED(1).off()
        LED(2).on()

uart_bufl = bytearray([0xAA,0xFF, 0xAA, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00])

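def uartsend(timer):
    # Timer callback: transmit the current frame.
    uart.write(uart_bufl)

def updatesend(custom, cx, cy, distance):
    i = 0
    sum_check = 0
    add_check = 0
    uart_bufl[2] = custom
    uart_bufl[4] = cx
    uart_bufl[5] = cy
    uart_bufl[6] = distance
    uart_bufl[3] = len(uart_bufl)-6
    # Running sum and sum-of-sums over every byte except the two checksum bytes.
    while i<(len(uart_bufl)-2):
        sum_check = sum_check+uart_bufl[i]
        add_check = add_check+sum_check
        i+=1
    # Keep only the low byte so each checksum fits into one bytearray element.
    uart_bufl[-2] = sum_check & 0xFF
    uart_bufl[-1] = add_check & 0xFF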

tim = Timer(2, freq=20)

tim.callback(uartsend)

# Defined before the loop so the tracking branch cannot hit an undefined name.
lockimg = ''

while(True):
    clock.tick()
    ReceiveB()
    print(str(uart_r))
    if uart_r[5]==0xDD:
        img0 = sensor.snapshot()
        Saveimg.Recognize(img0)
        LED(3).on()
        LED(1).off()
        LED(2).off()
        print("Saving......")
    elif uart_r[5]==0xEE:
        if uart_r[4]!=0x06:
            print("Now is locking mode\r\n")
            img1 = sensor.snapshot()
            lockimg = ''
            for i, detection_list in enumerate(net.detect(img1, thresholds=[(math.ceil(min_confidence * 255), 255)])):
                if (i == 0): continue  # background class
                if (len(detection_list) == 0): continue  # no detections for this class
                lockimg = labels[i]
                uart_bufl[7] = 0xCC
                print('reading:'+lockimg)
        else:
            img2 = sensor.snapshot()
            for k, detection_list in enumerate(net.detect(img2, thresholds=[(math.ceil(min_confidence * 255), 255)])):
                if (k == 0): continue  # background class
                if (len(detection_list) == 0): continue  # no detections for this class
                if labels[k]==lockimg:
                    print(lockimg+labels[k]+'\r\n')
                    for d in detection_list:
                        [x, y, w, h] = d.rect()
                        center_x = math.floor(x + (w / 2))
                        center_y = math.floor(y + (h / 2))
                        distance = int((80-center_x)*100*0.0024/0.42)
                        uart_bufl[4] = center_x
                        uart_bufl[5] = center_y
                        # distance can be negative; keep its low byte (two's complement).
                        uart_bufl[6] = distance & 0xFF
                        LED(3).on()
                        LED(1).off()
                        LED(2).off()
                        print('%d' % distance)
                else:
                    uart_bufl[6] = 0x3C
                    print("Don't get picture!")
    else:
        print("Wait enter a recognization mode!")
    print(clock.fps(), "fps", end="\n\n")
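
One thing that may be worth checking, though the post alone cannot confirm it is the cause: `ReceiveB()` reads nothing when `uart.any()` reports more than 8 pending bytes, so once a backlog builds up the receive path could stay stuck. Below is a minimal sketch of a parser that tolerates a backlog and resynchronizes on the header. The header bytes and the 8-byte frame length are taken from the script; `extract_frames`, `HEADER` and `FRAME_LEN` are hypothetical names, not part of the original code or of any OpenMV API.

```python
# Hypothetical helper, not part of the original script.
HEADER = bytes([0xAA, 0xAF, 0x05])  # header bytes checked in ReceiveB()
FRAME_LEN = 8                       # length of uart_r in the script


def extract_frames(pending):
    """Split `pending` into complete frames plus leftover bytes.

    Returns (frames, rest). `frames` is a list of 8-element lists that each
    start with HEADER. `rest` holds trailing bytes that may be the start of
    a frame which has not fully arrived yet.
    """
    data = bytes(pending)
    frames = []
    pos = 0
    while True:
        start = data.find(HEADER, pos)
        if start < 0:
            # No full header left: keep only a tail that could be a partial header.
            keep = len(HEADER) - 1
            return frames, data[max(pos, len(data) - keep):]
        if start + FRAME_LEN > len(data):
            # Header found but the frame is incomplete: keep it for the next call.
            return frames, data[start:]
        frames.append(list(data[start:start + FRAME_LEN]))
        pos = start + FRAME_LEN
```

On the board, the idea would be to read everything that is pending on each loop pass, prepend the `rest` from the previous call, and copy the last returned frame into `uart_r`. That wiring is left out here because it depends on the firmware, and it is an assumption that `bytes.find` is available on your build.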