Yes, but it still reports an error.


Posts by agxn


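How do I send the center coordinates as a string instead of as numbers? Do I still need a frame header and tail, and how do I add them? Posted in OpenMV Cam

This version sends them as int, but when I change it to str(x), str(a) I get an error. How should I change it?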

    def send_data(x, a):
        global uart
        # Header bytes 0x2c and 0x12, then x and a as 16-bit integers, then tail byte 0x5b
        data = ustruct.pack("<bbhhb", 0x2c, 0x12, int(x), int(a), 0x5b)
        uart.write(data)
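
One probable reason for the error is that the `h` fields in `"<bbhhb"` expect integers, so passing `str(x)` and `str(a)` to `ustruct.pack` fails. Below is a minimal sketch of one alternative. It assumes the receiver accepts the same header bytes (0x2c, 0x12) and tail byte (0x5b) wrapped around an ASCII payload. The name `build_frame`, the comma separator, and passing `uart` as a parameter are illustrative choices, not part of the original post.

```python
HEADER = bytes([0x2C, 0x12])  # same two header bytes as the packed version
TAIL = bytes([0x5B])          # same tail byte as the packed version

def build_frame(x, a):
    """Return header + "x,a" as ASCII text + tail (hypothetical frame layout)."""
    payload = "{},{}".format(int(x), int(a)).encode()
    return HEADER + payload + TAIL

def send_data(uart, x, a):
    """Write one text frame to any object with a write() method, e.g. a pyb.UART."""
    uart.write(build_frame(x, a))
```

A header and tail remain useful with text because they let the receiver find where each message starts and ends. Note that 0x2c is also the ASCII comma, so a receiver that splits on commas should strip the header first.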

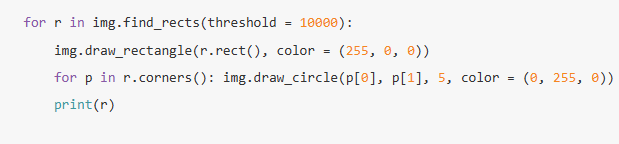

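Question about the threshold for finding rectangles. Posted in OpenMV Cam

In for r in img.find_rects(threshold = 10000): how should the threshold value be chosen? Some examples use 3000 and others use 80000. What is the choice based on?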
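
For context, the OpenMV documentation describes `threshold` as a minimum edge magnitude: rectangles whose summed Sobel edge response falls below it are not returned, so a suitable value depends on the scene (contrast, rectangle size, resolution). One hedged way to choose it is to run with a low threshold, print `r.magnitude()` for each result, and pick a value between the false detections and the real targets. The helper `pick_threshold` below is hypothetical and only performs that last arithmetic step. The magnitudes in the usage comment are made-up numbers.

```python
def pick_threshold(wanted, unwanted):
    """Return a value halfway between the strongest unwanted magnitude
    and the weakest wanted magnitude (both lists come from r.magnitude())."""
    low = max(unwanted) if unwanted else 0
    high = min(wanted)
    if high <= low:
        raise ValueError("magnitudes overlap; threshold alone cannot separate them")
    return (low + high) // 2

# On the camera (not run here), collect magnitudes with a low threshold first:
# for r in img.find_rects(threshold=1000):
#     print(r.rect(), r.magnitude())
#
# Then, with hypothetical readings:
# threshold = pick_threshold(wanted=[52000, 61000], unwanted=[2500, 9000])
```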

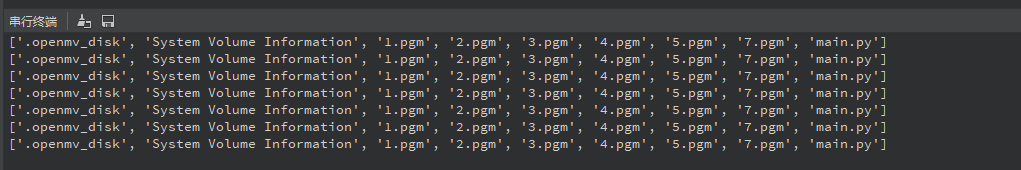

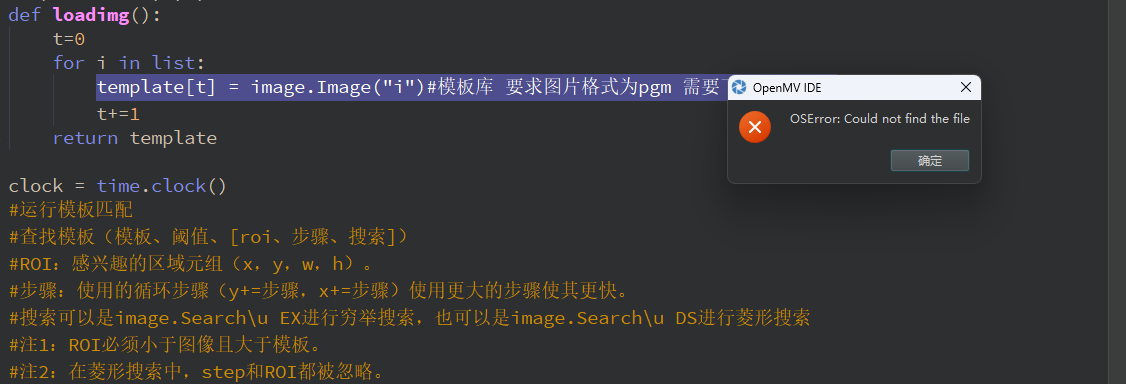

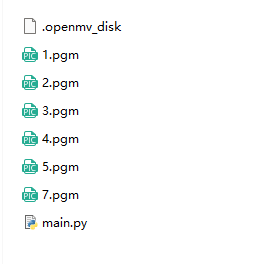

I clearly imported it, so why do I still get this error? Posted in OpenMV Cam

    import time, sensor, image
    from image import SEARCH_EX, SEARCH_DS

    # Reset the sensor
    sensor.reset()

    # Sensor configuration
    sensor.set_contrast(1)
    sensor.set_gainceiling(16)
    sensor.set_framesize(sensor.QQVGA)  # The computation is heavy, so only QQVGA can be used
    # A window can be set to reduce the image area that is searched
    #sensor.set_windowing(((640-80)//2, (480-60)//2, 80, 60))
    sensor.set_pixformat(sensor.GRAYSCALE)  # Grayscale

    # Load the templates
    # A template should be a small grayscale image, e.g. 32×32.
    list = ["/1.pgm", "/2.pgm", "/3.pgm", "/4.pgm","/5.pgm", "/7.pgm"]
    template = [0,0,0,0,0,0]

    def loadimg():
        t=0
        for i in list:
            template[t] = image.Image("i")  # Template library: images must be in pgm format and stored on the SD card
            t+=1
        return template

    clock = time.clock()
    template = loadimg()

    while (True):
        clock.tick()
        img = sensor.snapshot()  # Grab the current frame
        # roi=(0, 0, 400, 400)  # Set the region of interest
        for i in template:
            r = img.find_template(i, 0.70, step=4, search=SEARCH_EX) #, roi=(10, 0, 60, 60))  # Set the region of interest
            if r:  # If the template is found, draw a box around it
                img.draw_rectangle(r)
        print(clock.fps())
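
Assuming the error comes from template loading, one likely cause is `image.Image("i")`, which passes the literal string "i" instead of the loop variable `i`, so none of the listed files is ever opened. Below is a minimal sketch of the loader with that fixed. `load_templates` and its `loader` parameter are hypothetical names introduced so the logic can be tried off the camera. On the OpenMV Cam, `loader` would be `image.Image`.

```python
TEMPLATE_PATHS = ["/1.pgm", "/2.pgm", "/3.pgm", "/4.pgm", "/5.pgm", "/7.pgm"]

def load_templates(paths, loader):
    """Load every path with loader(path) and return the results in order."""
    # Pass the variable itself, not the string "i"
    return [loader(path) for path in paths]

# On the camera (assumption: the .pgm files sit in the root of the SD card):
# templates = load_templates(TEMPLATE_PATHS, image.Image)
```

Naming the path list `TEMPLATE_PATHS` rather than `list` also avoids shadowing the Python built-in.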

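RE: Do the LCD display and the light source shield conflict? Posted in OpenMV Cam

@kidswong999 No matter how I adjust it, the light stays at full brightness and cannot be dimmed, but the LCD does display.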

    # Untitled - By: Administrator - Fri Apr 12 2024
    import sensor, image, time
    import display
    from pyb import UART, Pin,Timer

    light = Timer(4, freq=50000).channel(1, Timer.PWM, pin=Pin("P7"))
    light.pulse_width_percent(10)  # Brightness control, 0~100

    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QQVGA)
    sensor.skip_frames(time = 2000)
    lcd = display.SPIDisplay()
    clock = time.clock()

    while(True):
        clock.tick()
        lcd.write(sensor.snapshot())
        print(clock.fps())

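Do the LCD display and the light source shield conflict? Posted in OpenMV Cam

Why can't I adjust the light source brightness once I add the LCD display code? Whatever value I put in light.pulse_width_percent(10) (brightness control, 0~100), the light stays very bright. Without the LCD display code, that is, using the example code on its own, changing the value works. Why is that?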


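I trained a two-object detection model for the OpenMV Plus. Why is the frame rate so low when it runs? Posted in OpenMV Cam

I trained it with Edge Impulse for only two kinds of objects, and it runs at just 8 FPS, which is very low. How can I make it faster?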

    # Edge Impulse - OpenMV Object Detection Example

    import sensor, image, time, os, tf, math, uos, gc

    sensor.reset()                         # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))       # Set 240x240 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    net = None
    labels = None
    min_confidence = 0.5

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    colors = [ # Add more colors if you are detecting more than 7 types of classes at once.
        (255,   0,   0),
        (  0, 255,   0),
        (255, 255,   0),
        (  0,   0, 255),
        (255,   0, 255),
        (  0, 255, 255),
        (255, 255, 255),
    ]

    clock = time.clock()
    while(True):
        clock.tick()

        img = sensor.snapshot()

        # detect() returns all objects found in the image (splitted out per class already)
        # we skip class index 0, as that is the background, and then draw circles of the center
        # of our objects

        for i, detection_list in enumerate(net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])):
            if (i == 0): continue # background class
            if (len(detection_list) == 0): continue # no detections for this class?

            print("********** %s **********" % labels[i])
            for d in detection_list:
                [x, y, w, h] = d.rect()
                center_x = math.floor(x + (w / 2))
                center_y = math.floor(y + (h / 2))
                print('x %d\ty %d' % (center_x, center_y))
                img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2)

        print(clock.fps(), "fps", end="\n\n")