# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, uos, gc

from pyb import UART

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

uart = UART(3,9600)

net = None

labels = None

try:

# load the model, alloc the model file on the heap if we have at least 64K free after loading

net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))

except Exception as e:

print(e)

raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:

labels = [line.rstrip('\n') for line in open("labels.txt")]

except Exception as e:

raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

# default settings just do one detection... change them to search the image...

for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())

img.draw_rectangle(obj.rect())

b = obj.output() # a[(),(),()];print(a[0])

c = b.index(max(b))

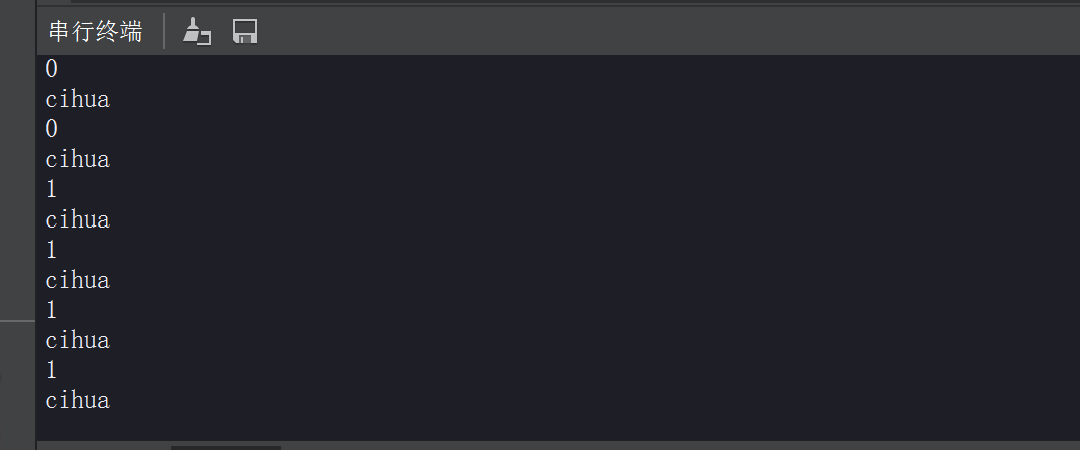

if c == 0 or c == 1:

uart.write('n'+'\n')

print(c)

print("cihua")

time.sleep_ms(500)

else :

uart.write('y'+'\n')

print(c)

print("xionghua")

time.sleep_ms(500# Edge Impulse - OpenMV Image Classification Example
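The `load_to_fb` argument to `tf.load` decides where the model is stored: it is `True` (frame buffer) when loading onto the heap would leave less than 64 KB free. A minimal sketch that pulls that expression out into a plain function so the arithmetic can be checked on a PC; the name `should_load_to_fb` and the sample sizes are hypothetical, not part of the OpenMV API:

```python
# Hypothetical helper mirroring the script's expression:
#   load_to_fb = model_size > (mem_free - 64 * 1024)
HEAP_RESERVE = 64 * 1024  # bytes of heap to keep free after loading the model


def should_load_to_fb(model_size, mem_free, reserve=HEAP_RESERVE):
    """Return True when the model should be loaded into the frame buffer.

    The model stays on the heap only if at least `reserve` bytes of heap
    would still be free once it is loaded.
    """
    return model_size > (mem_free - reserve)


# 100 KB model, 300 KB free: about 200 KB would remain, so the heap is fine.
print(should_load_to_fb(100 * 1024, 300 * 1024))  # False
# 250 KB model, 300 KB free: only about 50 KB would remain, so use the frame buffer.
print(should_load_to_fb(250 * 1024, 300 * 1024))  # True
```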

import sensor, image, time, os, tf, uos, gc

from pyb import UART

sensor.reset() # Reset and initialize the sensor.

sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)

sensor.set_framesize(sensor.QVGA) # Set frame size to QVGA (320x240)

sensor.set_windowing((240, 240)) # Set 240x240 window.

sensor.skip_frames(time=2000) # Let the camera adjust.

uart = UART(3,9600)

net = None

labels = None

try:

# load the model, alloc the model file on the heap if we have at least 64K free after loading

net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))

except Exception as e:

print(e)

raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

try:

labels = [line.rstrip('\n') for line in open("labels.txt")]

except Exception as e:

raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

clock = time.clock()

while(True):

clock.tick()

img = sensor.snapshot()

for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):

img.draw_rectangle(obj.rect())

b = obj.output() # a[(),(),()];print(a[0])

c = b.index(max(b))

if c == 0 or c == 1:

uart.write('n'+'\n')

print(c)

print("cihua")

time.sleep_ms(500)

else :

uart.write('y'+'\n')

print(c)

print("xionghua")

time.sleep_ms(500)
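The loop picks the highest-scoring class with `b.index(max(b))` and maps classes 0 and 1 to `'n'` and everything else to `'y'`, while the `labels` list is never consulted. A minimal sketch of that decision as a pure function that also looks up the class name; `decide`, `NO_CLASSES`, and the example labels and scores are hypothetical, and the 0/1 mapping is copied from the script rather than derived from any real `labels.txt`:

```python
# Hypothetical helper: argmax over the scores, then map the class to a UART command.
# Assumes, as the script does, that classes 0 and 1 mean "n" and all others mean "y".
NO_CLASSES = (0, 1)


def decide(scores, labels):
    """Return (index, label, command) for the highest-scoring class."""
    best = scores.index(max(scores))            # same argmax as the script
    label = labels[best] if best < len(labels) else str(best)
    command = 'n' if best in NO_CLASSES else 'y'
    return best, label, command


# Made-up labels and scores, for illustration only.
example_labels = ["female_a", "female_b", "male"]
print(decide([0.1, 0.7, 0.2], example_labels))  # (1, 'female_b', 'n')
print(decide([0.1, 0.2, 0.7], example_labels))  # (2, 'male', 'y')
```

Inside the `for obj in ...` loop this would be used as `idx, name, cmd = decide(obj.output(), labels)` followed by `uart.write(cmd + '\n')`, which keeps the printed name in sync with `labels.txt` instead of hard-coding `"cihua"`/`"xionghua"`.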

![11a7-image.png](https://fcdn.singtown.com/81070053-2ba3-4deb-8fbd-c2b3e077dd0c.png)
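On the other end of UART 3 (9600 baud), the receiving device sees a stream of `y\n` / `n\n` lines, and bytes can arrive split across reads. A minimal sketch of a line-buffered parser for that stream, assuming the receiver can run Python; the post does not say what the receiving device is, and `CommandParser` is a hypothetical name:

```python
# Hypothetical receiver-side parser for the "y\n" / "n\n" lines the OpenMV
# script writes. Incomplete lines are buffered until their newline arrives.
class CommandParser:
    def __init__(self):
        self._buf = b""

    def feed(self, chunk):
        """Add raw bytes; return the list of complete commands ('y' or 'n')."""
        self._buf += chunk
        commands = []
        while b"\n" in self._buf:
            line, self._buf = self._buf.split(b"\n", 1)
            line = line.strip()                 # tolerate a stray '\r'
            if line in (b"y", b"n"):
                commands.append(line.decode())
            # any other line (noise, garbage) is ignored
        return commands


parser = CommandParser()
print(parser.feed(b"n\ny"))   # ['n']  ('y' is still waiting for its newline)
print(parser.feed(b"\nn\n"))  # ['y', 'n']
```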
