Or does it support image segmentation with a MobileNetV1-based network?


Posts by 6zad

-

Does the OpenMV H7 Plus support running a self-written edge-detection model based on a MobileNetV1 network? Posted in OpenMV Cam

Does the OpenMV H7 Plus support running a self-written edge-detection model based on a MobileNetV1 network?

-

Which lightweight neural networks does the OpenMV4 H7 Plus support? Posted in OpenMV Cam

Which lightweight neural networks does the OpenMV4 H7 Plus support? Can it run models such as EfficientNet, SqueezeNet, or Tiny YOLO?

-

Can I deploy a neural network model I trained myself to run on OpenMV? Posted in OpenMV Cam

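Can I deploy a neural network model I trained myself to run on OpenMV? Or do I have to use a model trained on the Edge Impulse website? Alternatively, can I write my own model and train it on the Edge Impulse website?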

-

RE: Can MobileNetV3-Small be deployed and run on OpenMV? Posted in OpenMV Cam

I wrote my own MobileNetV3-Small, and I want to upload it to Edge Impulse for training and then deploy it to OpenMV. Is that workflow possible?

-

Can MobileNetV3-Small be deployed and run on OpenMV? Posted in OpenMV Cam

Can the lightweight MobileNetV3-Small be deployed and run on OpenMV? Does anyone here know? I would appreciate any advice. Thanks.

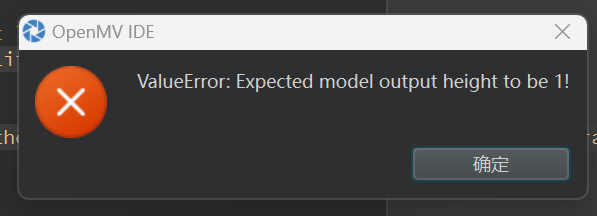

    import sensor, image, time, os, tf, uos, gc

    sensor.reset()                         # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.RGB565)    # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)      # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))       # Set 240x240 window.
    sensor.skip_frames(time=2000)          # Let the camera adjust.

    net = None
    labels = None

    try:
        # load the model, alloc the model file on the heap if we have at least 64K free after loading
        net = tf.load("trained.tflite", load_to_fb=uos.stat('trained.tflite')[6] > (gc.mem_free() - (64*1024)))
    except Exception as e:
        print(e)
        raise Exception('Failed to load "trained.tflite", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    try:
        labels = [line.rstrip('\n') for line in open("labels.txt")]
    except Exception as e:
        raise Exception('Failed to load "labels.txt", did you copy the .tflite and labels.txt file onto the mass-storage device? (' + str(e) + ')')

    clock = time.clock()
    while(True):
        clock.tick()

        img = sensor.snapshot()

        # default settings just do one detection... change them to search the image...
        for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            # This combines the labels and confidence values into a list of tuples
            predictions_list = list(zip(labels, obj.output()))

            for i in range(len(predictions_list)):
                print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

        print(clock.fps(), "fps")

The code above was generated by transfer learning on the Edge Impulse website, and it shows an error when I run it. How can I fix this?

This is urgent. Could someone experienced please take a look?
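
For anyone reading the script, the `load_to_fb` argument packs a size comparison into one line. Below is a minimal sketch of that same comparison in plain Python, so it can be checked off-device. The helper name `should_load_to_fb` and its `reserve` parameter are hypothetical and not part of the OpenMV API; on the camera the two inputs would come from `uos.stat('trained.tflite')[6]` and `gc.mem_free()` as in the script.

```python
def should_load_to_fb(model_size, free_heap, reserve=64 * 1024):
    """Mirror the load_to_fb expression from the posted script.

    model_size: size of the .tflite file in bytes
    free_heap:  free heap in bytes before loading
    reserve:    bytes to keep free on the heap (64 KiB in the script)

    Returns True when the model is larger than the heap space left
    after setting aside the reserve, i.e. when the script would pass
    load_to_fb=True to tf.load().
    """
    return model_size > (free_heap - reserve)


# Hypothetical numbers for illustration only.
print(should_load_to_fb(500_000, 200_000))  # model larger than usable heap
print(should_load_to_fb(100_000, 200_000))  # model fits with reserve kept
```

Printing the two inputs on the device before calling `tf.load()` may help narrow down whether a load failure is related to memory, although the actual error message is needed to say for sure.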