import sensor, time, image

# Initial setup (reset first; reset() restores the default contrast and gain ceiling)
sensor.reset()
sensor.set_contrast(3)
sensor.set_gainceiling(16)
sensor.set_framesize(sensor.QQVGA)
sensor.set_pixformat(sensor.GRAYSCALE)

# Skip two seconds of frames so the camera finishes initializing
sensor.skip_frames(time = 2000)

# Load the Haar cascade used by the face-detection calls below.
# By default this will use all stages; fewer stages is faster but less accurate.
face_cascade = image.HaarCascade("frontalface", stages=30)
print(face_cascade)

# Variable that will hold the reference face descriptor
kpts1 = None

############# Enroll the reference face ##############
while kpts1 is None:
    img = sensor.snapshot()
    img.draw_string(0, 0, "Looking for a face...")
    # Find faces
    objects1 = img.find_features(face_cascade, threshold=0.5, scale=1.3)
    if objects1:
        # Expand the face ROI by 31 pixels in each direction
        face1 = (objects1[0][0]-31, objects1[0][1]-31, objects1[0][2]+31*2, objects1[0][3]+31*2)
        # Compute the LBP descriptor inside the expanded face ROI
        kpts1 = img.find_lbp(face1)
        # image.save_descriptor(keypoint, "/%s.orb"%(kpts))  # keypoint is the file name to save the descriptor under
        # Draw a box around the face
        img.draw_rectangle(objects1[0])

# Print the enrolled descriptor
print(kpts1)
#img.draw_keypoints(kpts1, size=24)  # only meaningful for ORB keypoints, not LBP descriptors
img = sensor.snapshot()
time.sleep_ms(2000)  # pause 2 s (older firmware's time.sleep() took milliseconds)

# FPS clock
clock = time.clock()

########### Compare descriptors ##########
while True:
    clock.tick()
    img = sensor.snapshot()
    # find_features() takes scale, not scale_factor
    objects2 = img.find_features(face_cascade, threshold=0.5, scale=1.3)
    #try:
    #    face2 = (objects2[0][0]-31, objects2[0][1]-31, objects2[0][2]+31*2, objects2[0][3]+31*2)
    #except IndexError as e:
    #    print(objects2[0])
    for face2 in objects2:
        # Compute the LBP descriptor of this detected face
        kpts2 = img.find_lbp(face2)
        if kpts2:
            # Compare the reference descriptor with this one. For LBP descriptors
            # the result is a distance: smaller means more similar.
            # kpts3 = image.load_descriptor(keypoint)
            c = image.match_descriptor(kpts1, kpts2)
            print(c)
            #match = c[6]  # c[6] holds the number of matches (ORB keypoints only)
            if c < 15000:
                img.draw_rectangle(face2)
                # Center of the (x, y, w, h) face rectangle
                x = face2[0] + face2[2]/2
                y = face2[1] + face2[3]/2
                print([int(x), int(y)])
                img.draw_cross(int(x), int(y), size=10)
                # print(kpts2, "matched:%d dt:%d"%(match, c[7]))
            else:
                print("NONE")
    # Draw FPS
    img.draw_string(0, 0, "FPS:%.2f"%(clock.fps()))
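The enrollment step widens the detected face rectangle by 31 pixels on every side before calling find_lbp. For a face near the frame edge, that rectangle starts at negative coordinates or runs past the image. Depending on firmware, such an ROI may be clipped or rejected, so clamping it explicitly avoids relying on either behavior. A minimal sketch in plain Python follows; the helper name `expand_roi` is hypothetical (not an OpenMV function), and the 160 x 120 size in the example is simply the QQVGA resolution the script uses.

```python
def expand_roi(rect, margin, img_w, img_h):
    """Grow an (x, y, w, h) rectangle by `margin` pixels on every side,
    then clip it so it stays inside an img_w x img_h frame.
    Hypothetical helper, not part of the OpenMV API."""
    x, y, w, h = rect
    x0 = max(0, x - margin)
    y0 = max(0, y - margin)
    x1 = min(img_w, x + w + margin)  # exclusive right edge
    y1 = min(img_h, y + h + margin)  # exclusive bottom edge
    return (x0, y0, x1 - x0, y1 - y0)


# A face near the top-left corner of a 160 x 120 (QQVGA) frame gets clipped
print(expand_roi((10, 5, 40, 40), 31, 160, 120))
# A face in the middle gets the same result as the original 31-pixel formula
print(expand_roi((60, 40, 40, 40), 31, 160, 120))
```

On the camera, the enrollment call could then read `kpts1 = img.find_lbp(expand_roi(objects1[0], 31, img.width(), img.height()))`.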

Posts by 6lgq

-

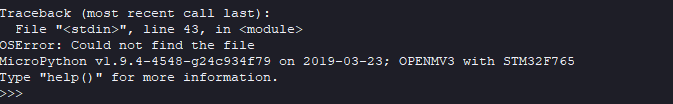

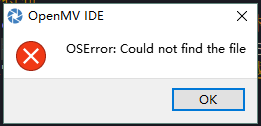

Why does my OpenMV keep disconnecting on its own when running this program, especially when it detects a face? Posted in OpenMV Cam

-

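RE: How do I get find_features to return the coordinates of the center of the face region, and what does the roi parameter of find_features mean? Posted in OpenMV Cam

I hope someone experienced can tell me how to output the center coordinates of the face region.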
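find_features returns a list of (x, y, w, h) rectangles, one per detected face, so the center of a face is its top-left corner plus half its width and height. The LBP script earlier in this listing does the same thing with `face2[0] + face2[2]/2`. A small stand-alone sketch follows; the function name `face_center` and the sample rectangles are hypothetical.

```python
def face_center(rect):
    """Return the integer (cx, cy) center of an (x, y, w, h) rectangle,
    such as one entry of the list returned by img.find_features()."""
    x, y, w, h = rect
    return (x + w // 2, y + h // 2)


# Example detections, in the (x, y, w, h) form find_features() returns
objects = [(30, 20, 50, 50), (100, 40, 31, 31)]
for r in objects:
    print(face_center(r))
```

In the detection loop on the camera this would be `cx, cy = face_center(r)` followed by `img.draw_cross(cx, cy, size=10)`.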

-

How do I get find_features to return the coordinates of the center of the face region, and what does the roi parameter of find_features mean? Posted in OpenMV Cam

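import sensor, time, image, pyb

led = pyb.LED(3)

# Reset sensor
sensor.reset()

# Sensor settings
sensor.set_contrast(1)
sensor.set_gainceiling(16)
# HQVGA and GRAYSCALE are the best for face tracking.
sensor.set_framesize(sensor.HQVGA)
sensor.set_pixformat(sensor.GRAYSCALE)

face_cascade = image.HaarCascade("frontalface", stages=25)
# If stages is not passed, the default number of stages is used.
# Fewer stages speed up matching but reduce accuracy.
print(face_cascade)

clock = time.clock()

while (True):
    clock.tick()
    img = sensor.snapshot()
    # Note: a lower scale factor scales the image down more and detects smaller objects.
    # A higher threshold gives a higher detection rate, with more false positives.
    # roi is optional: an (x, y, w, h) region of interest to search in, passed as
    # roi=(x, y, w, h). Without it the whole image is searched. A bare `roi` after
    # keyword arguments is a SyntaxError, so it is omitted here.
    objects = img.find_features(face_cascade, threshold=0.75, scale=1.35)
    # image.find_features(cascade, threshold=0.5, scale=1.5): a larger threshold
    # matches faster but also raises the error rate; scale changes the size of
    # the features being matched.
    # Draw a box around each detected object
    for r in objects:
        img.draw_rectangle(r)
        a = r[0]  # each r is an (x, y, w, h) tuple, so r[0] is the x coordinate
        print(a)
        #time.sleep(100)  # 100 ms delay
    print(clock.fps())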
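The roi asked about here is a region of interest: an optional (x, y, w, h) rectangle that limits where find_features searches, given as a keyword such as `roi=(60, 40, 120, 80)`. A sketch of building a centered ROI for a given frame size follows. The helper `center_roi` and its `fraction` parameter are illustrative assumptions; the 240 x 160 size is the HQVGA resolution this script selects.

```python
def center_roi(img_w, img_h, fraction=0.5):
    """Return an (x, y, w, h) rectangle covering the central `fraction`
    of an img_w x img_h frame. Hypothetical helper for building roi=..."""
    w = int(img_w * fraction)
    h = int(img_h * fraction)
    x = (img_w - w) // 2
    y = (img_h - h) // 2
    return (x, y, w, h)


# Central half of an HQVGA (240 x 160) frame
print(center_roi(240, 160))
```

On the camera this would be `objects = img.find_features(face_cascade, threshold=0.75, scale=1.35, roi=center_roi(img.width(), img.height()))`. Faces outside the central region are then ignored, and a smaller search region also means less work per frame.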

-

What does the face_tracking example in the tutorial do? It just keeps printing FPS values. How can I make it find a moving face more reliably? Posted in OpenMV Cam

import sensor, time, image

# Reset sensor
sensor.reset()
sensor.set_contrast(3)
sensor.set_gainceiling(16)
sensor.set_framesize(sensor.VGA)
sensor.set_windowing((320, 240))
sensor.set_pixformat(sensor.GRAYSCALE)

# Skip a few frames to let the image settle
sensor.skip_frames(time = 2000)

# Load the Haar cascade
# By default this will use all stages; fewer stages is faster but less accurate.
face_cascade = image.HaarCascade("frontalface", stages=25)
print(face_cascade)

# First set of keypoints
kpts1 = None

# Find a face
while kpts1 is None:
    img = sensor.snapshot()
    img.draw_string(0, 0, "Looking for a face...")
    # Find faces
    objects = img.find_features(face_cascade, threshold=0.5, scale=1.25)
    if objects:
        # Expand the ROI by 31 pixels in every direction
        face = (objects[0][0]-31, objects[0][1]-31, objects[0][2]+31*2, objects[0][3]+31*2)
        # Extract keypoints using the detected face size as the ROI
        kpts1 = img.find_keypoints(threshold=10, scale_factor=1.1, max_keypoints=100, roi=face)
        # Draw a rectangle around the first face
        img.draw_rectangle(objects[0])

# Draw keypoints
print(kpts1)
img.draw_keypoints(kpts1, size=24)
img = sensor.snapshot()
time.sleep_ms(2000)  # pause 2 s (older firmware's time.sleep() took milliseconds)

# FPS clock
clock = time.clock()

while (True):
    clock.tick()
    img = sensor.snapshot()
    # Extract keypoints from the whole frame
    kpts2 = img.find_keypoints(threshold=10, scale_factor=1.1, max_keypoints=100, normalized=True)
    if (kpts2):
        # Match the first set of keypoints against the second
        c = image.match_descriptor(kpts1, kpts2, threshold=85)
        match = c[6]  # c[6] holds the number of matches
        if (match > 5):
            img.draw_rectangle(c[2:6])
            img.draw_cross(c[0], c[1], size=10)
            print(kpts2, "matched:%d dt:%d"%(match, c[7]))
    # Draw FPS
    img.draw_string(0, 0, "FPS:%.2f"%(clock.fps()))
    print(clock.fps())

Does this example just keep printing the FPS value once it has found a face?
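As written, `print(clock.fps())` runs on every pass of the `while (True)` loop, so the FPS value is printed every frame whether or not a face is matched; the match line is printed only when more than 5 keypoints match. To make the reported position of a moving face steadier, one common option (a swapped-in technique, not part of the OpenMV example) is to smooth the matched center `(c[0], c[1])` with an exponential moving average. It steadies the output but does not improve detection itself. The class name `CenterSmoother` and the `alpha` value are assumptions.

```python
class CenterSmoother:
    """Exponential moving average of a tracked (x, y) point.
    alpha near 1 follows new measurements quickly; near 0 smooths more."""

    def __init__(self, alpha=0.5):
        self.alpha = alpha
        self.x = None
        self.y = None

    def update(self, x, y):
        if self.x is None:
            # First measurement: start directly from it
            self.x, self.y = float(x), float(y)
        else:
            # Move part of the way toward the new measurement
            self.x += self.alpha * (x - self.x)
            self.y += self.alpha * (y - self.y)
        return (int(self.x), int(self.y))


smoother = CenterSmoother(alpha=0.5)
for cx, cy in [(100, 80), (110, 80), (90, 84)]:
    print(smoother.update(cx, cy))
```

In the tracking loop it would sit inside `if (match > 5):` as `sx, sy = smoother.update(c[0], c[1])` followed by `img.draw_cross(sx, sy, size=10)`.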